Deep Learning for Design and Retrieval of Nano-photonic Structures: Paper Notes

Authors: Itzik Malkiel, Achiya Nagler, Michael Mrejen, Uri Arieli, Lior Wolf and Haim Suchowski

Core idea

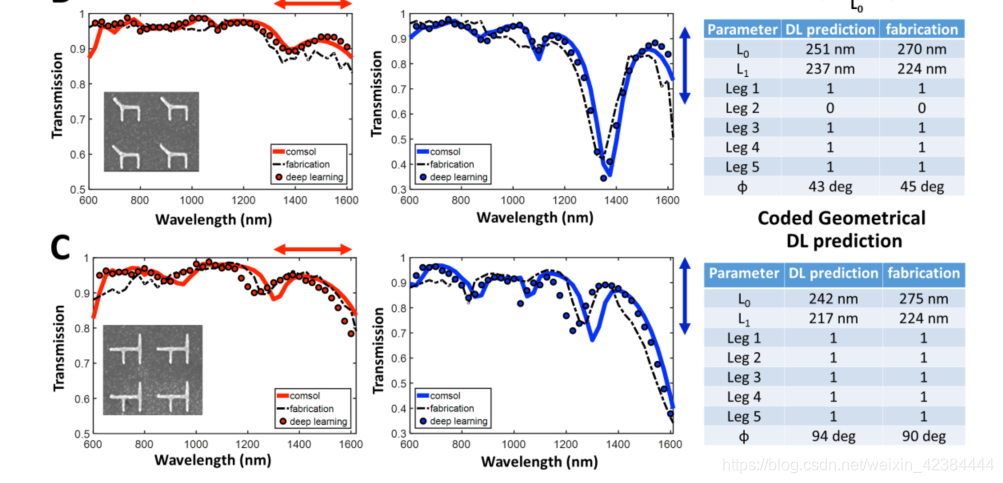

To illustrate our approach, we design a novel deep network, which uses a fully connected neural network. We introduce a bi-directional deep neural network architecture composed of two networks, where the first is a Geometry-predicting-network (GPN) that predicts a geometry based on the spectra (the inverse path) and the second is a Spectrum-predicting-network (SPN) that predicts the spectra based on the nanoparticle geometry (the direct path).

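The paper builds a bidirectional deep neural network out of fully connected layers. It has two parts: a geometry-predicting network (GPN, spectra to geometry) and a spectrum-predicting network (SPN, geometry to spectra).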
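Below is a minimal NumPy sketch of the two directions. It assumes plain fully connected layers with random, untrained weights. The sizes `GEOMETRY_LEN` and `HIDDEN` are hypothetical placeholders and are not the paper's values. Material properties are left out here for brevity. The sketch only shows how data flows: spectra go into the GPN and come out as geometry, and geometry goes into the SPN and comes out as a spectrum.

```python
import numpy as np

rng = np.random.default_rng(0)

SPECTRUM_LEN = 43  # transmission samples per spectrum (from the paper)
GEOMETRY_LEN = 8   # hypothetical length of the geometry vector
HIDDEN = 64        # hypothetical hidden-layer width


def init_mlp(sizes):
    """Random (weight, bias) pairs for a fully connected network."""
    return [(rng.normal(0.0, 0.1, (n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def mlp(params, x):
    """Forward pass: ReLU after every hidden layer, linear output layer."""
    for i, (w, b) in enumerate(params):
        x = x @ w + b
        if i < len(params) - 1:
            x = np.maximum(x, 0.0)
    return x


# Inverse path (GPN): both polarization spectra -> geometry
gpn = init_mlp([2 * SPECTRUM_LEN, HIDDEN, HIDDEN, GEOMETRY_LEN])
# Direct path (SPN): geometry -> one transmission spectrum
spn = init_mlp([GEOMETRY_LEN, HIDDEN, HIDDEN, SPECTRUM_LEN])

spectra = rng.uniform(0.0, 1.0, (5, 2 * SPECTRUM_LEN))  # 5 dummy samples
geometry = mlp(gpn, spectra)        # (5, GEOMETRY_LEN)
reconstructed = mlp(spn, geometry)  # (5, SPECTRUM_LEN)
print(geometry.shape, reconstructed.shape)  # (5, 8) (5, 43)
```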

Training

Training data:

The data contains more than 15,000 experiments, where each experiment is composed of a plasmonic nanostructure with a defined geometry, its metal properties, the host’s permittivity and the optical response spectrum for both horizontal and vertical polarizations of the incoming field (see Methods).

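The dataset contains more than 15,000 samples (experiments). Each sample consists of the following:

- a plasmonic nanostructure with specific geometric parameters (e.g. the "H"-shaped structure in the figure),
- its metal properties,
- the host permittivity, and
- the optical response spectra for both horizontal and vertical polarizations of the incident field.

The nanoparticle thickness was held constant and is not a dataset parameter, although the authors note that it could be added: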

While we maintained the thickness of the nanoparticle constant, it can of course influence the transmission spectra (blueshift and resonance strength). This can be added as a parameter to the learning dataset and allow refined predictions.
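One sample could be held in a record like the one below. This is a sketch only: the field names, the string encoding of the metal and the example values are all hypothetical. Thickness has no field because the paper holds it constant. The check in `__post_init__` enforces the 43-point spectra and the [0, 1] transmission range described in the paper.

```python
from dataclasses import dataclass

import numpy as np

N_WAVELENGTHS = 43  # fixed number of spectral samples (from the paper)


@dataclass
class Experiment:
    """One training sample. Field names and encodings are hypothetical.

    Thickness is not a field because the paper holds it constant.
    """
    geometry: np.ndarray      # encoded shape, lengths and angle
    metal: str                # metal identifier (assumed encoding)
    host_permittivity: float  # permittivity of the surrounding host
    spectrum_h: np.ndarray    # transmission, horizontal polarization
    spectrum_v: np.ndarray    # transmission, vertical polarization

    def __post_init__(self):
        # Reject spectra that do not match the fixed 43-point grid or [0, 1] range
        for s in (self.spectrum_h, self.spectrum_v):
            if s.shape != (N_WAVELENGTHS,):
                raise ValueError(f"expected {N_WAVELENGTHS} samples, got {s.shape}")
            if s.min() < 0.0 or s.max() > 1.0:
                raise ValueError("transmission values must lie in [0, 1]")


sample = Experiment(
    geometry=np.array([1.0, 0.0, 150.0, 80.0, 90.0]),  # placeholder values
    metal="gold",                                       # placeholder value
    host_permittivity=2.25,                             # placeholder value
    spectrum_h=np.full(N_WAVELENGTHS, 0.5),
    spectrum_v=np.full(N_WAVELENGTHS, 0.7),
)
print(sample.spectrum_h.shape)  # (43,)
```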

- GPN input: the spectra for horizontal and vertical polarization, plus the material properties.

The spectra are fed into the network in a raw form of 43 Y (transmission) values, where X values (wavelengths) are fixed.
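Because the X values are fixed, the network sees only the 43 Y values. A spectrum sampled on some other grid would first need to be resampled onto that fixed grid. The sketch below does this with linear interpolation. Both the resampling step and the 600 to 1800 nm range are assumptions for illustration; the paper only states that the 43 wavelengths are fixed.

```python
import numpy as np

# Placeholder grid: the paper fixes 43 wavelengths, but the range used here
# (600-1800 nm) is an assumption for illustration only.
WAVELENGTHS_NM = np.linspace(600.0, 1800.0, 43)


def to_network_input(wl_nm, transmission):
    """Resample a spectrum onto the fixed grid and return only the Y values."""
    order = np.argsort(wl_nm)  # np.interp requires increasing x-coordinates
    return np.interp(WAVELENGTHS_NM, wl_nm[order], transmission[order])


wl = np.linspace(500.0, 2000.0, 500)  # dense dummy spectrum
t = 0.5 + 0.4 * np.sin(wl / 200.0)
x = to_network_input(wl, t)
print(x.shape)  # (43,)
```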

First part: 3-layer network

Second part: 6-layer network

Number of neurons passed between the two parts:

The first part of the inverse network is composed of three parallel pipelines that contain three layers. The second part of the inverse function consists of a sequential network that receives as input, 250 neurons from each part of the parallel network.
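Here is a sketch of the two-part inverse network. It rests on one assumption that the quote does not state: the three parallel pipelines take the horizontal spectrum, the vertical spectrum and the material properties respectively.

- Each pipeline has three layers and ends in 250 neurons.
- The three 250-wide outputs are concatenated and fed to a six-layer sequential part, matching the notes above.
- Apart from the 250 figure, all hidden widths are hypothetical, and so are the sizes `MAT` and `GEOM`.

```python
import numpy as np

rng = np.random.default_rng(0)
SPEC, MAT, GEOM = 43, 4, 8  # MAT and GEOM are hypothetical sizes


def init_mlp(sizes):
    """Random (weight, bias) pairs for a fully connected network."""
    return [(rng.normal(0.0, 0.05, (a, b)), np.zeros(b))
            for a, b in zip(sizes[:-1], sizes[1:])]


def mlp(params, x, relu_last=False):
    """Forward pass with ReLU on hidden layers (and optionally the last one)."""
    for i, (w, b) in enumerate(params):
        x = x @ w + b
        if i < len(params) - 1 or relu_last:
            x = np.maximum(x, 0.0)
    return x


# First part: three parallel 3-layer pipelines, each ending in 250 neurons
pipe_h = init_mlp([SPEC, 500, 500, 250])  # horizontal spectrum (assumed)
pipe_v = init_mlp([SPEC, 500, 500, 250])  # vertical spectrum (assumed)
pipe_m = init_mlp([MAT, 100, 100, 250])   # material properties (assumed)
# Second part: 6-layer sequential network on the 3 * 250 concatenated features
head = init_mlp([750, 500, 500, 250, 250, 100, GEOM])


def gpn(spec_h, spec_v, material):
    feats = np.concatenate([mlp(pipe_h, spec_h, relu_last=True),
                            mlp(pipe_v, spec_v, relu_last=True),
                            mlp(pipe_m, material, relu_last=True)], axis=-1)
    return mlp(head, feats)


out = gpn(rng.uniform(size=(3, SPEC)),
          rng.uniform(size=(3, SPEC)),
          rng.uniform(size=(3, MAT)))
print(out.shape)  # (3, 8)
```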

- GPN output: the geometric parameters of the nanostructure

its encoded structure, length sizes and angle
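The quote names three components of the GPN output: an encoded structure, lengths and an angle. A hypothetical way to split a raw output vector into those parts is shown below. The layout and the sizes `N_SHAPES` and `N_LENGTHS` are placeholders, not values from the paper.

```python
import numpy as np

# Hypothetical output layout: the paper names the components
# (encoded structure, lengths, angle) but their sizes here are placeholders.
N_SHAPES = 2   # number of candidate template shapes
N_LENGTHS = 5  # number of edge lengths


def decode_geometry(y):
    """Split a raw GPN output vector into (shape index, lengths, angle)."""
    if y.shape[-1] != N_SHAPES + N_LENGTHS + 1:
        raise ValueError("unexpected output length")
    shape_idx = int(np.argmax(y[:N_SHAPES]))    # highest-scoring shape
    lengths = y[N_SHAPES:N_SHAPES + N_LENGTHS]  # regressed edge lengths
    angle = float(y[-1])                        # regressed angle
    return shape_idx, lengths, angle


y = np.array([0.1, 0.9, 120.0, 80.0, 60.0, 60.0, 40.0, 30.0])
shape_idx, lengths, angle = decode_geometry(y)
print(shape_idx, lengths.tolist(), angle)  # 1 [120.0, 80.0, 60.0, 60.0, 40.0] 30.0
```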

- SPN input: the geometry predicted by the GPN and the given material properties

The direct network receives the predicted geometry as input and the given material properties.

- SPN output: two spectra generated from the predicted geometric parameters

The output of the direct network is a transmission graph of 43 regressed values in the range [0,1], and is run twice, once for each polarization.
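The quote says only that the direct network "is run twice, once for each polarization". The sketch below rests on two assumptions. First, the polarization is passed as an extra one-hot input. Second, a sigmoid on the output keeps the 43 regressed values inside [0, 1]. Neither mechanism is confirmed by the paper, and the sizes `GEOM` and `MAT` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
GEOM, MAT, SPEC = 8, 4, 43  # GEOM and MAT are hypothetical sizes


def init_mlp(sizes):
    """Random (weight, bias) pairs for a fully connected network."""
    return [(rng.normal(0.0, 0.1, (a, b)), np.zeros(b))
            for a, b in zip(sizes[:-1], sizes[1:])]


# +2 inputs for a one-hot polarization flag (assumption)
spn_params = init_mlp([GEOM + MAT + 2, 128, 128, SPEC])


def spn(geometry, material, pol):
    """Predict one 43-point transmission spectrum for polarization `pol` (0 or 1)."""
    onehot = np.zeros((geometry.shape[0], 2))
    onehot[:, pol] = 1.0
    x = np.concatenate([geometry, material, onehot], axis=-1)
    for i, (w, b) in enumerate(spn_params):
        x = x @ w + b
        if i < len(spn_params) - 1:
            x = np.maximum(x, 0.0)   # ReLU on hidden layers
    return 1.0 / (1.0 + np.exp(-x))  # sigmoid keeps transmission in [0, 1]


geometry = rng.uniform(size=(4, GEOM))
material = rng.uniform(size=(4, MAT))
spec_h = spn(geometry, material, pol=0)  # first run: horizontal polarization
spec_v = spn(geometry, material, pol=1)  # second run: vertical polarization
print(spec_h.shape, spec_v.shape)  # (4, 43) (4, 43)
```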

- Activation function

The ReLU activation function (33) is used throughout the network.

- Loss function:

Mean Squared Error (MSE)
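The two building blocks named above are ReLU, max(0, x), and MSE, (1/N) Σ (ŷ_i − y_i)². They can be written in a few lines. This sketch averages the MSE over every element. That is a common convention, but it is an assumption here: the paper does not say how the average is taken.

```python
import numpy as np


def relu(x):
    """Rectified linear unit: max(0, x) elementwise."""
    return np.maximum(x, 0.0)


def mse(pred, target):
    """Mean squared error averaged over all elements (assumed reduction)."""
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    return float(np.mean((pred - target) ** 2))


print(relu(np.array([-1.0, 0.5])).tolist())              # [0.0, 0.5]
print(round(mse([0.2, 0.4, 0.9], [0.0, 0.4, 1.0]), 4))  # 0.0167
```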

Other content

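After building the network, the paper studies two further questions. The first is how network depth affects prediction performance. The second is how well the inverse-prediction approach works for sensing applications.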

In order to gain insight on the effect of the network’s depth on the prediction performance, we conduct an extensive comparison between different network architectures.

Next, we have examined the strength of the inverse predictive approach for sensing applications where plasmonic nanostructures are used to enhance the light-matter interaction with various chemicals and bio-molecules.

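Thoughts on the paper

- The paper's explanation of the first part, the inverse prediction, is not very clear.

- As I understand it, the network produces two outputs. One is the output of the inverse prediction. The other is the output of the direct prediction when it is fed that inverse prediction. Each output is compared with its ground truth to compute an error. I expect that composing the two networks directly in this way gives better performance.

- Structurally, the paper is very similar to the idea behind CycleGAN. Both have two generator networks; here these are the inverse-prediction generator and the direct-prediction generator. Both also have two discriminator networks; here these are the checks on the inverse-prediction output and on the direct-prediction output. The difference is that this paper's structure does not form a cycle.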
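The two-output objective described in the second bullet can be sketched as follows. This reflects my reading only and is not confirmed by the paper. The GPN output is scored against the true geometry, and the SPN output on the predicted geometry is scored against the input spectra. Both networks are stand-in linear maps here, and the weighting `alpha = 1.0` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
SPEC, GEOM = 43, 8  # GEOM is a hypothetical size

W_gpn = rng.normal(0.0, 0.1, (2 * SPEC, GEOM))  # stand-in inverse model
W_spn = rng.normal(0.0, 0.1, (GEOM, 2 * SPEC))  # stand-in direct model (both polarizations)


def mse(a, b):
    return float(np.mean((a - b) ** 2))


def bidirectional_loss(spectra, true_geometry, alpha=1.0):
    """Sum of the geometry error and the spectrum-reconstruction error."""
    pred_geometry = spectra @ W_gpn       # output 1: inverse prediction
    pred_spectra = pred_geometry @ W_spn  # output 2: direct prediction fed by output 1
    geometry_loss = mse(pred_geometry, true_geometry)
    spectrum_loss = mse(pred_spectra, spectra)
    return geometry_loss + alpha * spectrum_loss, geometry_loss, spectrum_loss


spectra = rng.uniform(size=(6, 2 * SPEC))
true_geometry = rng.uniform(size=(6, GEOM))
total, g, s = bidirectional_loss(spectra, true_geometry)
print(round(total, 4), round(g, 4), round(s, 4))
```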
