ZooKeeper Docker Cluster Configuration

2017-12-19 16:34


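This post shows how to set up a ZooKeeper cluster in Docker.

First, let's look at the Docker configuration for a single ZooKeeper node.

It consists of two files: a Dockerfile and a shell script.

The Dockerfile: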

```dockerfile
FROM openjdk:8-jre-alpine

# Install required packages
RUN apk add --no-cache \
        bash \
        su-exec

ENV ZOO_USER=zookeeper \
    ZOO_CONF_DIR=/conf \
    ZOO_DATA_DIR=/data \
    ZOO_DATA_LOG_DIR=/datalog \
    ZOO_PORT=2181 \
    ZOO_TICK_TIME=2000 \
    ZOO_INIT_LIMIT=5 \
    ZOO_SYNC_LIMIT=2 \
    ZOO_MAX_CLIENT_CNXNS=60

# Add a user and make dirs
RUN set -ex; \
    adduser -D "$ZOO_USER"; \
    mkdir -p "$ZOO_DATA_LOG_DIR" "$ZOO_DATA_DIR" "$ZOO_CONF_DIR"; \
    chown "$ZOO_USER:$ZOO_USER" "$ZOO_DATA_LOG_DIR" "$ZOO_DATA_DIR" "$ZOO_CONF_DIR"

ARG GPG_KEY=D0BC8D8A4E90A40AFDFC43B3E22A746A68E327C1
ARG DISTRO_NAME=zookeeper-3.4.11

# Download Apache ZooKeeper, verify its PGP signature, untar and clean up.
# Older releases such as 3.4.11 are only served from archive.apache.org.
# The SKS pool (ha.pool.sks-keyservers.net) has been shut down, so
# keyserver.ubuntu.com is tried first.
RUN set -ex; \
    apk add --no-cache --virtual .build-deps \
        ca-certificates \
        gnupg \
        libressl; \
    wget -q "https://archive.apache.org/dist/zookeeper/$DISTRO_NAME/$DISTRO_NAME.tar.gz"; \
    wget -q "https://archive.apache.org/dist/zookeeper/$DISTRO_NAME/$DISTRO_NAME.tar.gz.asc"; \
    export GNUPGHOME="$(mktemp -d)"; \
    gpg --keyserver keyserver.ubuntu.com --recv-keys "$GPG_KEY" || \
    gpg --keyserver pgp.mit.edu --recv-keys "$GPG_KEY" || \
    gpg --keyserver keyserver.pgp.com --recv-keys "$GPG_KEY"; \
    gpg --batch --verify "$DISTRO_NAME.tar.gz.asc" "$DISTRO_NAME.tar.gz"; \
    tar -xzf "$DISTRO_NAME.tar.gz"; \
    mv "$DISTRO_NAME/conf/"* "$ZOO_CONF_DIR"; \
    rm -rf "$GNUPGHOME" "$DISTRO_NAME.tar.gz" "$DISTRO_NAME.tar.gz.asc"; \
    apk del .build-deps

WORKDIR $DISTRO_NAME
VOLUME ["$ZOO_DATA_DIR", "$ZOO_DATA_LOG_DIR"]

# 2181: client port, 2888: follower-to-leader port, 3888: leader election port
EXPOSE $ZOO_PORT 2888 3888

# ZOOCFGDIR tells zkServer.sh where to find zoo.cfg
ENV PATH=$PATH:/$DISTRO_NAME/bin \
    ZOOCFGDIR=$ZOO_CONF_DIR

COPY docker-entrypoint.sh /
RUN chmod 755 /docker-entrypoint.sh

ENTRYPOINT ["/docker-entrypoint.sh"]
CMD ["zkServer.sh", "start-foreground"]
```

docker-entrypoint.sh:

```bash
#!/bin/bash

set -e

# Allow the container to be started with `--user`:
# when running as root, fix ownership and re-run this script as $ZOO_USER
if [[ "$1" = 'zkServer.sh' && "$(id -u)" = '0' ]]; then
    chown -R "$ZOO_USER" "$ZOO_DATA_DIR" "$ZOO_DATA_LOG_DIR"
    exec su-exec "$ZOO_USER" "$0" "$@"
fi

# Generate the config only if it doesn't exist
if [[ ! -f "$ZOO_CONF_DIR/zoo.cfg" ]]; then
    CONFIG="$ZOO_CONF_DIR/zoo.cfg"

    echo "clientPort=$ZOO_PORT" >> "$CONFIG"
    echo "dataDir=$ZOO_DATA_DIR" >> "$CONFIG"
    echo "dataLogDir=$ZOO_DATA_LOG_DIR" >> "$CONFIG"

    echo "tickTime=$ZOO_TICK_TIME" >> "$CONFIG"
    echo "initLimit=$ZOO_INIT_LIMIT" >> "$CONFIG"
    echo "syncLimit=$ZOO_SYNC_LIMIT" >> "$CONFIG"

    echo "maxClientCnxns=$ZOO_MAX_CLIENT_CNXNS" >> "$CONFIG"

    # ZOO_SERVERS is a space-separated list of server.N=host:2888:3888 entries;
    # it is deliberately left unquoted so each entry becomes its own line
    for server in $ZOO_SERVERS; do
        echo "$server" >> "$CONFIG"
    done
fi

# Write myid only if it doesn't exist (defaults to 1)
if [[ ! -f "$ZOO_DATA_DIR/myid" ]]; then
    echo "${ZOO_MY_ID:-1}" > "$ZOO_DATA_DIR/myid"
fi

exec "$@"
```

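With these two files you can build the image. For example, with DaoCloud: put both files in a GitHub repository, create a DaoCloud project that points to that repository, and DaoCloud builds the image for you. As the shell script shows, several settings can be customized, mainly the contents of zoo.cfg. ZOO_MY_ID, ZOO_SERVERS, ZOO_TICK_TIME and the other properties can all be set through environment variables when the container starts.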
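To see how these environment variables end up in zoo.cfg without starting a container, here is a minimal sketch of a hypothetical `render_zoo_cfg` helper, which is not part of the image. It mirrors the config-generation part of docker-entrypoint.sh but prints to stdout instead of writing `$ZOO_CONF_DIR/zoo.cfg`. The fallback values are assumed to match the ENV defaults in the Dockerfile above.

```shell
#!/bin/sh
# Hypothetical helper (not part of the image): prints the zoo.cfg that
# docker-entrypoint.sh would generate from the current environment.
# Fallbacks mirror the ENV defaults declared in the Dockerfile.
render_zoo_cfg() {
    echo "clientPort=${ZOO_PORT:-2181}"
    echo "dataDir=${ZOO_DATA_DIR:-/data}"
    echo "dataLogDir=${ZOO_DATA_LOG_DIR:-/datalog}"
    echo "tickTime=${ZOO_TICK_TIME:-2000}"
    echo "initLimit=${ZOO_INIT_LIMIT:-5}"
    echo "syncLimit=${ZOO_SYNC_LIMIT:-2}"
    echo "maxClientCnxns=${ZOO_MAX_CLIENT_CNXNS:-60}"
    # Same unquoted word splitting as the entrypoint: one line per server entry
    for server in $ZOO_SERVERS; do
        echo "$server"
    done
}
```

For example, `ZOO_TICK_TIME=3000 render_zoo_cfg` should print the default settings except for `tickTime=3000`, with no `server.N` lines because ZOO_SERVERS is unset.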

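The most useful ones are ZOO_SERVERS and ZOO_MY_ID. When building a cluster, ZOO_MY_ID sets this node's own id and ZOO_SERVERS lists the addresses of all ZooKeeper servers in the ensemble.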
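Each node's ZOO_SERVERS value follows a fixed pattern: every server appears as `server.N=host:2888:3888`, and the node's own entry uses `0.0.0.0`, presumably so its quorum and election listeners bind on all interfaces inside the container. The sketch below generates that string. The `zoo_servers_for` name and the `zoo1..zooN` hostnames are assumptions taken from the docker-compose example in this post, not something the image provides.

```shell
#!/bin/sh
# Hypothetical helper: builds the ZOO_SERVERS value for node $1 of a
# $2-node ensemble whose hosts are named zoo1..zooN.
# The node's own entry uses 0.0.0.0 instead of its hostname.
zoo_servers_for() {
    my_id=$1
    count=$2
    result=""
    i=1
    while [ "$i" -le "$count" ]; do
        if [ "$i" -eq "$my_id" ]; then
            host=0.0.0.0
        else
            host="zoo$i"
        fi
        result="$result server.$i=$host:2888:3888"
        i=$((i + 1))
    done
    # Strip the leading space added by the first iteration
    echo "${result# }"
}
```

For instance, `zoo_servers_for 2 3` yields `server.1=zoo1:2888:3888 server.2=0.0.0.0:2888:3888 server.3=zoo3:2888:3888`, the same value used for zoo2 in the compose file. The matching ZOO_MY_ID is simply the first argument.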

For a standalone ZooKeeper, just start a container from the image built above.

To build a cluster with docker-compose, use something like the following:

```yaml
version: '3.1'

services:
  zoo1:
    image: xxxx/zookeeper
    restart: always
    hostname: zoo1
    ports:
      - "2181:2181"
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=0.0.0.0:2888:3888 server.2=zoo2:2888:3888 server.3=zoo3:2888:3888

  zoo2:
    image: xxxx/zookeeper
    restart: always
    hostname: zoo2
    ports:
      - "2182:2181"
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888 server.2=0.0.0.0:2888:3888 server.3=zoo3:2888:3888

  zoo3:
    image: xxxx/zookeeper
    restart: always
    hostname: zoo3
    ports:
      - "2183:2181"
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888 server.2=zoo2:2888:3888 server.3=0.0.0.0:2888:3888
```

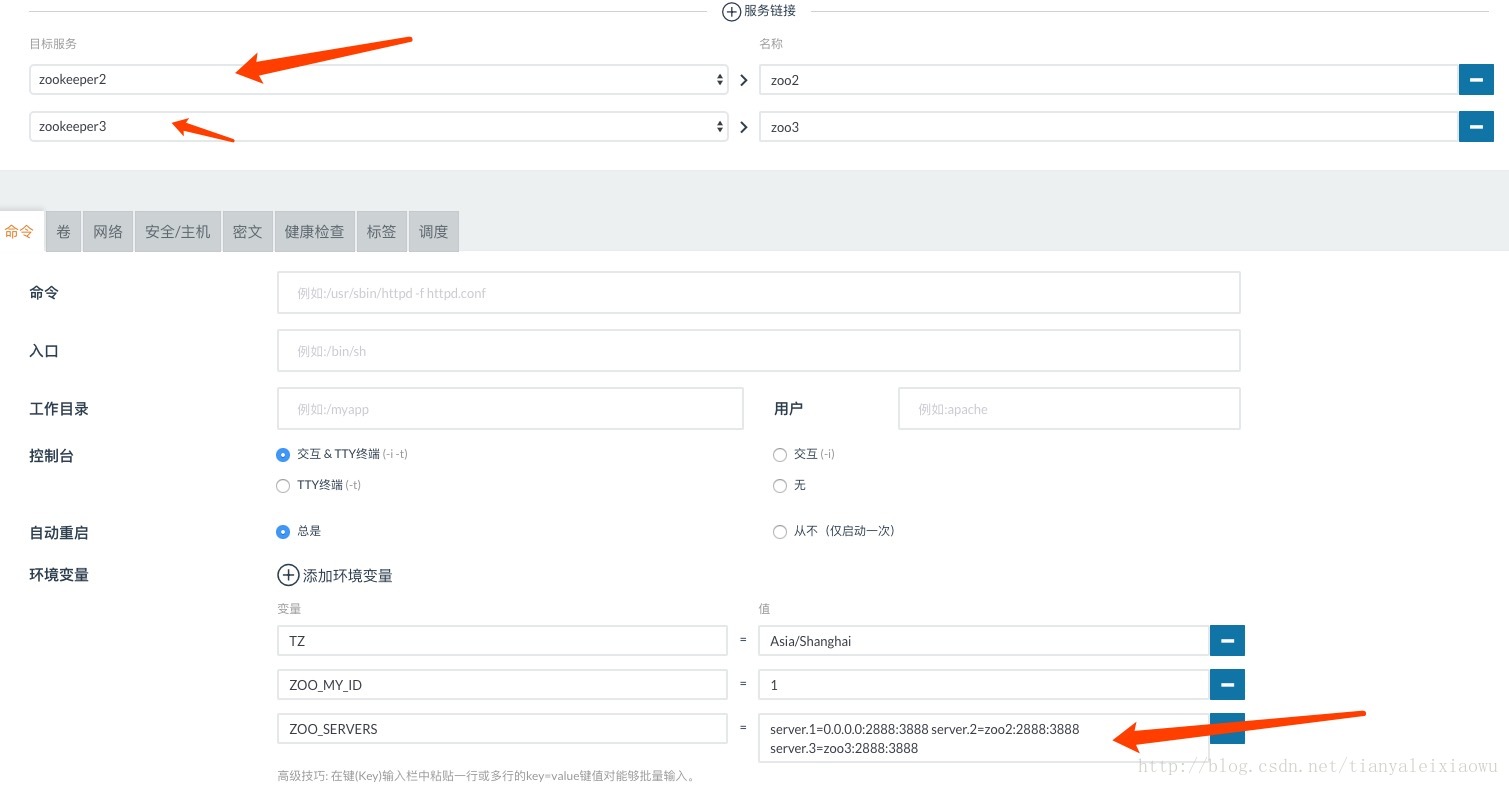

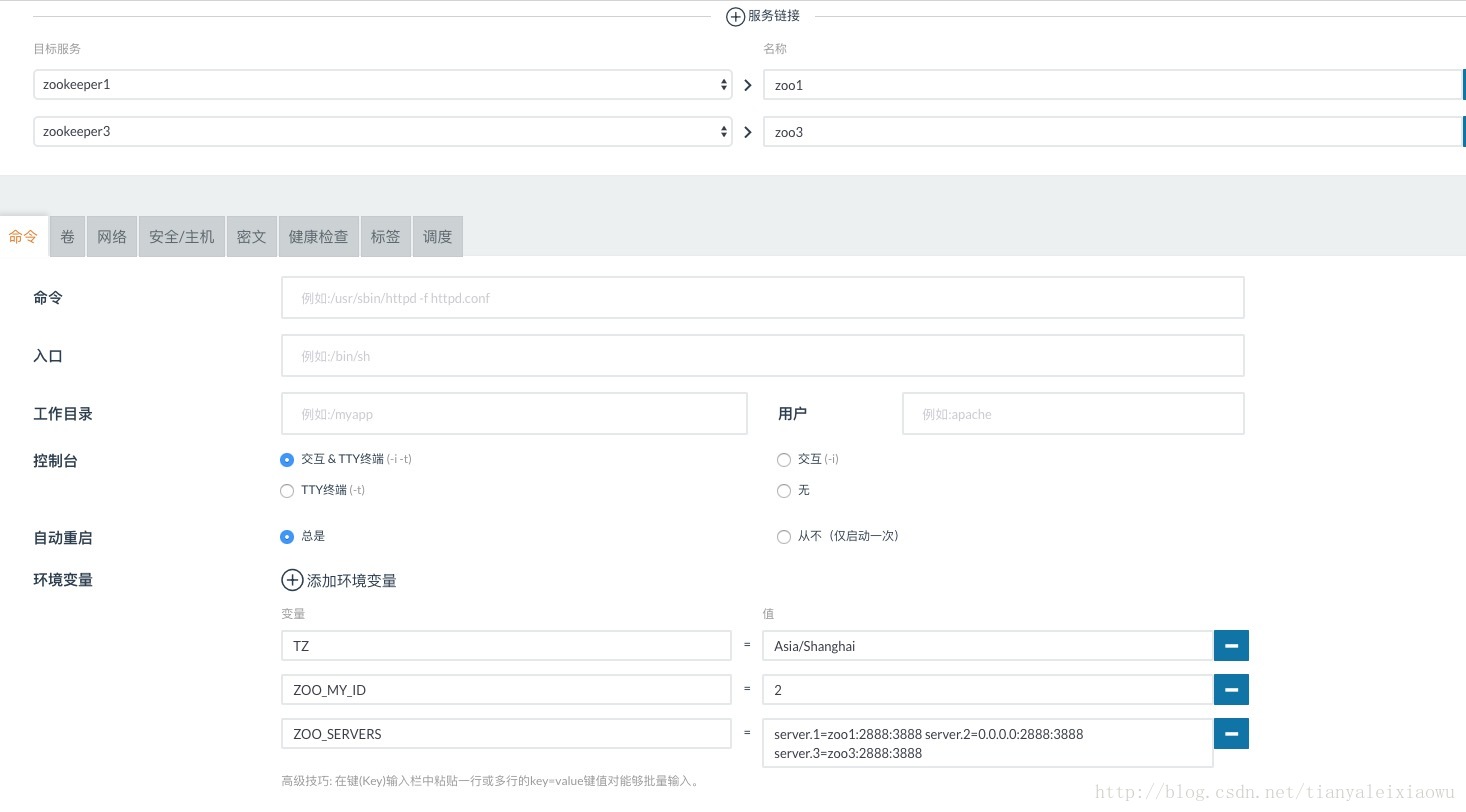

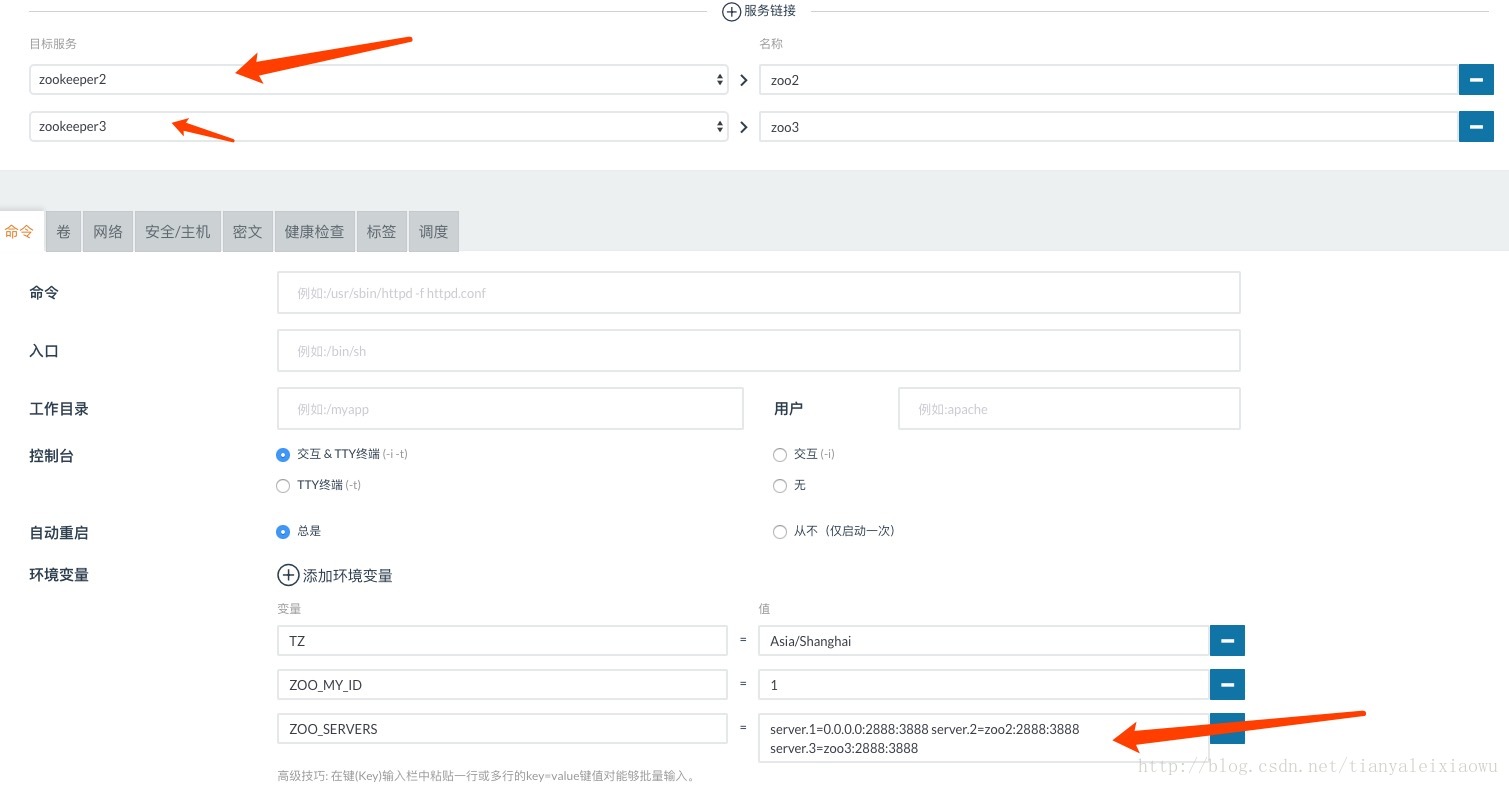

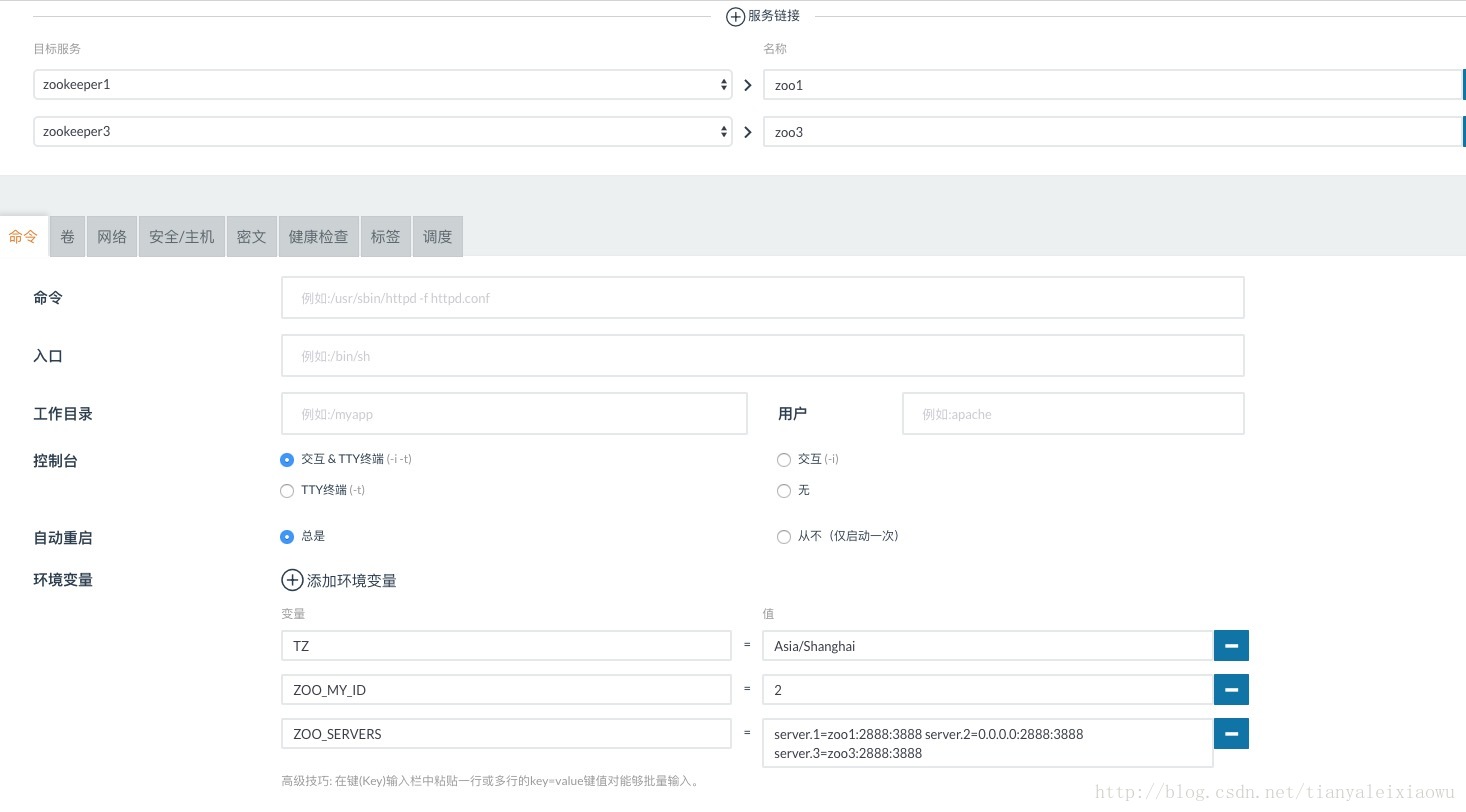

Replace `image` with your own image address. I built the cluster with Rancher; here is the Rancher configuration:

zookeeper1:

zookeeper2:

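zookeeper3 is configured the same way: add service links (i.e. Docker links) to the other two services, then set the environment variables.

Once all three containers are running, the ZooKeeper cluster is up.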

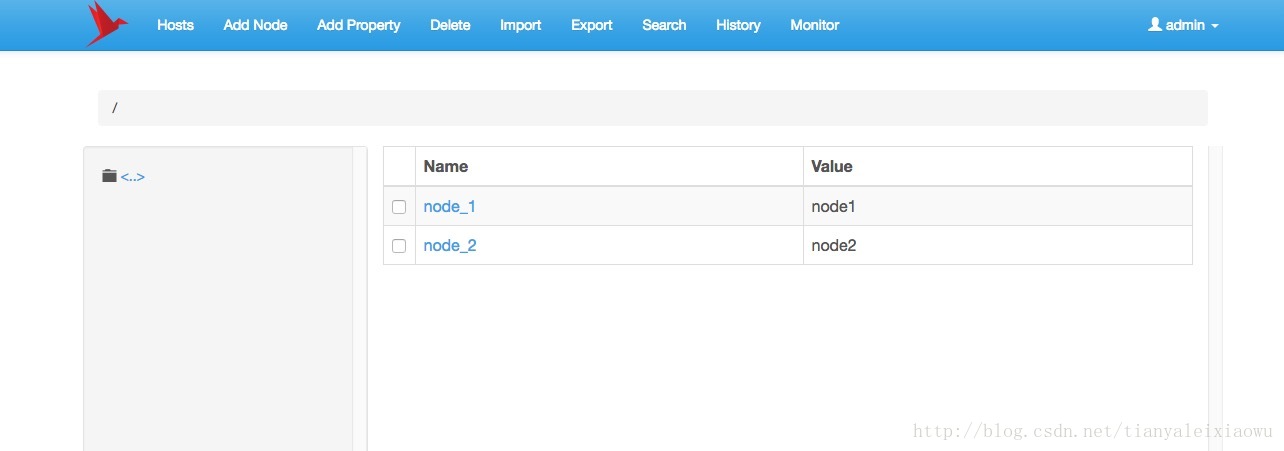

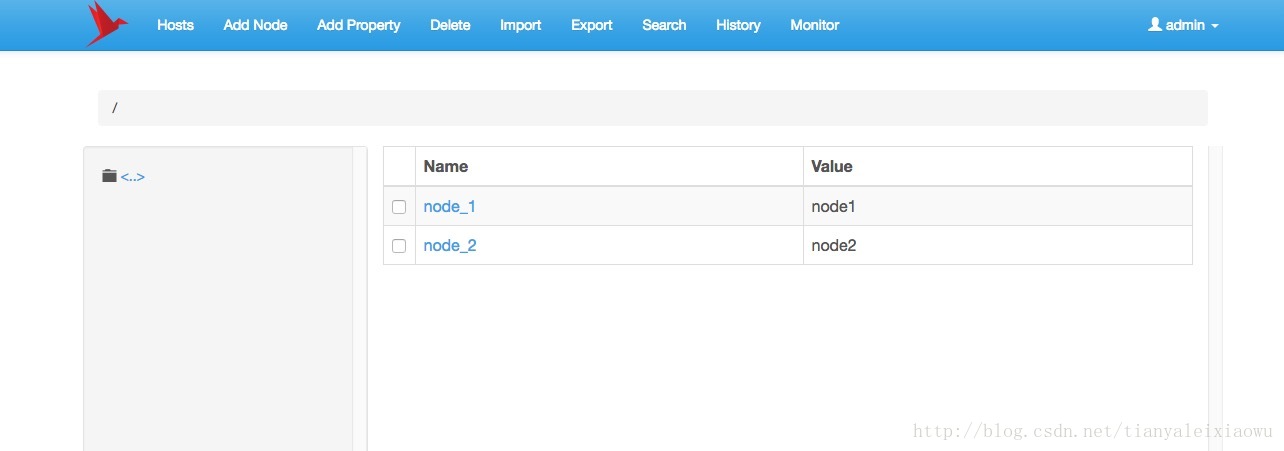

To confirm that the cluster works, exec into container 1 and create a node, then check that the node is visible from containers 2 and 3.

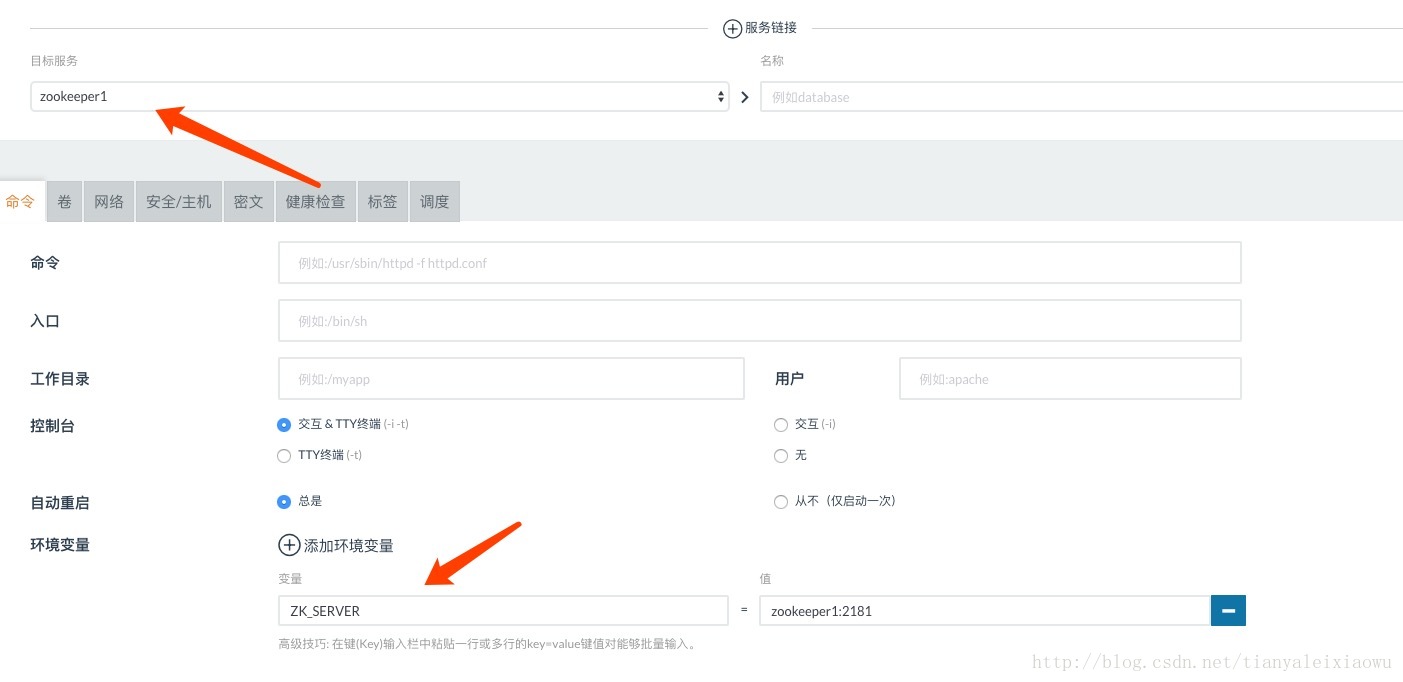
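Another quick check is each server's role. The sketch below parses the `Mode:` line of the `srvr` four-letter command, which is enabled by default in ZooKeeper 3.4. `zk_mode` and `check_ensemble` are hypothetical helper names. A healthy ensemble is assumed to mean exactly one leader, with every other node a follower.

```shell
#!/bin/sh
# Reads the output of ZooKeeper's "srvr" command on stdin and prints
# the value of its "Mode:" line (leader, follower or standalone).
zk_mode() {
    sed -n 's/^Mode: *//p'
}

# Hypothetical check: takes one mode per argument and succeeds only if
# exactly one node is the leader and all the others are followers.
check_ensemble() {
    leaders=0
    for mode in "$@"; do
        case "$mode" in
            leader) leaders=$((leaders + 1)) ;;
            follower) ;;
            *) return 1 ;;  # standalone or empty output means no quorum
        esac
    done
    [ "$leaders" -eq 1 ]
}
```

Against the docker-compose setup above, something like `echo srvr | nc localhost 2182 | zk_mode` should print `leader` or `follower` for zoo2, assuming `nc` is installed on the host. Collecting the three modes and passing them to `check_ensemble` then gives a pass/fail result.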

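Next, let's deploy zkui, a web UI for ZooKeeper, in Docker as well and use it to view the state of the ZooKeeper cluster.

You can build the zkui jar yourself or download a prebuilt one. The directory used to build the image looks like this:

The Dockerfile: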

```dockerfile
# java:8 is deprecated on Docker Hub; openjdk:8-jre is the maintained equivalent
FROM openjdk:8-jre

WORKDIR /var/app

COPY zkui-*.jar /var/app/zkui.jar
COPY config.cfg /var/app/config.cfg
COPY bootstrap.sh /var/app/bootstrap.sh
RUN chmod 755 /var/app/bootstrap.sh

# zkui listens on serverPort from config.cfg
EXPOSE 9090
ENTRYPOINT ["/var/app/bootstrap.sh"]
```

bootstrap.sh:

```sh
#!/bin/sh

# Defaults, each overridable through an environment variable
ZK_SERVER=${ZK_SERVER:-"localhost:2181"}
USER_SET=${USER_SET:-"{\"users\": [{ \"username\":\"admin\" , \"password\":\"manager\",\"role\": \"ADMIN\" \},{ \"username\":\"appconfig\" , \"password\":\"appconfig\",\"role\": \"USER\" \}]\}"}
LOGIN_MESSAGE=${LOGIN_MESSAGE:-"Please login using admin/manager or appconfig/appconfig."}

# Patch config.cfg in place. "|" is used as the sed delimiter because
# LOGIN_MESSAGE contains "/", which would break an s/.../.../ expression.
sed -i "s|^zkServer=.*\$|zkServer=$ZK_SERVER|" /var/app/config.cfg
sed -i "s|^userSet = .*\$|userSet = $USER_SET|" /var/app/config.cfg
sed -i "s|^loginMessage=.*\$|loginMessage=$LOGIN_MESSAGE|" /var/app/config.cfg

echo "Starting zkui with server $ZK_SERVER"
# zkui reads config.cfg from the working directory (/var/app)
exec java -jar /var/app/zkui.jar
```

config.cfg:

```properties
#Server Port
serverPort=9090

#Comma-separated list of all the zookeeper servers
zkServer=localhost:2181,localhost:2181

#Http path of the repository. Ignore if you don't intend to upload files from repository.
scmRepo=http://myserver.com/@rev1=
#Path appended to the repo url. Ignore if you don't intend to upload files from repository.
scmRepoPath=//appconfig.txt

#if set to true then ldap authentication is used, else userSet is used for authentication.
ldapAuth=false
ldapDomain=mycompany,mydomain
#ldap authentication url. Ignore if using file based authentication.
ldapUrl=ldap://<ldap_host>:<ldap_port>/dc=mycom,dc=com
#Specific roles for ldap authenticated users. Ignore if using file based authentication.
ldapRoleSet={"users": [{ "username":"domain\\user1" , "role": "ADMIN" }]}
userSet = {"users": [{ "username":"admin" , "password":"manager","role": "ADMIN" },{ "username":"appconfig" , "password":"appconfig","role": "USER" }]}

#Set to prod in production and dev in local. Setting to dev will clear history each time.
env=prod
jdbcClass=org.h2.Driver
jdbcUrl=jdbc:h2:zkui
jdbcUser=root
jdbcPwd=manager

#If you want to use mysql db to store history then comment the h2 db section and uncomment the lines below.
#jdbcClass=com.mysql.jdbc.Driver
#jdbcUrl=jdbc:mysql://localhost:3306/zkui
#jdbcUser=root
#jdbcPwd=manager

loginMessage=Please login using admin/manager or appconfig/appconfig.

#session timeout 5 mins/300 secs.
sessionTimeout=300

#Default 5 seconds to keep short lived zk sessions. If you have large data then the read will take more than 30 seconds so increase this accordingly.
#A bigger zkSessionTimeout means the connection will be held longer and resource consumption will be high.
zkSessionTimeout=5

#Block PWD exposure over rest call.
blockPwdOverRest=false

#ignore rest of the props below if https=false.
https=false
keystoreFile=/home/user/keystore.jks
keystorePwd=password
keystoreManagerPwd=password

# The default ACL to use for all creation of nodes. If left blank, then all nodes will be universally accessible
# Permissions are based on single character flags: c (Create), r (read), w (write), d (delete), a (admin), * (all)
# For example defaultAcl={"acls": [{"scheme":"ip", "id":"192.168.1.192", "perms":"*"}, {"scheme":"ip", "id":"192.168.1.0/24", "perms":"r"}]}
defaultAcl=

# Set X-Forwarded-For to true if zkui is behind a proxy
X-Forwarded-For=false
```

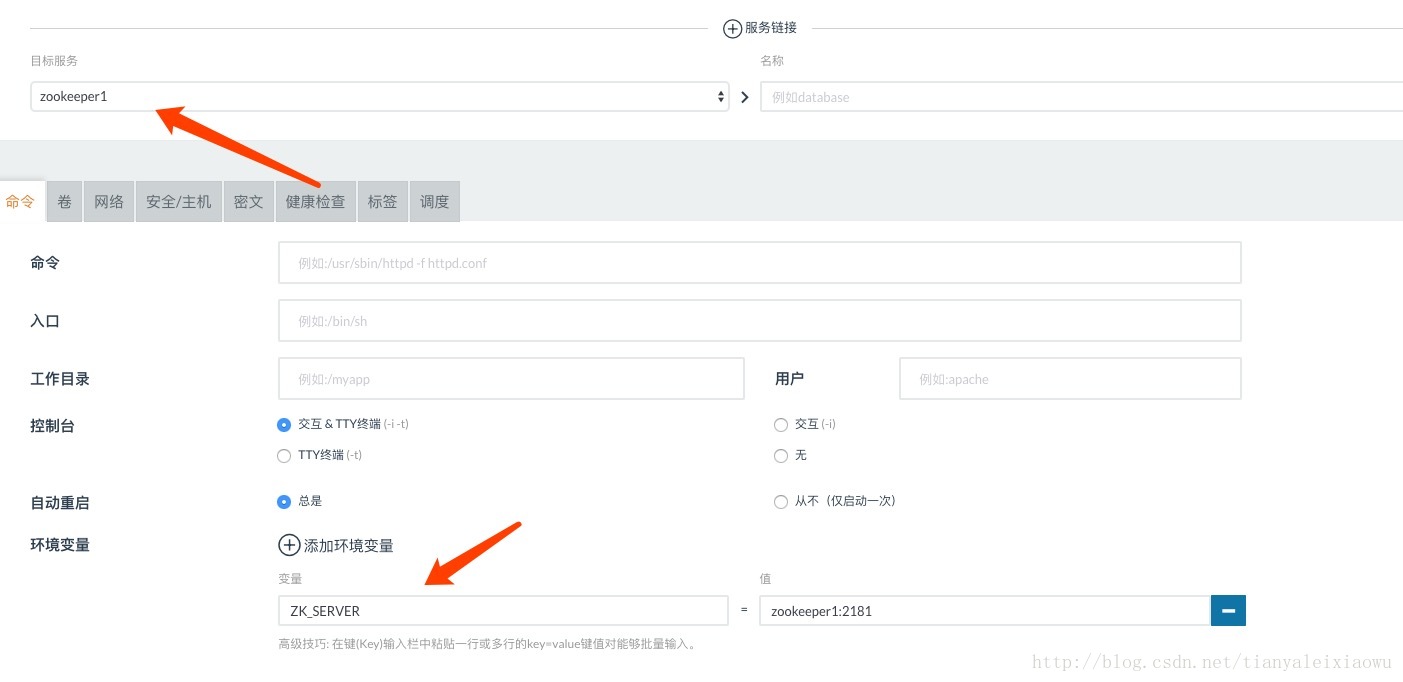

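As you can see, the only setting you need to provide is the ZooKeeper server address. After building the image, deploy it to Rancher:

I specified only one server here, but you can list several, for example all three ZooKeeper servers, separated by commas.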
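For several servers, ZK_SERVER is just a comma-separated `host:port` list. Here is a minimal sketch that builds such a list and writes it into a zkui config file the way bootstrap.sh does. `join_zk_servers` and `set_zk_server` are hypothetical names. To avoid GNU-only `sed -i`, the sketch goes through a temporary file.

```shell
#!/bin/sh
# Hypothetical helper: joins host:port arguments into the comma-separated
# list expected by zkui's zkServer setting (and bootstrap.sh's ZK_SERVER).
join_zk_servers() {
    out=""
    for s in "$@"; do
        out="${out:+$out,}$s"
    done
    echo "$out"
}

# Hypothetical helper: rewrites the zkServer= line of config file $1 with
# value $2. "|" is the sed delimiter, so values containing "/" are safe.
set_zk_server() {
    sed "s|^zkServer=.*\$|zkServer=$2|" "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}
```

When running the zkui image itself, you can pass the same value as an environment variable, e.g. `docker run -e ZK_SERVER=zoo1:2181,zoo2:2181,zoo3:2181 ...`. This assumes the zkui container can resolve the zoo1 to zoo3 hostnames, i.e. it shares a network or links with them.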

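Once it starts, open the UI and log in with the username admin and the password manager.

You can create a node from the command line on any of the ZooKeeper servers and then view it in zkui.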

