Redis Cluster Setup

2017-11-15 13:41


1 Test environment

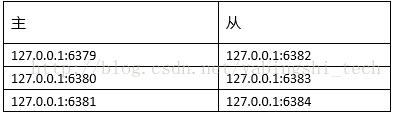

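To keep the setup simple, all nodes are installed on the same server. Master/replica mapping:

127.0.0.1:6382 is a replica of 127.0.0.1:6379, 127.0.0.1:6383 is a replica of 127.0.0.1:6380, and 127.0.0.1:6384 is a replica of 127.0.0.1:6381.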

2 Setup steps

2.1 Setting up the cluster manually

2.1.1 Prepare the nodes

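A complete, highly available cluster needs at least 6 nodes (3 masters, each with one replica). Every node must set cluster-enabled yes in its configuration so that Redis runs in cluster mode.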

2.1.1.1 Download the source, extract it, and compile

wget http://download.redis.io/releases/redis-3.2.11.tar.gz

tar xzf redis-3.2.11.tar.gz

cd redis-3.2.11

make

2.1.1.2 Copy the required files

mkdir /usr/local/redis

cp redis.conf /usr/local/redis

cd src

cp redis-server /usr/local/redis

cp redis-benchmark /usr/local/redis

cp redis-cli /usr/local/redis

cd /usr/local/redis

mkdir conf

cp redis.conf conf/

cd conf

Create a configuration file for each node. Taking the node on port 6380 as an example:

cp redis.conf redis-6380.conf

Edit redis-6380.conf and set:

port 6380

pidfile /var/run/redis_6380.pid

cluster-enabled yes

cluster-node-timeout 15000

cluster-require-full-coverage no

cluster-config-file "nodes-6380.conf"

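Note: the first time a node starts without a cluster configuration file, it creates one automatically. The file name is controlled by the cluster-config-file parameter; the nodes-{port}.conf format is recommended so that multiple nodes on the same machine do not overwrite each other's file and corrupt the cluster state.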

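Redis maintains the cluster configuration file itself (for example when nodes are added, go offline, or fail over), so do not edit it by hand.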
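Creating six almost identical files by hand invites copy-paste mistakes. Below is a minimal sketch, assuming the base redis.conf sits in the current directory (/usr/local/redis/conf here); make_node_conf is a hypothetical helper name. Rather than editing lines in place, it appends the per-node settings, relying on Redis using the last occurrence of a directive in the file.

```shell
# make_node_conf BASE PORT (hypothetical helper): write redis-PORT.conf as a copy
# of BASE followed by the per-node cluster settings used in this article.
# Redis keeps the last occurrence of a directive, so the appended lines override
# the port/pidfile values already present in the base redis.conf.
make_node_conf() {
  local base=$1 port=$2
  local out="redis-${port}.conf"
  cp "$base" "$out" || return 1
  cat >> "$out" <<EOF

# cluster settings for the node on port ${port}
port ${port}
pidfile /var/run/redis_${port}.pid
cluster-enabled yes
cluster-node-timeout 15000
cluster-require-full-coverage no
cluster-config-file "nodes-${port}.conf"
EOF
}

# Usage, from /usr/local/redis/conf:
#   for p in 6379 6380 6381 6382 6383 6384; do make_node_conf redis.conf "$p"; done
```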

2.1.1.3 Configure PATH

Run vi /root/.bash_profile, append :/usr/local/redis to the end of the PATH= line, then reload the profile:

source /root/.bash_profile

2.1.1.4 启动节点

redis-server /usr/local/redis/conf/redis-6379.conf &

redis-server /usr/local/redis/conf/redis-6380.conf &

redis-server /usr/local/redis/conf/redis-6381.conf &

redis-server /usr/local/redis/conf/redis-6382.conf &

redis-server /usr/local/redis/conf/redis-6383.conf &

redis-server /usr/local/redis/conf/redis-6384.conf &

# View the cluster node information

[root@ocp conf]# redis-cli -p 6380

127.0.0.1:6380> cluster nodes

c8c3517afc1fe09f149c0dbf3e37d080532ea680 :6380 myself,master - 0 0 0 connected

At this point each node only knows about itself.

2.1.2 Node handshake

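Command: cluster meet ip port

Run cluster meet on any node already in the cluster to add a new node. The handshake is propagated through the cluster by gossip messages, so the other nodes discover the new node automatically and start their own handshakes with it.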
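Because the same command is repeated for every node, it is easy to script. A minimal sketch, assuming redis-cli is on PATH; meet_all and the REDIS_CLI override are hypothetical names added for illustration (setting REDIS_CLI=echo turns it into a dry run).

```shell
# REDIS_CLI defaults to redis-cli; override it (e.g. REDIS_CLI=echo) for a dry run.
REDIS_CLI=${REDIS_CLI:-redis-cli}

# meet_all HOST SEED_PORT PORT... (hypothetical helper): ask the seed node to
# CLUSTER MEET every other node. Gossip then spreads each new node to the rest
# of the cluster, so meeting everything through one seed is enough.
meet_all() {
  local host=$1 seed_port=$2
  shift 2
  local port
  for port in "$@"; do
    "$REDIS_CLI" -h "$host" -p "$seed_port" cluster meet "$host" "$port"
  done
}

# Usage:
#   meet_all 127.0.0.1 6379 6380 6381 6382 6383 6384
```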

127.0.0.1:6379> cluster meet 127.0.0.1 6380

127.0.0.1:6379> cluster meet 127.0.0.1 6381

127.0.0.1:6379> cluster meet 127.0.0.1 6382

127.0.0.1:6379> cluster meet 127.0.0.1 6383

127.0.0.1:6379> cluster meet 127.0.0.1 6384

127.0.0.1:6379> cluster nodes

c8c3517afc1fe09f149c0dbf3e37d080532ea680 127.0.0.1:6380 master - 0 1510633772738 2 connected

ff996e111995fbcb7e23ab69a17bad830d9889f5 127.0.0.1:6381 master - 0 1510633775415 0 connected

9b90366e39aeb0bb38b7fe0f506059294aff179e 127.0.0.1:6382 master - 0 1510633776418 5 connected

31973463565c541e7bf3c564000d6a6e53bbd1af 127.0.0.1:6383 master - 0 1510633773791 3 connected

8d6ef8962453b9bd58112893ef2a8f5fb148e2ce 127.0.0.1:6379 myself,master - 0 0 1 connected

c40f88bd4236f06a1437730892f65d0c985a1fcc 127.0.0.1:6384 master - 0 1510633777603 4 connected

The cluster is now in the fail (offline) state, and all reads and writes are rejected.

127.0.0.1:6379> cluster info

cluster_state:fail

cluster_slots_assigned:0

cluster_slots_ok:0

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:0

cluster_current_epoch:5

cluster_my_epoch:1

cluster_stats_messages_sent:634

cluster_stats_messages_received:634

cluster_slots_assigned is 0 at this point. The cluster only goes online after all 16384 slots have been assigned to nodes.
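To check this from a script instead of reading the output by eye, here is a minimal sketch that parses cluster info output. cluster_online is a hypothetical helper; stripping \r assumes redis-cli passes the reply's CRLF line endings through when its output is piped.

```shell
# cluster_online (hypothetical helper): read CLUSTER INFO output on stdin,
# print a one-line summary, and return 0 only when cluster_state is ok.
cluster_online() {
  local info state assigned
  # CLUSTER INFO lines end in \r\n; strip \r so the values compare cleanly.
  info=$(tr -d '\r')
  state=$(printf '%s\n' "$info" | awk -F: '$1 == "cluster_state" { print $2 }')
  assigned=$(printf '%s\n' "$info" | awk -F: '$1 == "cluster_slots_assigned" { print $2 }')
  echo "state=${state} slots_assigned=${assigned}/16384"
  [ "$state" = "ok" ]
}

# Usage:
#   redis-cli -p 6379 cluster info | cluster_online || echo "cluster is not online yet"
```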

2.1.3 Assign slots

Use cluster addslots to assign slots to the three masters (the {start..end} ranges rely on bash brace expansion):

[root@ocp ~]# redis-cli -h 127.0.0.1 -p 6379 cluster addslots {0..5461}

[root@ocp ~]# redis-cli -h 127.0.0.1 -p 6380 cluster addslots {5462..10922}

[root@ocp ~]# redis-cli -h 127.0.0.1 -p 6381 cluster addslots {10923..16383}
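The three ranges above come from splitting the 16384 slots as evenly as possible. A sketch that computes the ranges for any number of masters and feeds them to cluster addslots; slot_ranges, assign_slots and REDIS_CLI are illustrative names, and it assumes seq is available.

```shell
# REDIS_CLI defaults to redis-cli; set REDIS_CLI=echo for a dry run.
REDIS_CLI=${REDIS_CLI:-redis-cli}

# slot_ranges N (hypothetical helper): print "start end" for each of N masters.
# The first (16384 % N) masters get one extra slot, which for N=3 reproduces
# the manual split above: 0-5461, 5462-10922, 10923-16383.
slot_ranges() {
  local n=$1 i=0 start=0 size
  while [ "$i" -lt "$n" ]; do
    size=$(( 16384 / n + (i < 16384 % n ? 1 : 0) ))
    echo "$start $((start + size - 1))"
    start=$((start + size))
    i=$((i + 1))
  done
}

# assign_slots HOST PORT... (hypothetical helper): give the i-th range to the i-th port.
assign_slots() {
  local host=$1
  shift
  slot_ranges "$#" | while read -r start end; do
    # seq lists every slot in the range, like the {0..5461} brace expansion;
    # $(...) is left unquoted on purpose so each slot becomes one argument.
    "$REDIS_CLI" -h "$host" -p "$1" cluster addslots $(seq "$start" "$end") </dev/null
    shift
  done
}

# Usage:
#   assign_slots 127.0.0.1 6379 6380 6381
```

redis-trib.rb (section 2.2) splits slightly differently, 0-5460 / 5461-10922 / 10923-16383; either layout works as long as every slot is assigned exactly once.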

Run cluster info to check the cluster state:

127.0.0.1:6379> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:5

cluster_my_epoch:1

cluster_stats_messages_sent:139

cluster_stats_messages_received:139

Run cluster nodes to see which slots each node owns:

127.0.0.1:6379> cluster nodes

c8c3517afc1fe09f149c0dbf3e37d080532ea680 127.0.0.1:6380 master - 0 1510636810462 2 connected 5462-10922

8d6ef8962453b9bd58112893ef2a8f5fb148e2ce 127.0.0.1:6379 myself,master - 0 0 1 connected 0-5461

31973463565c541e7bf3c564000d6a6e53bbd1af 127.0.0.1:6383 master - 0 1510636809960 3 connected

ff996e111995fbcb7e23ab69a17bad830d9889f5 127.0.0.1:6381 master - 0 1510636811965 0 connected 10923-16383

9b90366e39aeb0bb38b7fe0f506059294aff179e 127.0.0.1:6382 master - 0 1510636808958 5 connected

c40f88bd4236f06a1437730892f65d0c985a1fcc 127.0.0.1:6384 master - 0 1510636810963 4 connected

# View the slot information

127.0.0.1:6379> cluster slots

1) 1) (integer) 5462
   2) (integer) 10922
   3) 1) "127.0.0.1"
      2) (integer) 6380
      3) "c8c3517afc1fe09f149c0dbf3e37d080532ea680"
2) 1) (integer) 0
   2) (integer) 5461
   3) 1) "127.0.0.1"
      2) (integer) 6379
      3) "8d6ef8962453b9bd58112893ef2a8f5fb148e2ce"
3) 1) (integer) 10923
   2) (integer) 16383
   3) 1) "127.0.0.1"
      2) (integer) 6381
      3) "ff996e111995fbcb7e23ab69a17bad830d9889f5"

2.1.4 Assign replicas to the masters

Command: cluster replicate nodeId

Note: it must be run on the replica itself, and nodeId is the ID of the master that the replica should follow.

127.0.0.1:6382> cluster replicate 8d6ef8962453b9bd58112893ef2a8f5fb148e2ce

127.0.0.1:6383> cluster replicate c8c3517afc1fe09f149c0dbf3e37d080532ea680

127.0.0.1:6384> cluster replicate ff996e111995fbcb7e23ab69a17bad830d9889f5

127.0.0.1:6384> cluster nodes

8d6ef8962453b9bd58112893ef2a8f5fb148e2ce 127.0.0.1:6379 master - 0 1510637287240 1 connected 0-5461

c40f88bd4236f06a1437730892f65d0c985a1fcc 127.0.0.1:6384 myself,slave ff996e111995fbcb7e23ab69a17bad830d9889f5 0 0 4 connected

ff996e111995fbcb7e23ab69a17bad830d9889f5 127.0.0.1:6381 master - 0 1510637288242 0 connected 10923-16383

31973463565c541e7bf3c564000d6a6e53bbd1af 127.0.0.1:6383 slave c8c3517afc1fe09f149c0dbf3e37d080532ea680 0 1510637286239 3 connected

c8c3517afc1fe09f149c0dbf3e37d080532ea680 127.0.0.1:6380 master - 0 1510637284735 2 connected 5462-10922

9b90366e39aeb0bb38b7fe0f506059294aff179e 127.0.0.1:6382 slave 8d6ef8962453b9bd58112893ef2a8f5fb148e2ce 0 1510637285236 5 connected

Each replica now carries the slave flag, followed by the ID of its master.
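Copying 40-character node IDs by hand is error-prone. A sketch that looks up a node's ID by port in cluster nodes output; node_id_of is a hypothetical helper, and it assumes the field order shown above (ID first, ip:port second). It also tolerates the ip:port@cport address form that Redis 4.0 and later print.

```shell
# node_id_of PORT (hypothetical helper): read CLUSTER NODES output on stdin and
# print the ID (field 1) of the node whose address (field 2) ends in :PORT.
node_id_of() {
  awk -v port="$1" '{
    addr = $2
    sub(/@.*/, "", addr)              # drop "@cport" (Redis 4.0+) if present
    n = split(addr, parts, ":")
    if (parts[n] == port) { print $1; exit }
  }'
}

# Usage: make 6382 a replica of 6379 without copying the ID by hand
#   master_id=$(redis-cli -p 6379 cluster nodes | node_id_of 6379)
#   redis-cli -p 6382 cluster replicate "$master_id"
```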

2.2 Setting up the cluster with redis-trib.rb

2.2.1 Prepare the Ruby environment

# Install Ruby

wget https://cache.ruby-lang.org/pub/ruby/2.3/ruby-2.3.1.tar.gz

tar xvf ruby-2.3.1.tar.gz

cd ruby-2.3.1

./configure --prefix=/usr/local/ruby

make

make install

cd /usr/local/ruby

cp bin/ruby /usr/local/bin

cp bin/gem /usr/local/bin

# Install the redis RubyGem dependency

wget http://rubygems.org/downloads/redis-3.3.0.gem

gem install -l redis-3.3.0.gem

# Install redis-trib.rb

cp redis-3.2.11/src/redis-trib.rb /usr/local/bin

With the Ruby environment installed, run redis-trib.rb on its own to confirm that everything works:

[root@ocp /]# redis-trib.rb

Usage: redis-trib <command> <options> <arguments ...>

  create          host1:port1 ... hostN:portN
                  --replicas <arg>
  check           host:port
  info            host:port
  fix             host:port
                  --timeout <arg>
  reshard         host:port
                  --from <arg>
                  --to <arg>
                  --slots <arg>
                  --yes
                  --timeout <arg>
                  --pipeline <arg>
  rebalance       host:port
                  --weight <arg>
                  --auto-weights
                  --use-empty-masters
                  --timeout <arg>
                  --simulate
                  --pipeline <arg>
                  --threshold <arg>
  add-node        new_host:new_port existing_host:existing_port
                  --slave
                  --master-id <arg>
  del-node        host:port node_id
  set-timeout     host:port milliseconds
  call            host:port command arg arg .. arg
  import          host:port
                  --from <arg>
                  --copy
                  --replace
  help            (show this help)

For check, fix, reshard, del-node, set-timeout you can specify the host and port of any working node in the cluster.

2.2.2 准备节点

Same as 2.1.1.

2.2.3 Create the cluster

The redis-trib.rb create command performs the node handshake and the slot assignment in one go:

redis-trib.rb create --replicas 1 127.0.0.1:6379 127.0.0.1:6380 127.0.0.1:6381 127.0.0.1:6382 127.0.0.1:6383 127.0.0.1:6384

--replicas sets how many replicas each master gets; here it is 1.

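For testing we use the local address 127.0.0.1. When the nodes run on different IP addresses, redis-trib.rb tries to keep each master and its replicas on different machines, so it may reorder the node list.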

The order of the node list determines the roles: masters come first, followed by replicas.

>>> Creating cluster
>>> Performing hash slots allocation on 6 nodes...
Using 3 masters:
127.0.0.1:6379
127.0.0.1:6380
127.0.0.1:6381
Adding replica 127.0.0.1:6382 to 127.0.0.1:6379
Adding replica 127.0.0.1:6383 to 127.0.0.1:6380
Adding replica 127.0.0.1:6384 to 127.0.0.1:6381
M: 8636e84389a6ce864c5e99afc08e61d5095fc4f4 127.0.0.1:6379
   slots:0-5460 (5461 slots) master
M: c5424585ae85cfcc8074ee2017463a4de08de8ff 127.0.0.1:6380
   slots:5461-10922 (5462 slots) master
M: fc47848325254ee3ea3253ca5947afc553212f54 127.0.0.1:6381
   slots:10923-16383 (5461 slots) master
S: c7a0e63f207885ac2e7261de635bf53703f24f66 127.0.0.1:6382
   replicates 8636e84389a6ce864c5e99afc08e61d5095fc4f4
S: f823b940266c33b6b9e11817887c17a45c6c9183 127.0.0.1:6383
   replicates c5424585ae85cfcc8074ee2017463a4de08de8ff
S: 4ef4f547ae119bd7758670153e54417b45668591 127.0.0.1:6384
   replicates fc47848325254ee3ea3253ca5947afc553212f54
Can I set the above configuration? (type 'yes' to accept): yes

(Type yes manually at this prompt.)

>>> Nodes configuration updated
>>> Assign a different config epoch to each node
9100:M 15 Nov 13:11:16.663 # configEpoch set to 1 via CLUSTER SET-CONFIG-EPOCH
5540:M 15 Nov 13:11:16.664 # configEpoch set to 2 via CLUSTER SET-CONFIG-EPOCH
5541:M 15 Nov 13:11:16.664 # configEpoch set to 3 via CLUSTER SET-CONFIG-EPOCH
5542:M 15 Nov 13:11:16.665 # configEpoch set to 4 via CLUSTER SET-CONFIG-EPOCH
5543:M 15 Nov 13:11:16.665 # configEpoch set to 5 via CLUSTER SET-CONFIG-EPOCH
5544:M 15 Nov 13:11:16.665 # configEpoch set to 6 via CLUSTER SET-CONFIG-EPOCH
>>> Sending CLUSTER MEET messages to join the cluster
9100:M 15 Nov 13:11:16.680 # IP address for this node updated to 127.0.0.1
5542:M 15 Nov 13:11:16.686 # IP address for this node updated to 127.0.0.1
5541:M 15 Nov 13:11:16.687 # IP address for this node updated to 127.0.0.1
5544:M 15 Nov 13:11:16.787 # IP address for this node updated to 127.0.0.1
5540:M 15 Nov 13:11:16.787 # IP address for this node updated to 127.0.0.1
5543:M 15 Nov 13:11:16.787 # IP address for this node updated to 127.0.0.1
Waiting for the cluster to join...
5542:S 15 Nov 13:11:20.685 # Cluster state changed: ok
5543:S 15 Nov 13:11:20.685 # Cluster state changed: ok
5544:S 15 Nov 13:11:20.686 # Cluster state changed: ok
>>> Performing Cluster Check (using node 127.0.0.1:6379)
M: 8636e84389a6ce864c5e99afc08e61d5095fc4f4 127.0.0.1:6379
   slots:0-5460 (5461 slots) master
   1 additional replica(s)
S: 4ef4f547ae119bd7758670153e54417b45668591 127.0.0.1:6384
   slots: (0 slots) slave
   replicates fc47848325254ee3ea3253ca5947afc553212f54
S: f823b940266c33b6b9e11817887c17a45c6c9183 127.0.0.1:6383
   slots: (0 slots) slave
   replicates c5424585ae85cfcc8074ee2017463a4de08de8ff
M: fc47848325254ee3ea3253ca5947afc553212f54 127.0.0.1:6381
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
M: c5424585ae85cfcc8074ee2017463a4de08de8ff 127.0.0.1:6380
   slots:5461-10922 (5462 slots) master
   1 additional replica(s)
S: c7a0e63f207885ac2e7261de635bf53703f24f66 127.0.0.1:6382
   slots: (0 slots) slave
   replicates 8636e84389a6ce864c5e99afc08e61d5095fc4f4
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
……
5541:M 15 Nov 13:11:21.593 # Cluster state changed: ok
5540:M 15 Nov 13:11:21.622 # Cluster state changed: ok
9100:M 15 Nov 13:11:21.650 # Cluster state changed: ok

Finally, just press Enter to get the shell prompt back.
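The role split in the output above follows from --replicas 1 and the node order. A sketch of that arithmetic, assuming all nodes share one IP so redis-trib.rb keeps the given order; plan_roles is a hypothetical helper, not part of redis-trib.rb. The number of masters is taken as nodes / (replicas + 1), and since Redis Cluster needs at least 3 masters, one replica per master means at least 6 nodes. It only shows which addresses become masters or replicas, not which master each replica is attached to.

```shell
# plan_roles REPLICAS ADDR... (hypothetical helper): print "M addr" or "S addr"
# for each node, assuming no reordering by host (all nodes on one IP).
plan_roles() {
  local replicas=$1
  shift
  local total=$# masters i=0 addr
  masters=$(( total / (replicas + 1) ))
  # Redis Cluster needs at least 3 masters, i.e. 3 * (replicas + 1) nodes.
  if [ "$masters" -lt 3 ]; then
    echo "need at least $(( 3 * (replicas + 1) )) nodes for --replicas $replicas, got $total" >&2
    return 1
  fi
  for addr in "$@"; do
    # The first $masters addresses become masters; the rest become replicas.
    if [ "$i" -lt "$masters" ]; then echo "M $addr"; else echo "S $addr"; fi
    i=$((i + 1))
  done
}

# Usage:
#   plan_roles 1 127.0.0.1:6379 127.0.0.1:6380 127.0.0.1:6381 127.0.0.1:6382 127.0.0.1:6383 127.0.0.1:6384
```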

Note that every node passed to redis-trib.rb must be empty, holding no slots and no data; otherwise it refuses to create the cluster.
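A rough pre-check of that condition before running create: the sketch below treats a node as empty when it knows only itself, owns no slots, and INFO keyspace shows no db0 line. node_is_empty is a hypothetical helper and may not match redis-trib.rb's own check exactly.

```shell
# node_is_empty CLUSTER_INFO KEYSPACE_INFO (hypothetical helper): succeed only
# if the node knows no other nodes, owns no slots, and has no keys in db0.
node_is_empty() {
  local info known assigned
  info=$(printf '%s\n' "$1" | tr -d '\r')
  known=$(printf '%s\n' "$info" | awk -F: '$1 == "cluster_known_nodes" { print $2 }')
  assigned=$(printf '%s\n' "$info" | awk -F: '$1 == "cluster_slots_assigned" { print $2 }')
  [ "$known" = "1" ] || return 1
  [ "$assigned" = "0" ] || return 1
  # INFO keyspace prints a "db0:keys=..." line only when db0 holds keys.
  ! printf '%s\n' "$2" | grep -q '^db0:'
}

# Usage:
#   for p in 6379 6380 6381 6382 6383 6384; do
#     node_is_empty "$(redis-cli -p "$p" cluster info)" "$(redis-cli -p "$p" info keyspace)" \
#       || echo "127.0.0.1:$p is not empty"
#   done
```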

2.2.4 Cluster integrity check

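Cluster integrity means that every slot is assigned to a live master; if even one of the 16384 slots is not assigned to a node, the cluster is incomplete. The check command only needs the address of any single node in the cluster to check the whole cluster.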

The command is:

[root@ocp /]# redis-trib.rb check 127.0.0.1:6379

>>> Performing Cluster Check (using node 127.0.0.1:6379)
M: 8636e84389a6ce864c5e99afc08e61d5095fc4f4 127.0.0.1:6379
   slots:0-5460 (5461 slots) master
   1 additional replica(s)
S: 4ef4f547ae119bd7758670153e54417b45668591 127.0.0.1:6384
   slots: (0 slots) slave
   replicates fc47848325254ee3ea3253ca5947afc553212f54
S: f823b940266c33b6b9e11817887c17a45c6c9183 127.0.0.1:6383
   slots: (0 slots) slave
   replicates c5424585ae85cfcc8074ee2017463a4de08de8ff
M: fc47848325254ee3ea3253ca5947afc553212f54 127.0.0.1:6381
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
M: c5424585ae85cfcc8074ee2017463a4de08de8ff 127.0.0.1:6380
   slots:5461-10922 (5462 slots) master
   1 additional replica(s)
S: c7a0e63f207885ac2e7261de635bf53703f24f66 127.0.0.1:6382
   slots: (0 slots) slave
   replicates 8636e84389a6ce864c5e99afc08e61d5095fc4f4
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
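To make "All 16384 slots covered" concrete, here is a sketch that rebuilds that part of the check from cluster nodes output: it collects the slot ranges of every node not flagged fail and verifies that they tile 0-16383 with no gap or overlap. Both function names are hypothetical, and unlike redis-trib.rb check it does not look at open (migrating/importing) slots.

```shell
# ranges_from_nodes (hypothetical helper): turn CLUSTER NODES output (stdin)
# into "start end" lines, skipping nodes whose flags contain "fail".
# Slot fields start at column 9 (id, addr, flags, master, ping, pong, epoch, link).
ranges_from_nodes() {
  awk '$3 !~ /(^|,)fail(,|$)/ {
    for (i = 9; i <= NF; i++) {
      if ($i ~ /^\[/) continue            # skip [slot->-id] / [slot-<-id] markers
      n = split($i, r, "-")
      print r[1], (n == 2 ? r[2] : r[1])  # a lone slot has no "-end" part
    }
  }'
}

# slots_fully_covered (hypothetical helper): read "start end" lines and succeed
# only if they cover 0..16383 with no gap and no overlap.
slots_fully_covered() {
  sort -n -k1,1 | awk '
    BEGIN { next_slot = 0; ok = 1 }
    {
      if ($1 != next_slot) { printf "gap or overlap at slot %d\n", next_slot; ok = 0; exit }
      next_slot = $2 + 1
    }
    END {
      if (ok && next_slot != 16384) { printf "slots %d-16383 are not assigned\n", next_slot; ok = 0 }
      exit !ok
    }'
}

# Usage:
#   redis-cli -p 6379 cluster nodes | ranges_from_nodes | slots_fully_covered && echo "all slots covered"
```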

-- This article is based on the book 《Redis开发与运维》 (Redis Development and Operations), with some modifications.
