Oracle 11gR2 (11.2.0.4.0): Analysis of the root.sh Script Run During RAC Setup

2017-10-12 18:31


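When setting up RAC on Oracle 11g, the last step is usually to run two scripts. The second one is the more important of the two and is also where errors most often occur.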

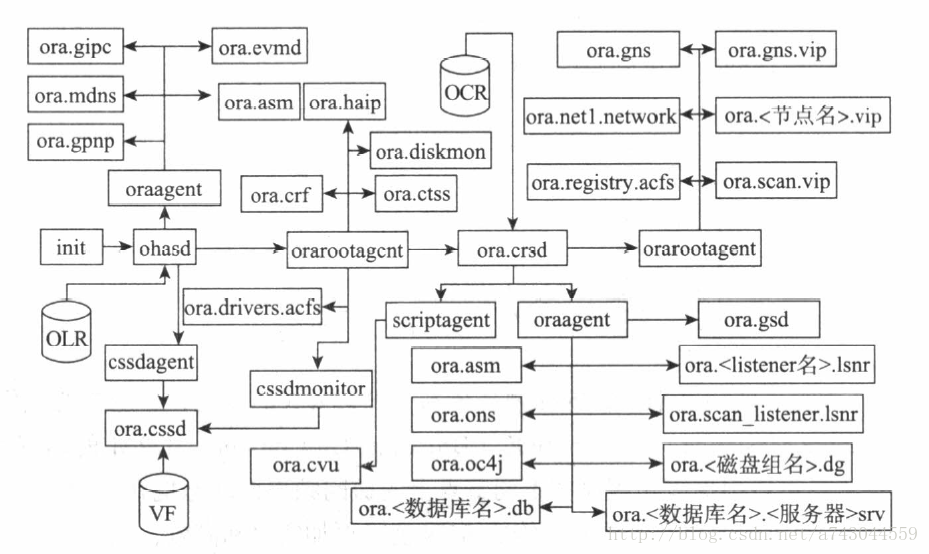

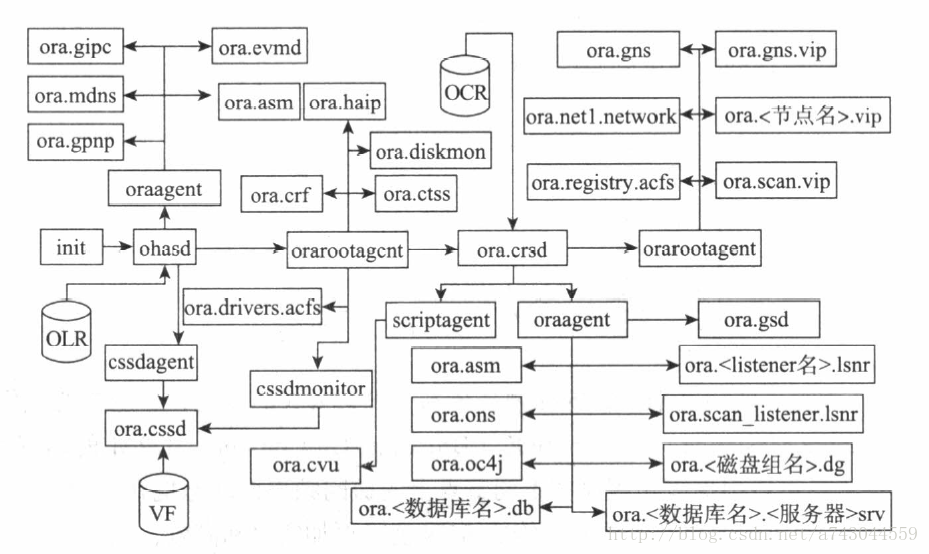

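Based on the walkthrough in this article, root.sh performs its work in the following stages.

Stage 1: Complete some miscellaneous tasks before the cluster is configured.

Stage 2: Perform the initial setup of the node.

Stage 3: Initialize the OLR.

Stage 4: Initialize the gpnp wallet and the gpnp profile.

Stage 5: Configure the init.ohasd script and start ohasd.

Stage 6: Add the initial resources to the cluster.

Stage 7: Start CSS in exclusive mode and format the voting files. On every node other than the first one to run root.sh, CSS is started in normal mode instead.

Stage 8: Start the cluster in normal mode.

Stage 9: Add the CRS-related resources to the cluster.

Stage 10: Restart the cluster.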

First, get familiar with the components of an Oracle 11gR2 cluster. The two scripts are:

/u01/app/oraInventory/orainstRoot.sh

/u01/app/11.2.0/grid/root.sh

Start with the contents of root.sh:

    [root@node2 app]# more /u01/app/11.2.0/grid/root.sh
    #!/bin/sh
    . /u01/app/11.2.0/grid/install/utl/rootmacro.sh "$@"
    . /u01/app/11.2.0/grid/install/utl/rootinstall.sh
    #
    # Root Actions related to network
    #
    /u01/app/11.2.0/grid/network/install/sqlnet/setowner.sh
    #
    # Invoke standalone rootadd_rdbms.sh
    #
    /u01/app/11.2.0/grid/rdbms/install/rootadd_rdbms.sh
    /u01/app/11.2.0/grid/rdbms/install/rootadd_filemap.sh
    /u01/app/11.2.0/grid/crs/config/rootconfig.sh
    EXITCODE=$?
    if [ $EXITCODE -ne 0 ]; then
        exit $EXITCODE
    fi

/u01/app/11.2.0/grid/install/utl/rootmacro.sh performs some validation related to the GI home.

/u01/app/11.2.0/grid/install/utl/rootinstall.sh creates some local files.

/u01/app/11.2.0/grid/network/install/sqlnet/setowner.sh creates GI-related temporary files.

/u01/app/11.2.0/grid/rdbms/install/rootadd_rdbms.sh verifies the permissions of some files.

/u01/app/11.2.0/grid/rdbms/install/rootadd_filemap.sh verifies the permissions of some files.

These scripts are all simple, and none of them contains any step that configures or initializes the cluster.

The core of root.sh is the /u01/app/11.2.0/grid/crs/config/rootconfig.sh script. Its job is to initialize and configure the cluster by calling <gi_home>/crs/install/rootcrs.pl. The rootconfig.sh script is shown below:

    [root@node2 app]# more /u01/app/11.2.0/grid/crs/config/rootconfig.sh
    #!/bin/sh
    ORACLE_HOME=/u01/app/11.2.0/grid
    ROOTSCRIPT_ARGS=""
    #if upgrade/force/verbose command line args are passed, set ROOTSCRIPT_ARGS accordingly.
    for var in "$@"
    do
      if [ "$var" = "-upgrade" ]; then
        ROOTSCRIPT_ARGS=$ROOTSCRIPT_ARGS"$var "
      elif [ "$var" = "-force" ]; then
        ROOTSCRIPT_ARGS=$ROOTSCRIPT_ARGS"$var "
      elif [ "$var" = "-verbose" ]; then
        ROOTSCRIPT_ARGS=$ROOTSCRIPT_ARGS"$var "
      fi
    done
    . $ORACLE_HOME/install/utl/rootmacro.sh
    SU=/bin/su
    SW_ONLY=false
    ADDNODE=false
    GI_WIZARD=false
    HA_CONFIG=false
    RAC9I_PRESENT=false
    CMDLLROOTSH_CMD=$ORACLE_HOME/crs/install/cmdllroot.sh
    CONFIGSH_CMD="$ORACLE_HOME/crs/config/config.sh"
    ROOTHASPL="$ORACLE_HOME/perl/bin/perl -I$ORACLE_HOME/perl/lib -I$ORACLE_HOME/crs/install $ORACLE_HOME/crs/install/roothas.pl"
    ROOTCRSPL="$ORACLE_HOME/perl/bin/perl -I$ORACLE_HOME/perl/lib -I$ORACLE_HOME/crs/install $ORACLE_HOME/crs/install/rootcrs.pl"
    ROOTSCRIPT=""
    ISLINUX=false
    EXITCODE=0
    # For addnode cases
    CRSCONFIG_ADDPARAMS=$ORACLE_HOME/crs/install/crsconfig_addparams
    # If addparams file exists
    if [ -f $CRSCONFIG_ADDPARAMS ]; then
      # get the value of LAST[tail -1] occurrence of CRS_ADDNODE=<value> in the file
      CRS_ADDNODE=`$AWK -F"=" '{if ($1=="CRS_ADDNODE") print $2}' $CRSCONFIG_ADDPARAMS | tail -1`
      # If value for CRS_ADDNODE is found
      if [ "$CRS_ADDNODE" = "true" ]; then
        # set ADDNODE to true
        ADDNODE=true
      fi
    fi
    # if addnode then reset SW_ONLY and HA_CONFIG variables to false
    if [ "$ADDNODE" = "true" ]; then
      SW_ONLY=false
      HA_CONFIG=false
    fi
    if [ `$UNAME` = "Linux" ]; then
      ISLINUX=true
    fi
    if [ "$SW_ONLY" = "true" ]; then
      $ECHO
      $ECHO "To configure Grid Infrastructure for a Stand-Alone Server run the following command as the root user:"
      $ECHO "$ROOTHASPL"
      $ECHO
      $ECHO
      $ECHO "To configure Grid Infrastructure for a Cluster execute the following command:"
      $ECHO "$CONFIGSH_CMD"
      $ECHO "This command launches the Grid Infrastructure Configuration Wizard. The wizard also supports silent operation, and the parameters can be passed through the response file that is available in the installation media."
      $ECHO
    else
      if [ "$GI_WIZARD" = "true" ]; then
        $ECHO "Relinking oracle with rac_on option"
        $SU $ORACLE_OWNER -c "$ECHO \"rootconfig.sh: Relinking oracle with rac_on option\" ; $ORACLE_HOME/crs/config/relink_rac_on $ORACLE_HOME $MAKE" >> $ORACLE_HOME/install/make.log 2>&1
        EXITCODE=$?
        if [ $EXITCODE -ne 0 ]; then
          $ECHO "Relinking rac_on failed"
          exit $EXITCODE
        fi
      fi
      if [ "$ISLINUX" = "true" -a "$RAC9I_PRESENT" = "true" ]; then
        $CMDLLROOTSH_CMD
      fi
      if [ "$HA_CONFIG" = "true" ]; then
        ROOTSCRIPT=$ROOTHASPL
      else
        ROOTSCRIPT=$ROOTCRSPL
      fi
      #Passing the ROOTSCRIPT_ARGS constructed to rootscripts.
      $ROOTSCRIPT $ROOTSCRIPT_ARGS
      EXITCODE=$?
      if [ $EXITCODE -ne 0 ]; then
        $ECHO "$ROOTSCRIPT execution failed"
        exit $EXITCODE
      fi
    fi
    exit 0

The log file of the rootcrs.pl script is /u01/app/11.2.0/grid/cfgtoollogs/crsconfig/rootcrs_node2.log.
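To see in isolation what the argument loop at the top of rootconfig.sh does, here is a minimal sketch. The function name filter_root_args is made up for illustration and is not part of Oracle's scripts; the only thing taken from the listing is that -upgrade, -force and -verbose are passed through and everything else is dropped.

```bash
#!/usr/bin/env bash
# Sketch of the flag whitelist at the top of rootconfig.sh.
# filter_root_args is a hypothetical helper name used only for this example.
filter_root_args() {
    local args=""
    local var
    for var in "$@"; do
        case "$var" in
            -upgrade|-force|-verbose)
                # Same concatenation as the listing: the flag followed by one space.
                args="${args}${var} "
                ;;
            *)
                # Any other argument is silently ignored, as in rootconfig.sh.
                ;;
        esac
    done
    printf '%s' "$args"
}

# Brackets make the trailing space visible.
echo "[$(filter_root_args -verbose -debug -force)]"
```

The resulting string is what rootconfig.sh hands to rootcrs.pl (or roothas.pl) as $ROOTSCRIPT_ARGS.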

Before configuring anything, rootcrs.pl first reads the configuration files to obtain basic information about the cluster:

    2017-10-12 13:01:10: The configuration parameter file /u01/app/11.2.0/grid/crs/install/crsconfig_params is valid
    2017-10-12 13:01:10: ### Printing the configuration values from files:
    2017-10-12 13:01:10: /u01/app/11.2.0/grid/crs/install/crsconfig_params
    2017-10-12 13:01:10: /u01/app/11.2.0/grid/crs/install/s_crsconfig_defs
    2017-10-12 13:01:10: ASM_AU_SIZE=1
    2017-10-12 13:01:10: ASM_DISCOVERY_STRING=/dev/asm-*
    2017-10-12 13:01:10: ASM_DISKS=/dev/asm-disk1
    2017-10-12 13:01:10: ASM_DISK_GROUP=DATA

These configuration values were written into the files by OUI during installation.
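As an illustration of how such KEY=VALUE parameters can be looked up, the sketch below follows the same idea rootconfig.sh uses for CRS_ADDNODE: scan for the key and keep the last match. The helper name get_param and the inline sample are assumptions for this example. The four ASM values are the ones printed in the log; the two CRS_ADDNODE lines are hypothetical and exist only to show that the last occurrence wins.

```bash
#!/usr/bin/env bash
# get_param KEY: read KEY=VALUE lines on stdin and print the value of the
# LAST line whose key matches. Hypothetical helper, not an Oracle utility.
get_param() {
    awk -F'=' -v key="$1" '
        $1 == key { val = substr($0, length(key) + 2); found = 1 }
        END       { if (found) print val }
    '
}

# Illustrative sample only; the real crsconfig_params has many more entries.
sample_params='ASM_AU_SIZE=1
ASM_DISCOVERY_STRING=/dev/asm-*
ASM_DISKS=/dev/asm-disk1
ASM_DISK_GROUP=DATA
CRS_ADDNODE=false
CRS_ADDNODE=true'

printf '%s\n' "$sample_params" | get_param ASM_DISK_GROUP
printf '%s\n' "$sample_params" | get_param CRS_ADDNODE
```

Using substr on the whole line rather than $2 keeps values that themselves contain an equals sign intact.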

Once the configuration files have been read, the script collects the permissions of the parent directories and saves the ACL information:

    2017-10-12 13:01:10: ### Printing of configuration values complete ###
    2017-10-12 13:01:10: USER_IGNORED_PREREQ is set to 1
    2017-10-12 13:01:10: User ignored Prerequisites during installation
    2017-10-12 13:01:10: saving current owner/permisssios of parent dir of /u01/app/11.2.0
    2017-10-12 13:01:10: saving parent dir ACLs in /u01/app/11.2.0/grid/crs/install/ParentDirPerm_node2.txt
    2017-10-12 13:01:10: Getting file permissions for /u01/app/11.2.0
    2017-10-12 13:01:10: Getting file permissions for /u01/app
    2017-10-12 13:01:10: Getting file permissions for /u01
    2017-10-12 13:01:10: writing /u01/app/11.2.0:grid:oinstall:0775 to /u01/app/11.2.0/grid/crs/install/ParentDirPerm_node2.txt
    2017-10-12 13:01:10: writing /u01/app:grid:oinstall:0775 to /u01/app/11.2.0/grid/crs/install/ParentDirPerm_node2.txt
    2017-10-12 13:01:10: writing /u01:grid:oinstall:0775 to /u01/app/11.2.0/grid/crs/install/ParentDirPerm_node2.txt
    2017-10-12 13:01:10: Installing Trace File Analyzer

View the ACL information in ParentDirPerm_node2.txt:

    [root@node2 ~]# more /u01/app/11.2.0/grid/crs/install/ParentDirPerm_node2.txt
    /u01/app/11.2.0:grid:oinstall:0775
    /u01/app:grid:oinstall:0775
    /u01:grid:oinstall:0775
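Each line of this file has the form path:owner:group:mode. A minimal sketch of turning those records back into chown and chmod commands follows. It only prints the commands and changes nothing; the helper name restore_cmds is hypothetical, and whether Oracle's own tooling restores the permissions this way is an assumption.

```bash
#!/usr/bin/env bash
# restore_cmds: read path:owner:group:mode records on stdin and print the
# chown/chmod commands that would reapply them. Prints only, runs nothing.
# Hypothetical helper for illustration.
restore_cmds() {
    local path owner group mode
    while IFS=: read -r path owner group mode; do
        [ -n "$path" ] || continue          # skip empty lines
        printf 'chown %s:%s %s\n' "$owner" "$group" "$path"
        printf 'chmod %s %s\n' "$mode" "$path"
    done
}

# The three records shown in ParentDirPerm_node2.txt.
saved_perms='/u01/app/11.2.0:grid:oinstall:0775
/u01/app:grid:oinstall:0775
/u01:grid:oinstall:0775'

printf '%s\n' "$saved_perms" | restore_cmds
```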

Install TFA (Oracle Trace File Analyzer):

    >
    > Using JAVA_HOME : /u01/app/11.2.0/grid/jdk/jre
    >
    > Running Auto Setup for TFA as user root...
    >
    >
    > The following installation requires temporary use of SSH.
    > If SSH is not configured already then we will remove SSH
    > when complete.
    >
    > Installing TFA now...
    >
    >
    > TFA Will be Installed on node2...
    >
    > TFA will scan the following Directories
    > ++++++++++++++++++++++++++++++++++++++++++++
    >
    > .----------------------------------------------------.
    > |                       node2                        |
    > +-----------------------------------------+----------+
    > | Trace Directory                         | Resource |
    > +-----------------------------------------+----------+
    > | /u01/app/11.2.0/grid/OPatch/crs/log     | CRS      |
    > | /u01/app/11.2.0/grid/cfgtoollogs        | INSTALL  |
    > | /u01/app/11.2.0/grid/crs/log            | CRS      |
    > | /u01/app/11.2.0/grid/cv/log             | CRS      |
    > | /u01/app/11.2.0/grid/evm/admin/log      | CRS      |
    > | /u01/app/11.2.0/grid/evm/admin/logger   | CRS      |
    > | /u01/app/11.2.0/grid/evm/log            | CRS      |
    > | /u01/app/11.2.0/grid/install            | INSTALL  |
    > | /u01/app/11.2.0/grid/log                | CRS      |
    > | /u01/app/11.2.0/grid/log/               | CRS      |
    > | /u01/app/11.2.0/grid/network/log        | CRS      |
    > | /u01/app/11.2.0/grid/oc4j/j2ee/home/log | CRSOC4J  |
    > | /u01/app/11.2.0/grid/opmn/logs          | CRS      |
    > | /u01/app/11.2.0/grid/racg/log           | CRS      |
    > | /u01/app/11.2.0/grid/rdbms/log          | ASM      |
    > | /u01/app/11.2.0/grid/scheduler/log      | CRS      |
    > | /u01/app/11.2.0/grid/srvm/log           | CRS      |
    > | /u01/app/oraInventory/ContentsXML       | INSTALL  |
    > | /u01/app/oraInventory/logs              | INSTALL  |
    > '-----------------------------------------+----------'
    >
    >
    > Installing TFA on node2
    > HOST: node2 TFA_HOME: /u01/app/11.2.0/grid/tfa/node2/tfa_home
    >
    > .------------------------------------------------.
    > | Host  | Status of TFA | PID   | Port | Version |
    > +-------+---------------+-------+------+---------+
    > | node2 | RUNNING       | 21382 | 5000 | 2.5.1.5 |
    > | node1 | RUNNING       | 21110 | 5000 | 2.5.1.5 |
    > '-------+---------------+-------+------+---------'
    >
    > Summary of TFA Installation:
    > .---------------------------------------------------------------.
    > |                             node2                             |
    > +---------------------+-----------------------------------------+
    > | Parameter           | Value                                   |
    > +---------------------+-----------------------------------------+
    > | Install location    | /u01/app/11.2.0/grid/tfa/node2/tfa_home |
    > | Repository location | /u01/app/grid/tfa/repository            |
    > | Repository usage    | 0 MB out of 10240 MB                    |
    > '---------------------+-----------------------------------------'
    >
    >
    > TFA is successfully installed..
    >
    > Usage : /u01/app/11.2.0/grid/tfa/bin/tfactl <command> [options]
    >    <command> =
    >          print        Print requested details
    >          purge        Delete collections from TFA repository
    >          directory    Add or Remove or Modify directory in TFA
    >          host         Add or Remove host in TFA
    >          set          Turn ON/OFF or Modify various TFA features
    >          diagcollect  Collect logs from across nodes in cluster
    >
    > For help with a command: /u01/app/11.2.0/grid/tfa/bin/tfactl <command> -help
    >
    >
    >End Command output
    [root@node2 ~]# /u01/app/11.2.0/grid/tfa/bin/tfactl -help
    Usage : /u01/app/11.2.0/grid/tfa/bin/tfactl <command> [options]
       <command> =
             print        Print requested details
             purge        Delete collections from TFA repository
             directory    Add or Remove or Modify directory in TFA
             host         Add or Remove host in TFA
             set          Turn ON/OFF or Modify various TFA features
             diagcollect  Collect logs from across nodes in cluster
    For help with a command: /u01/app/11.2.0/grid/tfa/bin/tfactl <command> -help

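Create the checkpoint file:

Starting with 11.2.0.2, root.sh produces a checkpoint file as it runs. As each stage executes, root.sh records that stage's status in the checkpoint file, so that the next run of root.sh knows which stage the previous run reached and what state that stage was in, and can decide where to resume. This is why Oracle has stated since 11.2.0.2 that root.sh can be rerun. After a root.sh failure, users no longer have to remove the previous configuration completely before running root.sh again, as earlier releases required, which greatly shortens GI deployment time.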

    2017-10-12 13:01:41: checkpoint ROOTCRS_STACK does not exist
    2017-10-12 13:01:41: Oracle clusterware configuration has started
    2017-10-12 13:01:41: Running as user grid: /u01/app/11.2.0/grid/bin/cluutil -ckpt -oraclebase /u01/app/grid -writeckpt -name ROOTCRS_STACK -state START
    2017-10-12 13:01:41: s_run_as_user2: Running /bin/su grid -c ' /u01/app/11.2.0/grid/bin/cluutil -ckpt -oraclebase /u01/app/grid -writeckpt -name ROOTCRS_STACK -state START '
    2017-10-12 13:01:41: Removing file /tmp/filelDvsCK
    2017-10-12 13:01:41: Successfully removed file: /tmp/filelDvsCK
    2017-10-12 13:01:41: /bin/su successfully executed
    2017-10-12 13:01:41: Succeeded in writing the checkpoint:'ROOTCRS_STACK' with status:START
    2017-10-12 13:01:41: CkptFile: /u01/app/grid/Clusterware/ckptGridHA_node2.xml
    2017-10-12 13:01:42: Succeeded to add (property/value):('VERSION/'11.2.0.4.0') for checkpoint:ROOTCRS_STACK

View the checkpoint file:

    [root@node2 ~]# more /u01/app/grid/Clusterware/ckptGridHA_node2.xml
    <?xml version="1.0" standalone="yes" ?>
    <!-- Copyright (c) 1999, 2013, Oracle and/or its affiliates. All rights reserved. -->
    <!-- Do not modify the contents of this file by hand. -->
    <CHECKPOINTS>
       <CHECKPOINT LEVEL="MAJOR" NAME="ROOTCRS_STACK" DESC="ROOTCRS_STACK" STATE="SUCCESS">
          <PROPERTY_LIST>
             <PROPERTY NAME="VERSION" TYPE="STRING" VAL="11.2.0.4.0"/>
          </PROPERTY_LIST>
       </CHECKPOINT>
       <CHECKPOINT LEVEL="MAJOR" NAME="ROOTCRS_PARAM" DESC="ROOTCRS_PARAM" STATE="SUCCESS">
          <PROPERTY_LIST>
             <PROPERTY NAME="NODE_NAME_LIST" TYPE="STRING" VAL="node1,node2"/>
             <PROPERTY NAME="REUSEDG" TYPE="STRING" VAL="false"/>
             <PROPERTY NAME="ASM_DISKS" TYPE="STRING" VAL="/dev/asm-disk1"/>
             <PROPERTY NAME="ASM_AU_SIZE" TYPE="NUMBER" VAL="1"/>
             <PROPERTY NAME="ISROLLING" TYPE="STRING" VAL="true "/>
             <PROPERTY NAME="GNS_CONF" TYPE="STRING" VAL="false"/>
             <PROPERTY NAME="ORACLE_BASE" TYPE="STRING" VAL="/u01/app/grid"/>
             <PROPERTY NAME="VOTING_DISKS" TYPE="STRING" VAL="NO_VAL"/>
             <PROPERTY NAME="OCR_LOCATIONS" TYPE="STRING" VAL="NO_VAL"/>
             <PROPERTY NAME="JLIBDIR" TYPE="STRING" VAL="/u01/app/11.2.0/grid/jlib"/>
             <PROPERTY NAME="ORA_ASM_GROUP" TYPE="STRING" VAL="asmadmin"/>
             <PROPERTY NAME="ASM_DISK_GROUP" TYPE="STRING" VAL="DATA"/>
             <PROPERTY NAME="CRS_NODEVIPS" TYPE="STRING" VAL="'node1-vip/255.255.255.0/eth0,node2-vip/255.255.255.0/eth0'"/>
             <PROPERTY NAME="NEW_NODEVIPS" TYPE="STRING" VAL="'node1-vip/255.255.255.0/eth0,node2-vip/255.255.255.0/eth0'"/>
             <PROPERTY NAME="ORACLE_HOME" TYPE="STRING" VAL="/u01/app/11.2.0/grid"/>
             <PROPERTY NAME="GPNPCONFIGDIR" TYPE="STRING" VAL="$ORACLE_HOME"/>
             <PROPERTY NAME="SILENT" TYPE="STRING" VAL="false"/>
             <PROPERTY NAME="CRFHOME" TYPE="STRING" VAL="&quot;/u01/app/11.2.0/grid&quot;"/>
             <PROPERTY NAME="ASM_DISCOVERY_STRING" TYPE="STRING" VAL="/dev/asm-*"/>
             <PROPERTY NAME="HOST_NAME_LIST" TYPE="STRING" VAL="node1,node2"/>
             <PROPERTY NAME="LANGUAGE_ID" TYPE="STRING" VAL="AMERICAN_AMERICA.WE8ISO8859P1"/>
             <PROPERTY NAME="CSS_LEASEDURATION" TYPE="NUMBER" VAL="400"/>
             <PROPERTY NAME="ORA_DBA_GROUP" TYPE="STRING" VAL="oinstall"/>
             <PROPERTY NAME="NETWORKS" TYPE="STRING" VAL="&quot;eth0&quot;/10.37.2.0:public,&quot;eth1&quot;/192.168.52.0:cluster_interconnect"/>
             <PROPERTY NAME="GPNPGCONFIGDIR" TYPE="STRING" VAL="$ORACLE_HOME"/>
             <PROPERTY NAME="SCAN_PORT" TYPE="NUMBER" VAL="1521"/>
             <PROPERTY NAME="TZ" TYPE="STRING" VAL="PRC"/>
             <PROPERTY NAME="ASM_UPGRADE" TYPE="STRING" VAL="false"/>
             <PROPERTY NAME="SCAN_NAME" TYPE="STRING" VAL="rac-scan"/>
             <PROPERTY NAME="NODELIST" TYPE="STRING" VAL="node1,node2"/>
             <PROPERTY NAME="VNDR_CLUSTER" TYPE="STRING" VAL="false"/>
             <PROPERTY NAME="CRS_STORAGE_OPTION" TYPE="NUMBER" VAL="1"/>
             <PROPERTY NAME="USER_IGNORED_PREREQ" TYPE="STRING" VAL="true"/>
             <PROPERTY NAME="JREDIR" TYPE="STRING" VAL="/u01/app/11.2.0/grid/jdk/jre/"/>
             <PROPERTY NAME="CLUSTER_NAME" TYPE="STRING" VAL="node-cluster"/>
             <PROPERTY NAME="ORACLE_OWNER" TYPE="STRING" VAL="grid"/>
             <PROPERTY NAME="ASM_REDUNDANCY" TYPE="STRING" VAL="EXTERNAL "/>
          </PROPERTY_LIST>
       </CHECKPOINT>
       <CHECKPOINT LEVEL="MAJOR" NAME="ROOTCRS_OSDSETUP" DESC="ROOTCRS_OSDSETUP" STATE="SUCCESS"/>
       <CHECKPOINT LEVEL="MAJOR" NAME="ROOTCRS_ONETIME" DESC="ROOTCRS_ONETIME" STATE="SUCCESS"/>
       <CHECKPOINT LEVEL="MAJOR" NAME="ROOTCRS_OLR" DESC="ROOTCRS_OLR" STATE="SUCCESS"/>
       <CHECKPOINT LEVEL="MAJOR" NAME="ROOTCRS_GPNPSETUP" DESC="ROOTCRS_GPNPSETUP" STATE="SUCCESS"/>
       <CHECKPOINT LEVEL="MAJOR" NAME="ROOTCRS_OHASD" DESC="ROOTCRS_OHASD" STATE="SUCCESS"/>
       <CHECKPOINT LEVEL="MAJOR" NAME="ROOTCRS_INITRES" DESC="ROOTCRS_INITRES" STATE="SUCCESS"/>
       <CHECKPOINT LEVEL="MAJOR" NAME="ROOTCRS_ACFSINST" DESC="ROOTCRS_ACFSINST" STATE="SUCCESS"/>
       <CHECKPOINT LEVEL="MAJOR" NAME="ROOTCRS_BOOTCFG" DESC="ROOTCRS_BOOTCFG" STATE="SUCCESS"/>
       <CHECKPOINT LEVEL="MAJOR" NAME="ROOTCRS_NODECONFIG" DESC="ROOTCRS_NODECONFIG" STATE="SUCCESS"/>
    </CHECKPOINTS>
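When root.sh has failed part-way, it helps to see at a glance which checkpoint was reached. The sketch below lists each checkpoint with its state and reports the first one that is not SUCCESS. It is only an illustration of reading the file: the real resume logic lives in rootcrs.pl and cluutil, the helper names are made up, the sed pattern assumes one CHECKPOINT tag per line, and the sample deliberately shows a hypothetical interrupted run rather than the finished file above.

```bash
#!/usr/bin/env bash
# list_checkpoints: print "NAME STATE" for every <CHECKPOINT ...> tag on stdin.
# Assumes each CHECKPOINT tag sits on a single line. Hypothetical helper.
list_checkpoints() {
    sed -n 's/.*<CHECKPOINT [^>]*NAME="\([^"]*\)"[^>]*STATE="\([^"]*\)".*/\1 \2/p'
}

# first_incomplete: print the name of the first checkpoint whose state is
# not SUCCESS, or nothing if every checkpoint succeeded.
first_incomplete() {
    list_checkpoints | awk '$2 != "SUCCESS" { print $1; exit }'
}

# Hypothetical sample of a run that stopped while configuring OHASD.
sample_ckpt='<CHECKPOINTS>
   <CHECKPOINT LEVEL="MAJOR" NAME="ROOTCRS_OLR" DESC="ROOTCRS_OLR" STATE="SUCCESS"/>
   <CHECKPOINT LEVEL="MAJOR" NAME="ROOTCRS_GPNPSETUP" DESC="ROOTCRS_GPNPSETUP" STATE="SUCCESS"/>
   <CHECKPOINT LEVEL="MAJOR" NAME="ROOTCRS_OHASD" DESC="ROOTCRS_OHASD" STATE="START"/>
</CHECKPOINTS>'

printf '%s\n' "$sample_ckpt" | list_checkpoints
echo "first incomplete: $(printf '%s\n' "$sample_ckpt" | first_incomplete)"
```

Against a real system the same functions could be fed the file directly, for example `first_incomplete < /u01/app/grid/Clusterware/ckptGridHA_node2.xml`. The file itself should still not be edited by hand, as its header says.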

Configure OCR information

    2017-10-12 13:01:49: OCR locations = +DATA
    2017-10-12 13:01:49: Validating OCR
    2017-10-12 13:01:49: Retrieving OCR location used by previous installations
    2017-10-12 13:01:49: Opening file /etc/oracle/ocr.loc
    2017-10-12 13:01:49: Value () is set for key=ocrconfig_loc
    2017-10-12 13:01:49: Opening file /etc/oracle/ocr.loc
    2017-10-12 13:01:49: Value () is set for key=ocrmirrorconfig_loc
    2017-10-12 13:01:49: Opening file /etc/oracle/ocr.loc
    2017-10-12 13:01:49: Value () is set for key=ocrconfig_loc3
    2017-10-12 13:01:49: Opening file /etc/oracle/ocr.loc
    2017-10-12 13:01:49: Value () is set for key=ocrconfig_loc4
    2017-10-12 13:01:49: Opening file /etc/oracle/ocr.loc
    2017-10-12 13:01:49: Value () is set for key=ocrconfig_loc5
    2017-10-12 13:01:49: Checking if OCR sync file exists
    2017-10-12 13:01:49: No need to sync OCR file
    2017-10-12 13:01:49: OCR_LOCATION=+DATA
    2017-10-12 13:01:49: OCR_MIRROR_LOCATION=
    2017-10-12 13:01:49: OCR_MIRROR_LOC3=
    2017-10-12 13:01:49: OCR_MIRROR_LOC4=
    2017-10-12 13:01:49: OCR_MIRROR_LOC5=
    2017-10-12 13:01:49: Current OCR location=
    2017-10-12 13:01:49: Current OCR mirror location=
    2017-10-12 13:01:49: Current OCR mirror loc3=
    2017-10-12 13:01:49: Current OCR mirror loc4=
    2017-10-12 13:01:49: Current OCR mirror loc5=
    2017-10-12 13:01:49: Verifying current OCR settings with user entered values
    2017-10-12 13:01:49: Setting OCR locations in /etc/oracle/ocr.loc
    2017-10-12 13:01:49: Validating OCR locations in /etc/oracle/ocr.loc
    2017-10-12 13:01:49: Checking for existence of /etc/oracle/ocr.loc
    2017-10-12 13:01:49: Backing up /etc/oracle/ocr.loc to /etc/oracle/ocr.loc.orig
    2017-10-12 13:01:49: Setting ocr location +DATA
    2017-10-12 13:01:49: Creating or upgrading Oracle Local Registry (OLR)
    2017-10-12 13:01:49: Executing /u01/app/11.2.0/grid/bin/ocrconfig -local -upgrade grid oinstall
    2017-10-12 13:01:49: Executing cmd: /u01/app/11.2.0/grid/bin/ocrconfig -local -upgrade grid oinstall
    2017-10-12 13:01:50: OLR successfully created or upgraded
    2017-10-12 13:01:50: /u01/app/11.2.0/grid/bin/clscfg -localadd
    2017-10-12 13:01:50: Executing cmd: /u01/app/11.2.0/grid/bin/clscfg -localadd
    2017-10-12 13:01:50: Command output:
    > LOCAL ADD MODE
    > Creating OCR keys for user 'root', privgrp 'root'..
    > Operation successful.
    >End Command output

Configure gpnp

    Oracle CRS stack is not configured yet
    2017-10-12 13:01:47: ---Checking local gpnp setup...
    2017-10-12 13:01:47: The setup file "/u01/app/11.2.0/grid/gpnp/node2/profiles/peer/profile.xml" does not exist
    2017-10-12 13:01:47: The setup file "/u01/app/11.2.0/grid/gpnp/node2/wallets/peer/cwallet.sso" does not exist
    2017-10-12 13:01:47: The setup file "/u01/app/11.2.0/grid/gpnp/node2/wallets/prdr/cwallet.sso" does not exist
    2017-10-12 13:01:47: chk gpnphome /u01/app/11.2.0/grid/gpnp/node2: profile_ok 0 wallet_ok 0 r/o_wallet_ok 0
    2017-10-12 13:01:47: chk gpnphome /u01/app/11.2.0/grid/gpnp/node2: INVALID (bad profile/wallet)
    2017-10-12 13:01:47: ---Checking cluster-wide gpnp setup...
    2017-10-12 13:01:47: chk gpnphome /u01/app/11.2.0/grid/gpnp: profile_ok 1 wallet_ok 1 r/o_wallet_ok 1

Configure the CSS service

    Validating for SI-CSS configuration
    2017-10-12 13:01:49: Retrieving OCR main disk location
    2017-10-12 13:01:49: Opening file /etc/oracle/ocr.loc
    2017-10-12 13:01:49: Value () is set for key=ocrconfig_loc
    2017-10-12 13:01:49: Unable to retrieve ocr disk info
    2017-10-12 13:01:49: Checking to see if any 9i GSD is up
    2017-10-12 13:01:49: libskgxnBase_lib = /etc/ORCLcluster/oracm/lib/libskgxn2.so
    2017-10-12 13:01:49: libskgxn_lib = /opt/ORCLcluster/lib/libskgxn2.so
    2017-10-12 13:01:49: SKGXN library file does not exists
    2017-10-12 13:01:49: OLR location = /u01/app/11.2.0/grid/cdata/node2.olr
    2017-10-12 13:01:49: Oracle CRS Home = /u01/app/11.2.0/grid
    2017-10-12 13:01:49: Validating /etc/oracle/olr.loc file for OLR location /u01/app/11.2.0/grid/cdata/node2.olr
    2017-10-12 13:01:49: /etc/oracle/olr.loc already exists. Backing up /etc/oracle/olr.loc to /etc/oracle/olr.loc.orig
    2017-10-12 13:01:49: Done setting permissions on file /etc/oracle/olr.loc
    2017-10-12 13:01:49: Oracle CRS home = /u01/app/11.2.0/grid
    2017-10-12 13:01:49: Oracle cluster name = node-cluster
    2017-10-12 13:01:49: OCR locations = +DATA

Configure the OHASD service

    checkpoint ROOTCRS_OHASD does not exist
    2017-10-12 13:01:52: Running as user grid: /u01/app/11.2.0/grid/bin/cluutil -ckpt -oraclebase /u01/app/grid -writeckpt -name ROOTCRS_OHASD -state START
    2017-10-12 13:01:52: s_run_as_user2: Running /bin/su grid -c ' /u01/app/11.2.0/grid/bin/cluutil -ckpt -oraclebase /u01/app/grid -writeckpt -name ROOTCRS_OHASD -state START '
    2017-10-12 13:01:53: Removing file /tmp/file1SjuYP
    2017-10-12 13:01:53: Successfully removed file: /tmp/file1SjuYP
    2017-10-12 13:01:53: /bin/su successfully executed
    2017-10-12 13:01:53: Succeeded in writing the checkpoint:'ROOTCRS_OHASD' with status:START
    2017-10-12 13:01:53: CkptFile: /u01/app/grid/Clusterware/ckptGridHA_node2.xml
    2017-10-12 13:01:53: Sync the checkpoint file '/u01/app/grid/Clusterware/ckptGridHA_node2.xml'
    2017-10-12 13:01:53: Sync '/u01/app/grid/Clusterware/ckptGridHA_node2.xml' to the physical disk
    2017-10-12 13:01:53: Executing cmd: /bin/rpm -q sles-release
    2017-10-12 13:01:53: Command output:
    > package sles-release is not installed
    >End Command output
    2017-10-12 13:01:53: init file = /u01/app/11.2.0/grid/crs/init/init.ohasd
    2017-10-12 13:01:53: Copying file /u01/app/11.2.0/grid/crs/init/init.ohasd to /etc/init.d directory
    2017-10-12 13:01:53: Setting init.ohasd permission in /etc/init.d directory
    2017-10-12 13:01:53: init file = /u01/app/11.2.0/grid/crs/init/ohasd
    2017-10-12 13:01:53: Copying file /u01/app/11.2.0/grid/crs/init/ohasd to /etc/init.d directory
    2017-10-12 13:01:53: Setting ohasd permission in /etc/init.d directory
    2017-10-12 13:01:53: Executing cmd: /bin/rpm -q sles-release
    2017-10-12 13:01:53: Command output:
    > package sles-release is not installed
    'ohasd' is now registered
    2017-10-12 13:01:53: Starting ohasd
    2017-10-12 13:01:53: Checking the status of ohasd
    2017-10-12 13:01:53: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl check has
    2017-10-12 13:01:55: Checking the status of ohasd
    2017-10-12 13:02:00: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl check has
    2017-10-12 13:02:02: Checking the status of ohasd
    2017-10-12 13:02:07: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl check has
    2017-10-12 13:02:09: Checking the status of ohasd
    2017-10-12 13:02:14: ohasd is not already running.. will start it now
    2017-10-12 13:02:14: Executing cmd: /bin/rpm -qf /sbin/init
    2017-10-12 13:02:14: Command output:
    > upstart-0.6.5-13.el6_5.3.x86_64

    2017-10-12 13:02:33: Registering type ora.daemon.type
    2017-10-12 13:02:33: Executing /u01/app/11.2.0/grid/bin/crsctl add type ora.daemon.type -basetype cluster_resource -file /u01/app/11.2.0/grid/log/node2/ohasd/ora.daemon.type -init
    2017-10-12 13:02:33: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add type ora.daemon.type -basetype cluster_resource -file /u01/app/11.2.0/grid/log/node2/ohasd/ora.daemon.type -init
    2017-10-12 13:02:34: Registering type ora.haip.type
    2017-10-12 13:02:34: Executing /u01/app/11.2.0/grid/bin/crsctl add type ora.haip.type -basetype cluster_resource -file /u01/app/11.2.0/grid/log/node2/ohasd/ora.haip.type -init
    2017-10-12 13:02:34: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add type ora.haip.type -basetype cluster_resource -file /u01/app/11.2.0/grid/log/node2/ohasd/ora.haip.type -init
    2017-10-12 13:02:34: Removing file /u01/app/11.2.0/grid/log/node2/ohasd/ora.haip.type
    2017-10-12 13:02:34: Successfully removed file: /u01/app/11.2.0/grid/log/node2/ohasd/ora.haip.type
    2017-10-12 13:02:34: Registering type 'ora.mdns.type'
    2017-10-12 13:02:34: Executing /u01/app/11.2.0/grid/bin/crsctl add type ora.mdns.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/mdns.type -init
    2017-10-12 13:02:34: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add type ora.mdns.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/mdns.type -init
    2017-10-12 13:02:34: Type 'ora.mdns.type' added successfully
    2017-10-12 13:02:34: Registering type 'ora.gpnp.type'
    2017-10-12 13:02:34: Executing /u01/app/11.2.0/grid/bin/crsctl add type ora.gpnp.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/gpnp.type -init
    2017-10-12 13:02:34: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add type ora.gpnp.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/gpnp.type -init
    2017-10-12 13:02:34: Type 'ora.gpnp.type' added successfully
    2017-10-12 13:02:34: Registering type 'ora.gipc.type'
    2017-10-12 13:02:34: Executing /u01/app/11.2.0/grid/bin/crsctl add type ora.gipc.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/gipc.type -init
    2017-10-12 13:02:34: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add type ora.gipc.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/gipc.type -init
    2017-10-12 13:02:34: Type 'ora.gipc.type' added successfully
    2017-10-12 13:02:34: Registering type 'ora.cssd.type'
    2017-10-12 13:02:34: Executing /u01/app/11.2.0/grid/bin/crsctl add type ora.cssd.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/cssd.type -init
    2017-10-12 13:02:34: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add type ora.cssd.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/cssd.type -init
    2017-10-12 13:02:35: Type 'ora.cssd.type' added successfully
    2017-10-12 13:02:35: Registering type 'ora.cssdmonitor.type'
    2017-10-12 13:02:35: Executing /u01/app/11.2.0/grid/bin/crsctl add type ora.cssdmonitor.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/cssdmonitor.type -init
    2017-10-12 13:02:35: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add type ora.cssdmonitor.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/cssdmonitor.type -init
    2017-10-12 13:02:35: Type 'ora.cssdmonitor.type' added successfully
    2017-10-12 13:02:35: Registering type 'ora.crs.type'
    2017-10-12 13:02:35: Executing /u01/app/11.2.0/grid/bin/crsctl add type ora.crs.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/crs.type -init
    2017-10-12 13:02:35: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add type ora.crs.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/crs.type -init
    2017-10-12 13:02:35: Type 'ora.crs.type' added successfully
    2017-10-12 13:02:35: Registering type 'ora.evm.type'
    2017-10-12 13:02:35: Executing /u01/app/11.2.0/grid/bin/crsctl add type ora.evm.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/evm.type -init
    2017-10-12 13:02:35: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add type ora.evm.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/evm.type -init
    2017-10-12 13:02:35: Type 'ora.evm.type' added successfully
    2017-10-12 13:02:35: Registering type 'ora.ctss.type'
    2017-10-12 13:02:35: Executing /u01/app/11.2.0/grid/bin/crsctl add type ora.ctss.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/ctss.type -init
    2017-10-12 13:02:35: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add type ora.ctss.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/ctss.type -init
    2017-10-12 13:02:36: Type 'ora.ctss.type' added successfully
    2017-10-12 13:02:36: Registering type 'ora.crf.type'
    2017-10-12 13:02:36: Executing /u01/app/11.2.0/grid/bin/crsctl add type ora.crf.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/crf.type -init
    2017-10-12 13:02:36: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add type ora.crf.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/crf.type -init
    2017-10-12 13:02:36: Type 'ora.crf.type' added successfully
    2017-10-12 13:02:36: Registering type 'ora.asm.type'
    2017-10-12 13:02:36: Executing /u01/app/11.2.0/grid/bin/crsctl add type ora.asm.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/asm.type -init
    2017-10-12 13:02:36: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add type ora.asm.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/asm.type -init
    2017-10-12 13:02:36: Type 'ora.asm.type' added successfully
    2017-10-12 13:02:36: Registering type 'ora.drivers.acfs.type'
    2017-10-12 13:02:36: Executing /u01/app/11.2.0/grid/bin/crsctl add type ora.drivers.acfs.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/drivers.acfs.type -init
    2017-10-12 13:02:36: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add type ora.drivers.acfs.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/drivers.acfs.type -init
    2017-10-12 13:02:36: Type 'ora.drivers.acfs.type' added successfully
    2017-10-12 13:02:36: Registering type 'ora.diskmon.type'
    2017-10-12 13:02:36: Executing /u01/app/11.2.0/grid/bin/crsctl add type ora.diskmon.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/diskmon.type -init
    2017-10-12 13:02:36: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add type ora.diskmon.type -basetype ora.daemon.type -file /u01/app/11.2.0/grid/log/node2/ohasd/diskmon.type -init
    2017-10-12 13:02:37: Type 'ora.diskmon.type' added successfully
    2017-10-12 13:02:37: Executing /u01/app/11.2.0/grid/bin/crsctl add resource ora.mdnsd -attr "ACL='owner:grid:rw-,pgrp:oinstall:rw-,other::r--,user:grid:rwx'" -type ora.mdns.type -init
    2017-10-12 13:02:37: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add resource ora.mdnsd -attr "ACL='owner:grid:rw-,pgrp:oinstall:rw-,other::r--,user:grid:rwx'" -type ora.mdns.type -init
    2017-10-12 13:02:37: Executing /u01/app/11.2.0/grid/bin/crsctl add resource ora.gpnpd -attr "ACL='owner:grid:rw-,pgrp:oinstall:rw-,other::r--,user:grid:rwx',START_DEPENDENCIES='weak(ora.mdnsd)'" -type ora.gpnp.type -init
    2017-10-12 13:02:37: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl add resource ora.gpnpd -attr "ACL='owner:grid:rw-,pgrp:oinstall:rw-,other::r--,user:grid:rwx',START_DEPENDENCIES='weak(ora.mdnsd)'" -type ora.gpnp.type -init
    2017-10-12 13:02:37: Executing /u01/app/11.2.0/grid/bin/crsctl add resource ora.gipcd -attr "ACL='owner:grid:rw-,pgrp:oinstall:rw-,other::r--,user:grid:rwx',START_DEPENDENCIES='hard(ora.gpnpd)',STOP_DEPENDENCIES=hard(intermediate:ora.gpnpd)" -type ora.gipc.type -init
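The repeated "Checking the status of ohasd" entries earlier in this section look like a poll-and-retry pattern: run a status check, wait, and try again until it succeeds or the attempts run out. A minimal sketch of that pattern follows. The function wait_for, the retry count and the delay are all assumptions for illustration, and fake_check merely stands in for a real status command; none of this is taken from rootcrs.pl.

```bash
#!/usr/bin/env bash
# wait_for MAX_TRIES DELAY CMD [ARGS...]
# Run CMD until it succeeds, at most MAX_TRIES times, sleeping DELAY seconds
# after each failed attempt. Returns 0 on success, 1 if every attempt failed.
# Hypothetical helper for illustration.
wait_for() {
    local max_tries=$1
    local delay=$2
    shift 2
    local try=1
    while [ "$try" -le "$max_tries" ]; do
        if "$@"; then
            return 0
        fi
        sleep "$delay"
        try=$((try + 1))
    done
    return 1
}

# Stand-in for a status check: fails twice, then succeeds on the third call.
calls=0
fake_check() {
    calls=$((calls + 1))
    [ "$calls" -ge 3 ]
}

if wait_for 5 0 fake_check; then
    echo "service is up after $calls checks"
fi
```

In the log above the checks are several seconds apart, so a real loop would use a non-zero delay.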

启动CSS

2017-10-12 13:02:43: Starting CSS in exclusive mode
2017-10-12 13:02:43: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl start resource ora.cssd -init -env CSSD_MODE=-X
2017-10-12 13:03:31: Command output:
> CRS-2672: Attempting to start 'ora.mdnsd' on 'node2'
> CRS-2676: Start of 'ora.mdnsd' on 'node2' succeeded
> CRS-2672: Attempting to start 'ora.gpnpd' on 'node2'
> CRS-2676: Start of 'ora.gpnpd' on 'node2' succeeded
> CRS-2672: Attempting to start 'ora.cssdmonitor' on 'node2'
> CRS-2672: Attempting to start 'ora.gipcd' on 'node2'
> CRS-2676: Start of 'ora.cssdmonitor' on 'node2' succeeded
> CRS-2676: Start of 'ora.gipcd' on 'node2' succeeded
> CRS-2672: Attempting to start 'ora.cssd' on 'node2'
> CRS-2672: Attempting to start 'ora.diskmon' on 'node2'
> CRS-2676: Start of 'ora.diskmon' on 'node2' succeeded
> CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node node1, number 1, and is terminating
> CRS-2674: Start of 'ora.cssd' on 'node2' failed
> CRS-2679: Attempting to clean 'ora.cssd' on 'node2'
> CRS-2681: Clean of 'ora.cssd' on 'node2' succeeded
> CRS-2673: Attempting to stop 'ora.gipcd' on 'node2'
> CRS-2677: Stop of 'ora.gipcd' on 'node2' succeeded
> CRS-2673: Attempting to stop 'ora.cssdmonitor' on 'node2'
> CRS-2677: Stop of 'ora.cssdmonitor' on 'node2' succeeded
> CRS-2673: Attempting to stop 'ora.gpnpd' on 'node2'
> CRS-2677: Stop of 'ora.gpnpd' on 'node2' succeeded
> CRS-2673: Attempting to stop 'ora.mdnsd' on 'node2'
> CRS-2677: Stop of 'ora.mdnsd' on 'node2' succeeded
> CRS-4000: Command Start failed, or completed with errors.
>End Command output
2017-10-12 13:03:46: Starting CSS in clustered mode
2017-10-12 13:03:46: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl start resource ora.cssd -init
2017-10-12 13:04:07: Command output:
> CRS-2672: Attempting to start 'ora.cssdmonitor' on 'node2'
> CRS-2672: Attempting to start 'ora.gipcd' on 'node2'
> CRS-2676: Start of 'ora.cssdmonitor' on 'node2' succeeded
> CRS-2676: Start of 'ora.gipcd' on 'node2' succeeded
> CRS-2672: Attempting to start 'ora.cssd' on 'node2'
> CRS-2672: Attempting to start 'ora.diskmon' on 'node2'
> CRS-2676: Start of 'ora.diskmon' on 'node2' succeeded
> CRS-2676: Start of 'ora.cssd' on 'node2' succeeded
>End Command output
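In this log the exclusive-mode start on node2 is rejected with CRS-4402 because node1 already runs an active CSS daemon, and the script then starts CSS in clustered mode. That CRS-4402 and CRS-4000 pair is therefore expected on a node that is not the first to run root.sh. The sketch below checks a log for this pattern; the function name `css_start_summary` is hypothetical, and the message texts it matches are copied from the excerpt above.

```shell
#!/bin/sh
# css_start_summary: hypothetical helper, not part of Grid Infrastructure.
# Reads a rootcrs log on stdin and reports how the CSS start went.
css_start_summary() {
    awk '
        /Starting CSS in exclusive mode/     { excl = 1 }
        /CRS-4402/                           { conflict = 1 }
        /Starting CSS in clustered mode/     { clustered = 1 }
        # "." stands in for the quote characters around ora.cssd;
        # the trailing " on " keeps ora.cssdmonitor from matching.
        /CRS-2676: Start of .ora\.cssd. on / { started = 1 }
        END {
            if (excl && conflict && clustered && started)
                print "exclusive start rejected (CRS-4402), clustered start succeeded"
            else if (excl && started && !conflict)
                print "exclusive start succeeded"
            else
                print "CSS start not confirmed"
        }
    '
}
```

A result of "CSS start not confirmed" only means the expected messages were not found; the log itself still has to be read to find the cause.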

Starting the RAC services and checking their status

2017-10-12 13:04:24: Start of resource "ora.cluster_interconnect.haip" Succeeded
2017-10-12 13:04:24: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl start resource ora.asm -init
2017-10-12 13:04:37: Command output:
> CRS-2672: Attempting to start 'ora.asm' on 'node2'
> CRS-2676: Start of 'ora.asm' on 'node2' succeeded
>End Command output
2017-10-12 13:04:37: Start of resource "ora.asm" Succeeded
2017-10-12 13:04:37: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl start resource ora.crsd -init
2017-10-12 13:04:38: Command output:
> CRS-2672: Attempting to start 'ora.crsd' on 'node2'
> CRS-2676: Start of 'ora.crsd' on 'node2' succeeded
>End Command output
2017-10-12 13:04:38: Start of resource "ora.crsd" Succeeded
2017-10-12 13:04:38: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl start resource ora.evmd -init
2017-10-12 13:04:40: Command output:
> CRS-2672: Attempting to start 'ora.evmd' on 'node2'
> CRS-2676: Start of 'ora.evmd' on 'node2' succeeded
>End Command output
2017-10-12 13:04:40: Start of resource "ora.evmd" Succeeded
2017-10-12 13:04:40: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl check crs
2017-10-12 13:04:40: Command output:
> CRS-4638: Oracle High Availability Services is online
> CRS-4535: Cannot communicate with Cluster Ready Services
> CRS-4529: Cluster Synchronization Services is online
> CRS-4534: Cannot communicate with Event Manager
>End Command output
2017-10-12 13:04:40: Running /u01/app/11.2.0/grid/bin/crsctl check cluster -n node2
2017-10-12 13:04:40: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl check cluster -n node2
2017-10-12 13:04:40: Checking the status of cluster
2017-10-12 13:04:45: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl check cluster -n node2
2017-10-12 13:04:45: Checking the status of cluster
2017-10-12 13:04:50: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl check cluster -n node2
2017-10-12 13:04:50: Checking the status of cluster
2017-10-12 13:04:55: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl check cluster -n node2
2017-10-12 13:04:55: Checking the status of cluster
2017-10-12 13:05:00: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl check cluster -n node2
2017-10-12 13:05:01: Checking the status of cluster
2017-10-12 13:05:06: Executing cmd: /u01/app/11.2.0/grid/bin/crsctl check cluster -n node2
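Right after crsd and evmd are started, `crsctl check crs` still reports CRS-4535 and CRS-4534, and the log shows `crsctl check cluster -n node2` being re-run about every five seconds until the stack responds. The same wait-and-retry pattern can be sketched as a small shell function. This is not the rootcrs.pl implementation; the function name `wait_for_check` and its arguments are hypothetical, and the check command is supplied by the caller.

```shell
#!/bin/sh
# wait_for_check: hypothetical helper, not part of Grid Infrastructure.
# Re-runs a check command until it exits 0 or the attempts are used up.
#   $1 = maximum number of attempts
#   $2 = seconds to sleep between attempts
#   remaining arguments = the check command and its arguments
wait_for_check() {
    max_tries=$1
    interval=$2
    shift 2
    try=1
    while [ "$try" -le "$max_tries" ]; do
        if "$@" >/dev/null 2>&1; then
            echo "check passed on attempt $try"
            return 0
        fi
        # Sleep only when another attempt will follow.
        if [ "$try" -lt "$max_tries" ]; then
            sleep "$interval"
        fi
        try=$((try + 1))
    done
    echo "check still failing after $max_tries attempts"
    return 1
}
```

One possible use is `wait_for_check 60 5 sh -c '/u01/app/11.2.0/grid/bin/crsctl check cluster -n node2 | grep -q CRS-4537'`, which assumes that CRS-4537 ("Cluster Ready Services is online") appears in the output once crsd is reachable. Matching on the message avoids relying on the exit status of crsctl, which should be verified separately before it is used as the check.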

Backing up the OLR

>
> node2 2017/10/12 13:05:20 /u01/app/11.2.0/grid/cdata/node2/backup_20171012_130520.olr
>End Command output
2017-10-12 13:05:20: Successfully generated OLR backup
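The backup line carries the node name, the timestamp, and the path of the generated .olr file. A minimal sketch for pulling the backup paths out of such output follows; the function name `olr_backup_paths` is hypothetical.

```shell
#!/bin/sh
# olr_backup_paths: hypothetical helper, not part of Grid Infrastructure.
# Reads text on stdin and prints every whitespace-separated field that
# ends in ".olr", one path per line.
olr_backup_paths() {
    awk '{ for (i = 1; i <= NF; i++) if ($i ~ /\.olr$/) print $i }'
}
```

Run as root, `ocrconfig -local -showbackup | olr_backup_paths` would list the existing OLR backups on the node, and each path can then be checked with `ls -l`.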

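As the walk-through above shows, root.sh carries out the following stages.

Stage 1: Perform miscellaneous preparatory tasks before the cluster is configured.

Stage 2: Perform the initial setup of the node.

Stage 3: Initialize the OLR.

Stage 4: Initialize the gpnp wallet and the gpnp profile.

Stage 5: Configure the init.ohasd script and start ohasd.

Stage 6: Add the initial resources to the cluster.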

Stage 7: Start css in exclusive mode and format the voting file (VF). On every node other than the first one to run root.sh, css is started in normal mode instead.

Stage 8: Start the cluster in normal mode.

Stage 9: Add the CRS-related resources to the cluster.

Stage 10: Restart the cluster.
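To relate these stages to a real run, it can help to reduce the rootcrs log to its milestone messages. The sketch below filters for the messages quoted in this article only; the function name `root_sh_milestones` is hypothetical, and a log from another version or platform may word its messages differently.

```shell
#!/bin/sh
# root_sh_milestones: hypothetical helper, not part of Grid Infrastructure.
# Reads a rootcrs log on stdin and keeps only the milestone lines:
# CSS start mode, successful resource starts, and the OLR backup.
root_sh_milestones() {
    grep -E 'Starting CSS in (exclusive|clustered) mode|Start of resource "[^"]+" Succeeded|Successfully generated OLR backup'
}
```

For example: `root_sh_milestones < /u01/app/11.2.0/grid/cfgtoollogs/crsconfig/rootcrs_node2.log`. Because the function wraps grep, it exits with status 1 when no milestone line is found.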
