Building Deep Learning Networks Like Stacking Blocks: A Complete Walkthrough of the Xception Code

2017-06-28 20:45


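Before looking at Xception, we first need to understand Inception. The Inception architecture was introduced by Szegedy et al. in 2014 as GoogLeNet (Inception V1). It was later refined into Inception V2 and Inception V3, and most recently into Inception-ResNet. Inception itself was inspired by the earlier Network-In-Network architecture. Since its introduction, Inception has been one of the best-performing model families on ImageNet and on Google's internal datasets, in particular JFT. The core building block of Inception-style models is the Inception module, which exists in several versions.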

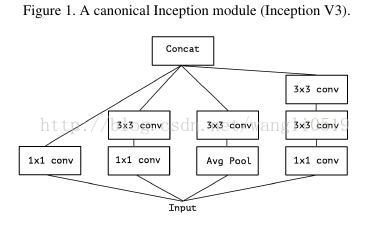

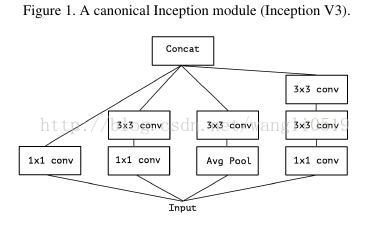

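The figure above shows the canonical Inception module from the Inception V3 architecture. An Inception model is a stack of such modules. This sets it apart from earlier VGG-style networks, which are simple stacks of plain convolutional layers.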

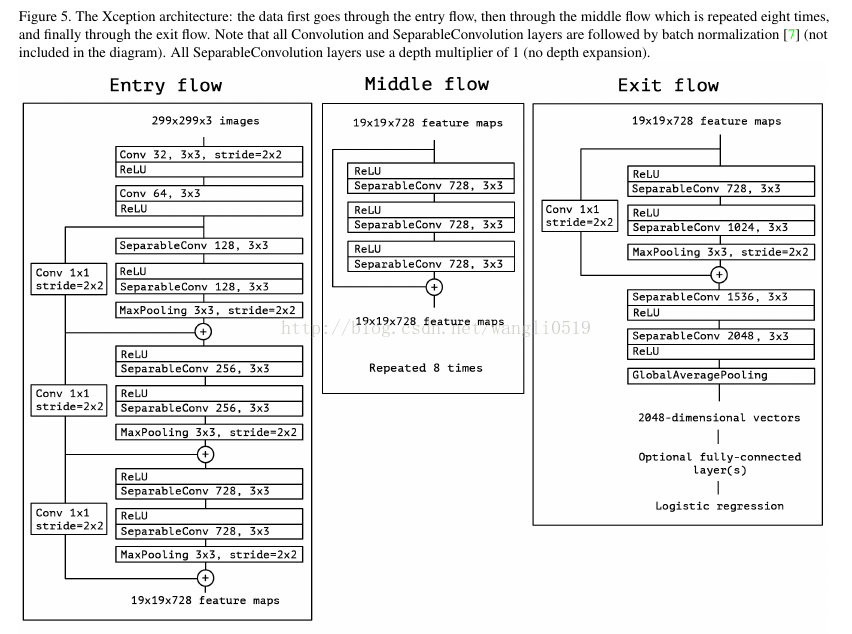

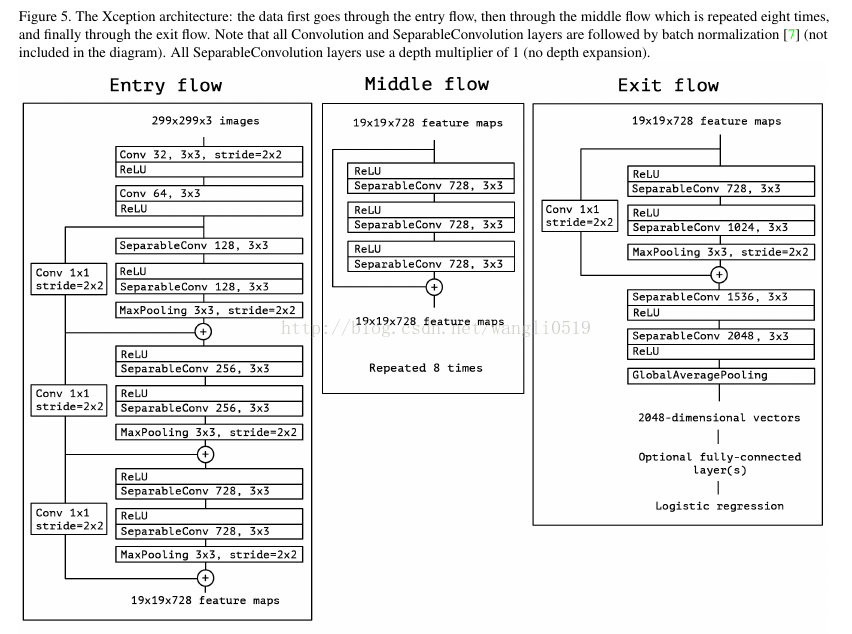


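Xception rests on the hypothesis that the mapping of cross-channel correlations and the mapping of spatial correlations in the feature maps of a convolutional neural network can be entirely decoupled. This is a stronger, extreme form of the hypothesis underlying the Inception architecture, so the model is called Xception, meaning "Extreme Inception".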
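In practice, the decoupling is realized with depthwise separable convolutions, which is roughly what Keras's `SeparableConv2D` provides. These layers first apply one k×k spatial filter per input channel (depthwise) and then a 1×1 convolution that mixes channels (pointwise). The sketch below is plain Python and compares the weight counts of a regular convolution and a separable one. It assumes a depth multiplier of 1 and no bias, matching `use_bias=False` in the article's code. The helper names are hypothetical.

```python
def conv2d_params(k, c_in, c_out, bias=False):
    """Weights in a regular k x k convolution mapping c_in -> c_out channels."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def separable_conv2d_params(k, c_in, c_out, bias=False):
    """Weights in a depthwise separable convolution (depth multiplier 1):
    one k x k depthwise filter per input channel, then a 1 x 1 pointwise
    convolution that mixes the channels."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise + (c_out if bias else 0)


# A 3x3 layer with 728 input and 728 output channels, as in Xception's middle blocks.
regular = conv2d_params(3, 728, 728)
separable = separable_conv2d_params(3, 728, 728)
print(regular, separable, round(regular / separable, 2))  # 4769856 536536 8.89
```

At this width, the separable layer needs roughly one ninth of the weights of a regular 3×3 convolution. This saving is what makes a deep stack of such layers affordable.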

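On ImageNet, Xception reaches a top-1 validation accuracy of 0.790 and a top-5 validation accuracy of 0.945. Note that its input size is 299×299 rather than the 224×224 used by VGG16 and ResNet, and that its input preprocessing function is also different. Note too that, at the time of writing, this model supports only TensorFlow as the backend.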
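Top-1 and top-5 accuracy are defined as the fraction of images whose true class is the highest-scoring prediction, or among the five highest-scoring predictions. The sketch below is plain Python and uses made-up scores. The function name `top_k_accuracy` is hypothetical, and the function is not a Keras API.

```python
def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest scores.

    scores: list of per-class score lists, one per sample
    labels: list of true class indices
    """
    hits = 0
    for row, label in zip(scores, labels):
        # Indices of the k largest scores in this row
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        hits += label in top_k
    return hits / len(labels)


# Toy example: 3 samples, 4 classes (invented numbers)
scores = [
    [0.1, 0.6, 0.2, 0.1],  # true class 1 ranked first
    [0.5, 0.1, 0.3, 0.1],  # true class 2 ranked second
    [0.4, 0.3, 0.2, 0.1],  # true class 3 ranked last
]
labels = [1, 2, 3]
print(top_k_accuracy(scores, labels, 1))  # 1 of 3 samples
print(top_k_accuracy(scores, labels, 2))  # 2 of 3 samples
```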

For a detailed introduction, see the article on depthwise separable convolutions.

First, we import the dependencies:

```python
# Import paths as in Keras 2.0.x (2017); later Keras releases moved some of them
from __future__ import print_function
from __future__ import absolute_import

import warnings

import numpy as np

from keras.preprocessing import image
from keras.models import Model
from keras import layers
from keras.layers import Dense
from keras.layers import Input
from keras.layers import BatchNormalization
from keras.layers import Activation
from keras.layers import Conv2D
from keras.layers import SeparableConv2D
from keras.layers import MaxPooling2D
from keras.layers import GlobalAveragePooling2D
from keras.layers import GlobalMaxPooling2D
from keras.engine.topology import get_source_inputs
from keras.utils.data_utils import get_file
from keras import backend as K
from keras.applications.imagenet_utils import decode_predictions
from keras.applications.imagenet_utils import _obtain_input_shape
```

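Next, we specify the paths to the pretrained weights. The weights can be downloaded from the web, or they can be weights you trained and saved yourself. For how to save a trained model and its weights, see the Keras FAQ.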

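Below are the download URLs for the pretrained Xception weights. As noted above, the model currently supports only the TensorFlow backend, so only TensorFlow weights are provided.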

```python
TF_WEIGHTS_PATH = 'https://github.com/fchollet/deep-learning-models/releases/download/v0.4/xception_weights_tf_dim_ordering_tf_kernels.h5'
TF_WEIGHTS_PATH_NO_TOP = 'https://github.com/fchollet/deep-learning-models/releases/download/v0.4/xception_weights_tf_dim_ordering_tf_kernels_notop.h5'
```
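Which of the two files is fetched depends on `include_top`. `get_file(..., cache_subdir='models')` stores the file locally, so it is downloaded only once. The sketch below reproduces that choice in plain Python. It assumes `get_file`'s default cache directory of `~/.keras`; Keras falls back to `/tmp/.keras` if that directory is not writable. The helper `weights_location` is hypothetical and not part of Keras.

```python
import posixpath

# Values taken from TF_WEIGHTS_PATH / TF_WEIGHTS_PATH_NO_TOP above
BASE_URL = ('https://github.com/fchollet/deep-learning-models/'
            'releases/download/v0.4/')
TOP_FILE = 'xception_weights_tf_dim_ordering_tf_kernels.h5'
NOTOP_FILE = 'xception_weights_tf_dim_ordering_tf_kernels_notop.h5'


def weights_location(include_top, home='~'):
    """Hypothetical helper: return (url, local_path) for the weights file that
    Xception() requests, assuming the default cache <home>/.keras/models."""
    fname = TOP_FILE if include_top else NOTOP_FILE
    return BASE_URL + fname, posixpath.join(home, '.keras', 'models', fname)


for flag in (True, False):
    print(flag, *weights_location(flag))
```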

If you have not read the linked article on depthwise separable convolutions, here is a brief review of the model's structure.
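The structure can be summarized as a table of blocks, transcribed by hand from the `Xception()` code in this article. The Xception paper groups these blocks into an entry flow, a middle flow repeated eight times, and an exit flow. The sketch below is plain Python and not Keras. It lists the output channels of each 3×3 convolution or separable convolution and counts them; the strided 1×1 shortcut convolutions are excluded. The total of 36 should match the 36 convolutional layers the paper reports for the feature-extraction base.

```python
# (block name, output channels of its 3x3 conv / separable conv layers)
# Transcribed from the article's Xception() code; 1x1 shortcut convs excluded.
ENTRY_FLOW = [
    ('block1', [32, 64]),  # two regular Conv2D layers
    ('block2', [128, 128]),
    ('block3', [256, 256]),
    ('block4', [728, 728]),
]
# Eight identical residual modules: block5 ... block12
MIDDLE_FLOW = [('block%d' % i, [728, 728, 728]) for i in range(5, 13)]
EXIT_FLOW = [
    ('block13', [728, 1024]),
    ('block14', [1536, 2048]),
]


def count_conv_layers(*flows):
    """Total number of 3x3 (separable) convolution layers across the flows."""
    return sum(len(channels) for flow in flows for _, channels in flow)


print(count_conv_layers(ENTRY_FLOW, MIDDLE_FLOW, EXIT_FLOW))  # 36
```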

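Next we express this architecture in code. Loading the pretrained weights is optional. The data must follow TensorFlow's dimension ordering (height, width, channels), so set `image_data_format` to `'channels_last'` in the Keras configuration file `keras.json`. The default input size is 299×299. The number of channels must be 3, and the width and height must each be at least 71.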
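To see how large the final feature map is for a given input size, we can trace one spatial axis through the strides and padding used in `Xception()`. The sketch below is plain Python arithmetic and not Keras. The names `valid_out`, `same_out` and `xception_feature_size` are hypothetical. It assumes TensorFlow's rule that `'same'` padding yields ceil(n / stride) and `'valid'` yields floor((n - k) / stride) + 1.

```python
def valid_out(n, k, s):
    """Output length of a 'valid' (unpadded) conv/pool along one axis."""
    return (n - k) // s + 1


def same_out(n, s):
    """Output length of a 'same'-padded conv/pool along one axis: ceil(n / s)."""
    return (n + s - 1) // s


def xception_feature_size(n):
    """Spatial size after the last block for an n x n input, following the
    strides/padding in the article's Xception() code."""
    n = valid_out(n, 3, 2)  # block1_conv1: 3x3, stride 2, no padding
    n = valid_out(n, 3, 1)  # block1_conv2: 3x3, stride 1, no padding
    for _ in range(4):      # max pools in blocks 2, 3, 4, 13: 3x3, stride 2, 'same'
        n = same_out(n, 2)
    return n                # separable convs are stride 1 with 'same' padding


print(xception_feature_size(299))  # 10
print(xception_feature_size(71))   # 3
```

A 299×299 image therefore ends up as a 10×10×2048 map before global pooling, and a 71×71 image still leaves a 3×3 map. The sketch does not claim to explain why Keras chose 71 as `min_size`.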

```python
def Xception(include_top=True, weights='imagenet',
             input_tensor=None, input_shape=None,
             pooling=None,
             classes=1000):
    # Validate arguments
    if weights not in {'imagenet', None}:
        raise ValueError('The `weights` argument should be either '
                         '`None` (random initialization) or `imagenet` '
                         '(pre-training on ImageNet).')

    if weights == 'imagenet' and include_top and classes != 1000:
        raise ValueError('If using `weights` as imagenet with `include_top`'
                         ' as true, `classes` should be 1000')

    if K.backend() != 'tensorflow':
        raise RuntimeError('The Xception model is only available with '
                           'the TensorFlow backend.')

    # Temporarily switch to channels_last; the old setting is restored at the end
    if K.image_data_format() != 'channels_last':
        warnings.warn('The Xception model is only available for the '
                      'input data format "channels_last" '
                      '(height, width, channels). '
                      'However your settings specify the default '
                      'data format "channels_first" (channels, height, width). '
                      'You should set `image_data_format="channels_last"` in your Keras '
                      'config located at ~/.keras/keras.json. '
                      'The model being returned right now will expect inputs '
                      'to follow the "channels_last" data format.')
        K.set_image_data_format('channels_last')
        old_data_format = 'channels_first'
    else:
        old_data_format = None

    # Default input is 299x299x3; height and width must be at least 71
    input_shape = _obtain_input_shape(input_shape,
                                      default_size=299,
                                      min_size=71,
                                      data_format=K.image_data_format(),
                                      include_top=include_top)

    if input_tensor is None:
        img_input = Input(shape=input_shape)
    else:
        if not K.is_keras_tensor(input_tensor):
            img_input = Input(tensor=input_tensor, shape=input_shape)
        else:
            img_input = input_tensor

    # Block 1: two regular 3x3 convolutions, the first with stride 2
    x = Conv2D(32, (3, 3), strides=(2, 2), use_bias=False, name='block1_conv1')(img_input)
    x = BatchNormalization(name='block1_conv1_bn')(x)
    x = Activation('relu', name='block1_conv1_act')(x)
    x = Conv2D(64, (3, 3), use_bias=False, name='block1_conv2')(x)
    x = BatchNormalization(name='block1_conv2_bn')(x)
    x = Activation('relu', name='block1_conv2_act')(x)

    # Block 2: the shortcut is a strided 1x1 conv so it matches the pooled main path
    residual = Conv2D(128, (1, 1), strides=(2, 2),
                      padding='same', use_bias=False)(x)
    residual = BatchNormalization()(residual)

    x = SeparableConv2D(128, (3, 3), padding='same', use_bias=False, name='block2_sepconv1')(x)
    x = BatchNormalization(name='block2_sepconv1_bn')(x)
    x = Activation('relu', name='block2_sepconv2_act')(x)
    x = SeparableConv2D(128, (3, 3), padding='same', use_bias=False, name='block2_sepconv2')(x)
    x = BatchNormalization(name='block2_sepconv2_bn')(x)

    x = MaxPooling2D((3, 3), strides=(2, 2), padding='same', name='block2_pool')(x)
    x = layers.add([x, residual])

    # Block 3: same pattern with 256 filters
    residual = Conv2D(256, (1, 1), strides=(2, 2),
                      padding='same', use_bias=False)(x)
    residual = BatchNormalization()(residual)

    x = Activation('relu', name='block3_sepconv1_act')(x)
    x = SeparableConv2D(256, (3, 3), padding='same', use_bias=False, name='block3_sepconv1')(x)
    x = BatchNormalization(name='block3_sepconv1_bn')(x)
    x = Activation('relu', name='block3_sepconv2_act')(x)
    x = SeparableConv2D(256, (3, 3), padding='same', use_bias=False, name='block3_sepconv2')(x)
    x = BatchNormalization(name='block3_sepconv2_bn')(x)

    x = MaxPooling2D((3, 3), strides=(2, 2), padding='same', name='block3_pool')(x)
    x = layers.add([x, residual])

    # Block 4: same pattern with 728 filters
    residual = Conv2D(728, (1, 1), strides=(2, 2),
                      padding='same', use_bias=False)(x)
    residual = BatchNormalization()(residual)

    x = Activation('relu', name='block4_sepconv1_act')(x)
    x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name='block4_sepconv1')(x)
    x = BatchNormalization(name='block4_sepconv1_bn')(x)
    x = Activation('relu', name='block4_sepconv2_act')(x)
    x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name='block4_sepconv2')(x)
    x = BatchNormalization(name='block4_sepconv2_bn')(x)

    x = MaxPooling2D((3, 3), strides=(2, 2), padding='same', name='block4_pool')(x)
    x = layers.add([x, residual])

    # Blocks 5 to 12: eight identical modules with an identity shortcut
    for i in range(8):
        residual = x
        prefix = 'block' + str(i + 5)

        x = Activation('relu', name=prefix + '_sepconv1_act')(x)
        x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name=prefix + '_sepconv1')(x)
        x = BatchNormalization(name=prefix + '_sepconv1_bn')(x)
        x = Activation('relu', name=prefix + '_sepconv2_act')(x)
        x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name=prefix + '_sepconv2')(x)
        x = BatchNormalization(name=prefix + '_sepconv2_bn')(x)
        x = Activation('relu', name=prefix + '_sepconv3_act')(x)
        x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name=prefix + '_sepconv3')(x)
        x = BatchNormalization(name=prefix + '_sepconv3_bn')(x)

        x = layers.add([x, residual])

    # Block 13: downsampling module, 728 -> 1024 filters
    residual = Conv2D(1024, (1, 1), strides=(2, 2),
                      padding='same', use_bias=False)(x)
    residual = BatchNormalization()(residual)

    x = Activation('relu', name='block13_sepconv1_act')(x)
    x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name='block13_sepconv1')(x)
    x = BatchNormalization(name='block13_sepconv1_bn')(x)
    x = Activation('relu', name='block13_sepconv2_act')(x)
    x = SeparableConv2D(1024, (3, 3), padding='same', use_bias=False, name='block13_sepconv2')(x)
    x = BatchNormalization(name='block13_sepconv2_bn')(x)

    x = MaxPooling2D((3, 3), strides=(2, 2), padding='same', name='block13_pool')(x)
    x = layers.add([x, residual])

    # Block 14: two separable convolutions without a shortcut
    x = SeparableConv2D(1536, (3, 3), padding='same', use_bias=False, name='block14_sepconv1')(x)
    x = BatchNormalization(name='block14_sepconv1_bn')(x)
    x = Activation('relu', name='block14_sepconv1_act')(x)

    x = SeparableConv2D(2048, (3, 3), padding='same', use_bias=False, name='block14_sepconv2')(x)
    x = BatchNormalization(name='block14_sepconv2_bn')(x)
    x = Activation('relu', name='block14_sepconv2_act')(x)

    if include_top:
        # Classification head
        x = GlobalAveragePooling2D(name='avg_pool')(x)
        x = Dense(classes, activation='softmax', name='predictions')(x)
    else:
        # Feature extractor, with optional global pooling
        if pooling == 'avg':
            x = GlobalAveragePooling2D()(x)
        elif pooling == 'max':
            x = GlobalMaxPooling2D()(x)

    # Account for any layers that feed into `input_tensor`
    if input_tensor is not None:
        inputs = get_source_inputs(input_tensor)
    else:
        inputs = img_input

    model = Model(inputs, x, name='xception')

    # Download (or reuse the cached copy of) the pretrained weights
    if weights == 'imagenet':
        if include_top:
            weights_path = get_file('xception_weights_tf_dim_ordering_tf_kernels.h5',
                                    TF_WEIGHTS_PATH,
                                    cache_subdir='models')
        else:
            weights_path = get_file('xception_weights_tf_dim_ordering_tf_kernels_notop.h5',
                                    TF_WEIGHTS_PATH_NO_TOP,
                                    cache_subdir='models')
        model.load_weights(weights_path)

    if old_data_format:
        K.set_image_data_format(old_data_format)
    return model
```
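In each downsampling block, a strided 1×1 shortcut convolution is added to a max-pooled main path. `layers.add()` only works when both tensors have identical shapes. The sketch below is plain Python, and `conv_out` is a hypothetical helper. It checks the spatial sizes entering blocks 2, 3, 4 and 13 for a 299×299 input, and compares them with what a `'valid'` pool would produce. It assumes TensorFlow's padding rules.

```python
def conv_out(n, kernel, stride, padding):
    """Output length along one axis for a TensorFlow-style conv/pool."""
    if padding == 'same':
        return (n + stride - 1) // stride  # ceil(n / stride), kernel size irrelevant
    return (n - kernel) // stride + 1      # 'valid': no padding


# Sizes entering blocks 2, 3, 4 and 13 for a 299x299 input
for n in (147, 74, 37, 19):
    shortcut = conv_out(n, 1, 2, 'same')       # Conv2D 1x1, strides=2, padding='same'
    pooled = conv_out(n, 3, 2, 'same')         # MaxPooling2D 3x3, strides=2, padding='same'
    pooled_valid = conv_out(n, 3, 2, 'valid')  # hypothetical 'valid' pool, for contrast
    print(n, shortcut, pooled, pooled_valid)
```

With `padding='same'`, the pooled path matches the shortcut exactly. A `'valid'` pool would come out one pixel short at every block, and the addition would fail. This illustrates why the padding choice matters here.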

Define the input preprocessing function:

```python
def preprocess_input(x):
    # Scale pixel values from [0, 255] to [-1, 1]; modifies the float array x in place
    x /= 255.
    x -= 0.5
    x *= 2.
    return x
```
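`preprocess_input` maps pixel values from [0, 255] onto [-1, 1]. Keras's VGG16 and ResNet50 helpers instead convert RGB to BGR and subtract per-channel ImageNet means. This is presumably the difference in preprocessing mentioned earlier. The sketch below applies the same arithmetic to single values in plain Python. `xception_scale` is a hypothetical name. Note that the original function works in place on a NumPy array, so the array must already be floating point, as `image.img_to_array` returns; an integer array would fail at `x /= 255.`.

```python
def xception_scale(pixel):
    """Same arithmetic as preprocess_input, for one value:
    divide by 255, subtract 0.5, multiply by 2."""
    return (pixel / 255.0 - 0.5) * 2.0


for p in (0, 127.5, 255):
    print(p, xception_scale(p))  # -1.0, 0.0 and 1.0 respectively
```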

Finally, the script's main entry point:

```python
if __name__ == '__main__':
    model = Xception(include_top=True, weights='imagenet')

    img_path = 'elephant.jpg'
    img = image.load_img(img_path, target_size=(299, 299))
    x = image.img_to_array(img)
    x = np.expand_dims(x, axis=0)  # add a batch dimension: (1, 299, 299, 3)
    x = preprocess_input(x)
    print('Input image shape:', x.shape)

    preds = model.predict(x)
    print(np.argmax(preds))  # index of the highest-scoring class
    print('Predicted:', decode_predictions(preds, 1))
```
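`decode_predictions(preds, 1)` turns the 1000 softmax scores into the single best ImageNet label. The sketch below shows the core idea in plain Python with a made-up four-class output. `decode_top_k` is a hypothetical stand-in for one sample. The real Keras function operates on a batch and also returns each class's WordNet ID alongside its name and score.

```python
def decode_top_k(scores, class_names, k=1):
    """Hypothetical stand-in for decode_predictions on one sample: return the
    k (class_name, score) pairs with the highest scores, best first."""
    ranked = sorted(zip(class_names, scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Invented 4-class output; Xception itself produces 1000 ImageNet scores
preds = [0.05, 0.15, 0.70, 0.10]
names = ['cat', 'dog', 'elephant', 'zebra']
print(decode_top_k(preds, names, k=1))  # [('elephant', 0.7)]
print(decode_top_k(preds, names, k=2))  # [('elephant', 0.7), ('dog', 0.15)]
```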

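In short, the Xception architecture is a linear stack of depthwise separable convolution layers with residual connections. It combines strengths of several earlier models, is easy to define and modify for a model of its complexity, and performs very well in practice.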
