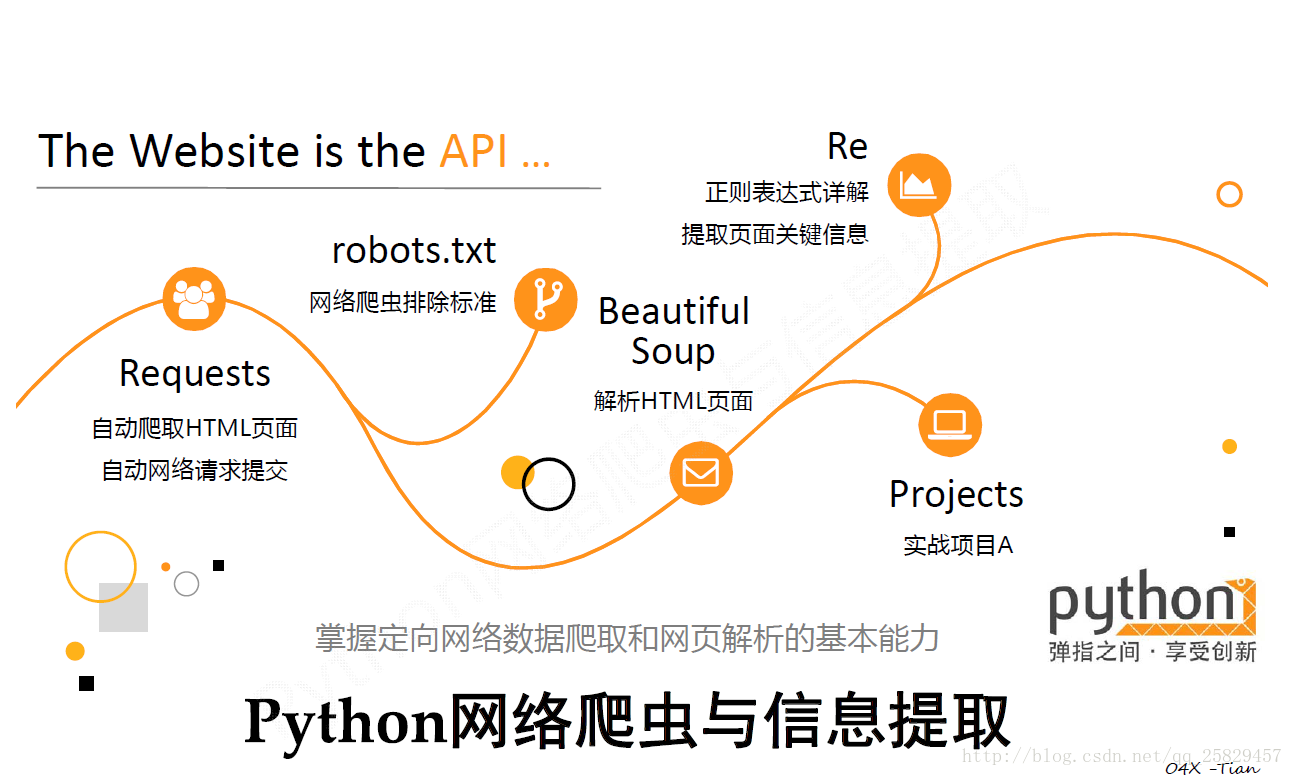

Python网络爬虫与信息提取(中国大学mooc)

2017-06-04 17:11

603 查看

目录

目录Python网络爬虫与信息提取

淘宝商品比价定向爬虫

目标获取淘宝搜索页面的信息

理解淘宝的搜索接口翻页的处理

技术路线requests-refootnote

代码如下

股票数据定向爬虫

列表内容

爬取网站原则

代码如下

代码优化

Python网络爬虫与信息提取

淘宝商品比价定向爬虫股票数据定向爬虫

1. 淘宝商品比价定向爬虫

功能描述

目标:获取淘宝搜索页面的信息

理解:淘宝的搜索接口翻页的处理

技术路线:requests-re[^footnote].

代码如下:

#CrowTaobaoPrice.py

import requests

import re

def getHTMLText(url):

try:

r = requests.get(url, timeout=30)

r.raise_for_status()

r.encoding = r.apparent_encoding

return r.text

except:

return ""

def parsePage(ilt, html):

try:

plt = re.findall(r'\"view_price\"\:\"[\d\.]*\"',html)

tlt = re.findall(r'\"raw_title\"\:\".*?\"',html)

for i in range(len(plt)):

price = eval(plt[i].split(':')[1])

title = eval(tlt[i].split(':')[1])

ilt.append([price , title])

except:

print("")

def printGoodsList(ilt):

tplt = "{:4}\t{:8}\t{:16}"

print(tplt.format("序号", "价格", "商品名称"))

count = 0

for g in ilt:

count = count + 1

print(tplt.format(count, g[0], g[1]))

def main():

goods = '书包'

depth = 3

start_url = 'https://s.taobao.com/search?q=' + goods

infoList = []

for i in range(depth):

try:

url = start_url + '&s=' + str(44*i)

html = getHTMLText(url)

parsePage(infoList, html)

except:

continue

printGoodsList(infoList)

main()流程图:

步骤1:提交商品搜索请求,循环获取页面

步骤2:对于每个页面,提取商品名称和价格信息

步骤3:将信息输出到屏幕上

Created with Raphaël 2.1.0开始提交商品搜索请求,循环获取页面对于每个页面,提取商品名称和价格信息将信息输出到屏幕上结束

2. 股票数据定向爬虫

1. 列表内容

功能描述目标:获取上交所和深交所所有股票的名称和交易信息

输出:保存到文件中

技术路线:requests-bs4-re

新浪股票:http://finance.sina.com.cn/stock/

百度股票:https://gupiao.baidu.com/stock/

2.爬取网站原则

选取原则:股票信息静态存在于HTML页面中,非js代码生成,没有Robots协议限制选取方法:浏览器F12,源代码查看等

选取心态:不要纠结于某个网站,多找信息源尝试

程序结构如下

Created with Raphaël 2.1.0开始步骤1:从东方财富网获取股票列表步骤2:根据股票列表逐个到百度股票获取个股信息步骤3:将结果存储到文件结束

代码如下

#CrawBaiduStocksA.py

import requests

from bs4 import BeautifulSoup

import traceback

import re

def getHTMLText(url):

try:

r = requests.get(url)

r.raise_for_status()

r.encoding = r.apparent_encoding

return r.text

except:

return ""

def getStockList(lst, stockURL):

html = getHTMLText(stockURL)

soup = BeautifulSoup(html, 'html.parser')

a = soup.find_all('a')

for i in a:

try:

href = i.attrs['href']

lst.append(re.findall(r"[s][hz]\d{6}", href)[0])

except:

continue

def getStockInfo(lst, stockURL, fpath):

for stock in lst:

url = stockURL + stock + ".html"

html = getHTMLText(url)

try:

if html=="":

continue

infoDict = {}

soup = BeautifulSoup(html, 'html.parser')

stockInfo = soup.find('div',attrs={'class':'stock-bets'})

name = stockInfo.find_all(attrs={'class':'bets-name'})[0]

infoDict.update({'股票名称': name.text.split()[0]})

keyList = stockInfo.find_all('dt')

valueList = stockInfo.find_all('dd')

for i in range(len(keyList)):

key = keyList[i].text

val = valueList[i].text

infoDict[key] = val

with open(fpath, 'a', encoding='utf-8') as f:

f.write( str(infoDict) + '\n' )

except:

traceback.print_exc()

continue

def main():

stock_list_url = 'http://quote.eastmoney.com/stocklist.html'

stock_info_url = 'https://gupiao.baidu.com/stock/'

output_file = 'D:/BaiduStockInfo.txt'

slist=[]

getStockList(slist, stock_list_url)

getStockInfo(slist, stock_info_url, output_file)

main()代码优化

1.编码识别优化2.增加动态进度显示

优化后代码如下

import requests

from bs4 import BeautifulSoup

import traceback

import re

def getHTMLText(url, code="utf-8"):

try:

r = requests.get(url)

r.raise_for_status()

r.encoding = code

return r.text

except:

return ""

def getStockList(lst, stockURL):

html = getHTMLText(stockURL, "GB2312")

soup = BeautifulSoup(html, 'html.parser')

a = soup.find_all('a')

for i in a:

try:

href = i.attrs['href']

lst.append(re.findall(r"[s][hz]\d{6}", href)[0])

except:

continue

def getStockInfo(lst, stockURL, fpath):

count = 0

for stock in lst:

url = stockURL + stock + ".html"

html = getHTMLText(url)

try:

if html=="":

continue

infoDict = {}

soup = BeautifulSoup(html, 'html.parser')

stockInfo = soup.find('div',attrs={'class':'stock-bets'})

name = stockInfo.find_all(attrs={'class':'bets-name'})[0]

infoDict.update({'股票名称': name.text.split()[0]})

keyList = stockInfo.find_all('dt')

valueList = stockInfo.find_all('dd')

for i in range(len(keyList)):

key = keyList[i].text

val = valueList[i].text

infoDict[key] = val

with open(fpath, 'a', encoding='utf-8') as f:

f.write( str(infoDict) + '\n' )

count = count + 1

print("\r当前进度: {:.2f}%".format(count*100/len(lst)),end="")

except:

count = count + 1

print("\r当前进度: {:.2f}%".format(count*100/len(lst)),end="")

continue

def main():

stock_list_url = 'http://quote.eastmoney.com/stocklist.html'

stock_info_url = 'https://gupiao.baidu.com/stock/'

output_file = 'D:/BaiduStockInfo.txt'

slist=[]

getStockList(slist, stock_list_url)

getStockInfo(slist, stock_info_url, output_file)

main()来自

Python网络爬虫与信息提取

中国大学mooc

http://www.icourse163.org/learn/BIT-1001870001?tid=1001962001#/learn/content?type=detail&id=1002699548&cid=1003101008

相关文章推荐

- 中国大学MOOC·Python网络爬虫与信息提取(一)

- Python网络爬虫与信息提取-中国大学MOOC

- 中国大学MOOC·Python网络爬虫与信息提取(二)——五个实例分析

- 【MOOC】Python网络爬虫与信息提取-北京理工大学-part 2

- MOOC-Python网络爬虫与信息提取-第二周 BeautifulSoup库入门与信息提取方法

- 【MOOC】Python网络爬虫与信息提取-北京理工大学-part 1

- 【MOOC】Python网络爬虫与信息提取-北京理工大学-part 4

- 【MOOC】Python网络爬虫与信息提取-北京理工大学-part 3

- Python网络爬虫与信息提取-Day10-(实例)中国大学排名定向爬虫

- Python网络爬虫和信息提取(一)

- Python网络爬虫与信息提取(三):网络爬虫之实战

- Python网络爬虫与信息提取(一):网络爬虫之规则

- Python网络爬虫与信息提取(二)—— BeautifulSoup

- Python网络爬虫与信息提取(一)

- Python网络爬虫与信息提取-Day7-基于bs4库的HTML内容遍历方法

- Python网络爬虫与信息提取-Day11-正则表达式的概念和语法

- Python网络爬虫与信息提取(三)—— Re模块

- Python 网络爬虫与信息获取(二)—— 页面内容提取

- Python网络爬虫与信息提取-Day9-信息标记与提取方法

- Python网络爬虫与信息提取 - requests库入门