Hadoop (3): Working with HDFS from Java

2017-03-06 00:00


1. Reading the contents of an HDFS file with Java

1.1 Create a file named hello in the /root/Downloads/ directory and write some content into it

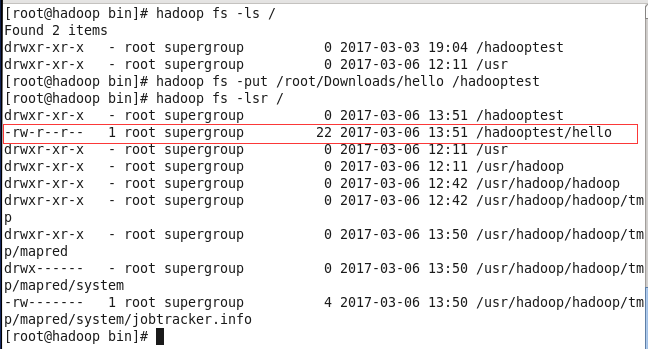

1.2.把hello文件从linux上传到hdfs中

1.3 eclipse中创建java项目并导入jar包(jar包来自hadoop)

1.4 输入如下代码,并执行

2.java创建HDFS文件夹

执行后,指令查询是否有该文件夹:

3.java上传文件到HDFS

4.java下载HDFS文件

5.java删除HDFS文件

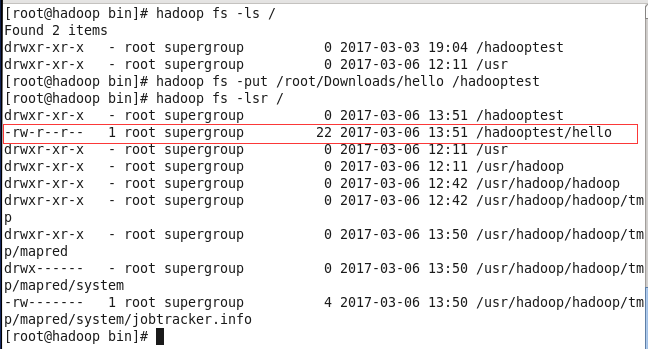

下图第一个红框为删除前,第二个红框为删除后:

1.1.在创建 /root/Downloads/ 目录下创建hello文件,并写入内容

1.2 Upload the hello file from Linux to HDFS
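This step is usually done from the shell with the `hadoop fs -put` command. If you would rather stay in Java, the following is a minimal sketch using `FileSystem.copyFromLocalFile`. It assumes the NameNode address used throughout this article (`hdfs://192.168.80.100:9000`) and the target directory `/hadooptest`, which matches the read example in 1.4. The class `UploadHello` and its `upload` method are hypothetical names chosen for illustration.

```java
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical helper: a Java counterpart of "hadoop fs -put".
public class UploadHello {

    // Copies the local file localFile into targetDir on fs, keeping its name,
    // and returns the destination path.
    public static Path upload(FileSystem fs, String localFile, String targetDir) throws Exception {
        Path dir = new Path(targetDir);
        fs.mkdirs(dir);                           // make sure the target directory exists
        Path src = new Path(localFile);           // no scheme: read from the local disk
        Path dst = new Path(dir, src.getName());  // e.g. /hadooptest/hello
        fs.copyFromLocalFile(src, dst);           // local disk -> fs
        return dst;
    }

    public static void main(String[] args) throws Exception {
        // Assumption: same NameNode address as in the article's examples
        FileSystem fs = FileSystem.get(new URI("hdfs://192.168.80.100:9000"), new Configuration());
        System.out.println("Uploaded to " + upload(fs, "/root/Downloads/hello", "/hadooptest"));
        fs.close();
    }
}
```

Because `upload` takes any `FileSystem`, it can also be tried against the local file system (`FileSystem.getLocal(...)`) without a running cluster.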

1.3 Create a Java project in Eclipse and import the JAR files (taken from the Hadoop distribution)

1.4 Enter the following code and run it:

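```java
import java.io.InputStream;
import java.net.URL;

import org.apache.hadoop.fs.FsUrlStreamHandlerFactory;
import org.apache.hadoop.io.IOUtils;

public class TestHDFSRead {

    public static final String HDFS_PATH = "hdfs://192.168.80.100:9000/hadooptest/hello";

    public static void main(String[] args) throws Exception {
        // Register a handler so that java.net.URL understands hdfs:// URLs.
        // Note: setURLStreamHandlerFactory may only be called once per JVM.
        URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());

        final URL url = new URL(HDFS_PATH);
        final InputStream in = url.openStream();

        // copyBytes(in, out, buffSize, close)
        // The four parameters are: input stream, output stream, buffer size, whether to close the streams
        IOUtils.copyBytes(in, System.out, 1024, true);
    }
}
```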
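The comment in the code above lists the four parameters of `IOUtils.copyBytes(in, out, buffSize, close)`. To make their meaning concrete, here is a plain-Java sketch of the same copy loop. It was written for illustration and is not Hadoop's actual source. The class name `CopyBytesSketch` is hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

public class CopyBytesSketch {

    // Copies everything from in to out through a buffer of buffSize bytes and
    // returns the number of bytes copied. If close is true, both streams are
    // closed afterwards, even when the copy fails.
    public static long copyBytes(InputStream in, OutputStream out, int buffSize, boolean close)
            throws IOException {
        byte[] buf = new byte[buffSize];
        long total = 0;
        try {
            int n;
            while ((n = in.read(buf)) != -1) {   // read at most buffSize bytes per call
                out.write(buf, 0, n);
                total += n;
            }
            out.flush();
        } finally {
            if (close) {
                try {
                    out.close();
                } finally {
                    in.close();                  // close the input even if out.close() fails
                }
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        InputStream in = new ByteArrayInputStream("hello hdfs\n".getBytes("UTF-8"));
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        long n = copyBytes(in, out, 4, true);    // a tiny buffer still copies everything
        System.out.print(out.toString("UTF-8"));
        System.out.println(n + " bytes copied");
    }
}
```

One practical consequence: the example in 1.4 passes `System.out` with `close = true`, so standard output is closed after the copy. Anything printed later in the same program will not appear. Pass `false` if you still need the console afterwards.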

2. Creating an HDFS directory with Java

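```java
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class TestHDFS {

    public static final String HDFS_PATH = "hdfs://192.168.80.100:9000";

    // A leading slash means the path starts at the root "/"; without it, the path
    // is resolved against the user's home directory, e.g. /user/root
    public static final String HadoopTestPath = "/hadooptest2";

    public static void main(String[] args) throws Exception {
        final FileSystem fileSystem = FileSystem.get(new URI(HDFS_PATH), new Configuration());

        // Create the directory (missing parent directories are created too)
        fileSystem.mkdirs(new Path(HadoopTestPath));

        fileSystem.close();
    }
}
```

After running it, check from the command line that the directory now exists (for example with `hadoop fs -ls /`):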
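The same check can also be made from Java. The following is a minimal sketch, assuming the NameNode address used in this article. The class `CheckHDFSDir` and its `isDirectory` helper are hypothetical names. The sketch relies on `FileSystem.exists`, `FileSystem.getFileStatus` and `FileSystem.listStatus`.

```java
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class CheckHDFSDir {

    // Returns true only if path exists on fs and is a directory.
    public static boolean isDirectory(FileSystem fs, Path path) throws Exception {
        return fs.exists(path) && fs.getFileStatus(path).isDirectory();
    }

    public static void main(String[] args) throws Exception {
        // Assumption: same NameNode address as in the article's examples
        FileSystem fs = FileSystem.get(new URI("hdfs://192.168.80.100:9000"), new Configuration());
        Path dir = new Path("/hadooptest2");
        System.out.println(dir + " is a directory: " + isDirectory(fs, dir));

        // List the root directory, similar to what "hadoop fs -ls /" shows
        for (FileStatus status : fs.listStatus(new Path("/"))) {
            System.out.println((status.isDirectory() ? "d " : "- ") + status.getPath());
        }
        fs.close();
    }
}
```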

3. Uploading a file to HDFS with Java

```java
import java.io.FileInputStream;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

public class TestHDFS {

    public static final String HDFS_PATH = "hdfs://192.168.80.100:9000";

    // A leading slash means the path starts at the root "/"; without it, the path
    // is resolved against the user's home directory, e.g. /user/root
    public static final String HadoopFilePath = "/hadooptest2/hello123"; // hello123 is the HDFS file to write

    public static void main(String[] args) throws Exception {
        final FileSystem fileSystem = FileSystem.get(new URI(HDFS_PATH), new Configuration());

        // Upload: create the HDFS file (an existing file is overwritten) ...
        final FSDataOutputStream out = fileSystem.create(new Path(HadoopFilePath));
        // ... and copy the contents of the local file d:/hello2.txt into hello123
        final FileInputStream in = new FileInputStream("d:/hello2.txt");
        IOUtils.copyBytes(in, out, 1024, true);

        fileSystem.close();
    }
}
```
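The comment about the leading slash decides where `HadoopFilePath` ends up. HDFS resolves a relative path against the current user's home directory, `/user/<username>`. The sketch below models that rule with `java.net.URI` so it can be run without a cluster. This is an illustration only, not Hadoop code. The class `HdfsPathResolution` and the user name `root` are assumptions. On a real cluster, `fileSystem.getHomeDirectory()` and `fileSystem.makeQualified(new Path("..."))` show the actual result.

```java
import java.net.URI;

// Hypothetical illustration of absolute vs. relative HDFS paths (not Hadoop code).
public class HdfsPathResolution {

    // Absolute paths (leading '/') start at the root of fsUri; relative paths
    // are resolved against the home directory /user/<userName>/.
    public static String resolve(String fsUri, String userName, String path) {
        URI home = URI.create(fsUri + "/user/" + userName + "/");
        return home.resolve(path).toString();
    }

    public static void main(String[] args) {
        String fs = "hdfs://192.168.80.100:9000";
        System.out.println(resolve(fs, "root", "/hadooptest2/hello123")); // absolute
        System.out.println(resolve(fs, "root", "hadooptest2/hello123"));  // relative
    }
}
```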

4. Downloading an HDFS file with Java

```java
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

public class TestHDFS {

    public static final String HDFS_PATH = "hdfs://192.168.80.100:9000";

    public static final String HadoopFilePath = "/hadooptest2/hello123"; // the file uploaded in step 3

    public static void main(String[] args) throws Exception {
        final FileSystem fileSystem = FileSystem.get(new URI(HDFS_PATH), new Configuration());

        // "Download": open the HDFS file and print its contents to the console
        final FSDataInputStream in = fileSystem.open(new Path(HadoopFilePath));
        IOUtils.copyBytes(in, System.out, 1024, true);

        fileSystem.close();
    }
}
```
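Strictly speaking, the code above reads the HDFS file and prints it to the console instead of saving it. To write the file to local disk, `FileSystem.copyToLocalFile` can be used. The sketch below uses its four-argument form with `useRawLocalFileSystem = true`, so no `.crc` checksum file is written next to the copy. The class `DownloadHDFSFile` and the local target `d:/hello123.txt` are hypothetical.

```java
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class DownloadHDFSFile {

    // Copies src (on fs) to the local path dst and keeps the source file.
    public static void download(FileSystem fs, String src, String dst) throws Exception {
        // delSrc = false: keep the original; useRawLocalFileSystem = true: no .crc file
        fs.copyToLocalFile(false, new Path(src), new Path(dst), true);
    }

    public static void main(String[] args) throws Exception {
        // Assumption: same NameNode address as in the article's examples
        FileSystem fs = FileSystem.get(new URI("hdfs://192.168.80.100:9000"), new Configuration());
        download(fs, "/hadooptest2/hello123", "d:/hello123.txt"); // hypothetical local target
        fs.close();
    }
}
```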

5. Deleting an HDFS file with Java

public class TestHDFS {

public static final String HDFS_PATH="hdfs://192.168.80.100:9000";

//前面有斜线表示从根目录开始,没有斜线从/usr/root开始

public static final String HadoopTestPath="/hadooptest2";

public static final String HadoopFilePath="/hadooptest2/hello123";//hello123为需要写入的文件

public static void main(String[] args) throws Exception {

final FileSystem fileSystem = FileSystem.get(new URI(HDFS_PATH), new Configuration());

//创建文件夹

//fileSystem.mkdirs(new Path(HadoopTestPath));

//上传文件

//final FSDataOutputStream out = fileSystem.create(new Path(HadoopFilePath));

//final FileInputStream in = new FileInputStream("d:/hello2.txt");//hello2.txt文件写入hello123

//IOUtils.copyBytes(in,out, 1024, true);

//下载文件

//final FSDataInputStream in = fileSystem.open(new Path(HadoopFilePath));

//IOUtils.copyBytes(in,System.out, 1024, true);

//删除文件(夹),两个参数 第一个为删除路径,第二个为递归删除

fileSystem.delete(new Path(HadoopFilePath),true);

}

}下图第一个红框为删除前,第二个红框为删除后:
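`FileSystem.delete(path, recursive)` returns a boolean. It returns `false` when there was nothing to delete, for example when the path does not exist. With `recursive = false`, a non-empty directory is not removed; an `IOException` is thrown instead. The following is a small sketch that makes both cases visible. The class `DeleteHDFSPath` and its `remove` helper are hypothetical, and the NameNode address is the one assumed throughout this article.

```java
import java.io.IOException;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class DeleteHDFSPath {

    // Returns true if path was removed and false if there was nothing to delete.
    // With recursive == false, a non-empty directory makes delete() throw an IOException.
    public static boolean remove(FileSystem fs, Path path, boolean recursive) throws IOException {
        boolean removed = fs.delete(path, recursive);
        System.out.println((removed ? "deleted " : "nothing to delete at ") + path);
        return removed;
    }

    public static void main(String[] args) throws Exception {
        // Assumption: same NameNode address as in the article's examples
        FileSystem fs = FileSystem.get(new URI("hdfs://192.168.80.100:9000"), new Configuration());
        Path dir = new Path("/hadooptest2");
        try {
            remove(fs, dir, false);              // fails while the directory still has files
        } catch (IOException e) {
            System.out.println("not deleted: " + e.getMessage());
        }
        remove(fs, dir, true);                   // removes the directory and its contents
        fs.close();
    }
}
```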
