[置顶] Hive编程指南-JDBC连接、脚本执行

2017-02-14 08:48

686 查看

摘要:本文主要讲了如何通过java来连接Hive,以及如何执行hive脚本

2、 hive web界面的 (端口号9999) 启动方式

#hive --service hwi

用于通过浏览器来访问hive http://hadoop0:9999/hwi/ 3、 hive 远程服务 (端口号10000) 启动方式

#hive --service hiveserver

注意:hiveserver不能和hwi服务同时启动使用。

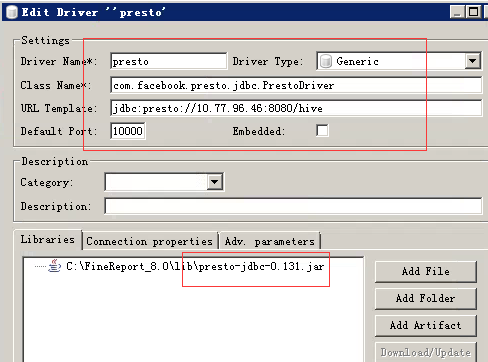

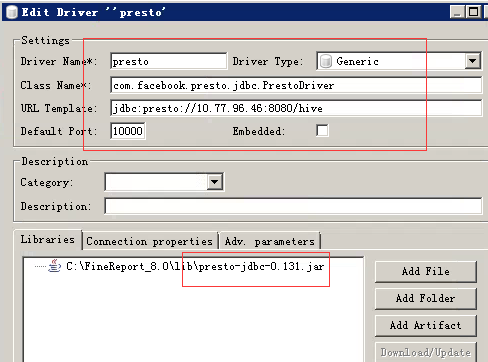

4、使用dbveare工具

需要将presto的jar添加进来并配置连接

HiveServer使用thrift服务来为客户端提供远程连接的访问端口,在JDBC连接Hive之前必须先启动HiveServer。

hive --service hiveserver

hiveserver默认端口是10000,可以使用hive --service hiveserver -p 10002,更改默认启动端口,此端口也是JDBC连接端口。

1、直接通过jdbc

package com.lin.bdp.common.utils;

import java.lang.reflect.Field;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.ResultSetMetaData;

import java.sql.SQLException;

import java.sql.Statement;

import java.util.ArrayList;

import java.util.List;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import com.lin.bdp.common.vo.Visitor;

/**

*

* 功能概要:hive客户端工具

*

* @author linbingwen

* @since 2016年10月20日

*/

public class HiveJdbcClient {

private static final Logger logger = LoggerFactory.getLogger(HiveJdbcClient.class);

public static final char UNDERLINE = '_';

private static String driverName;

private static String url;

private static String user;

private static String password;

private static class LazyHolder {

private static final HiveJdbcClient INSTANCE = new HiveJdbcClient();

}

public static final HiveJdbcClient getInstance() {

return LazyHolder.INSTANCE;

}

/**

* 初始化参数

* @author linbingwen

* @since 2016年10月20日

*/

private void init() {

driverName = ConfigLoader.getProperty("hive.jdbc.driverName");

url = ConfigLoader.getProperty("hive.jdbc.url");

user = ConfigLoader.getProperty("hive.jdbc.user");

password = ConfigLoader.getProperty("hive.jdbc.password");

}

private void initPresto() {

driverName ="com.facebook.presto.jdbc.PrestoDriver";

url = "jdbc:presto://10.78.104.5:8080/hive/ods_uba";

user = "presto";

password = "Admin@123";

}

private HiveJdbcClient() {

init();

}

/**

* 获取连接

* @author linbingwen

* @since 2016年10月20日

* @return

* @throws ClassNotFoundException

* @throws SQLException

*/

private Connection getConnection() throws ClassNotFoundException, SQLException {

Class.forName(driverName);

Connection conn = DriverManager.getConnection(url, user, password);

return conn;

}

/**

* 按条件查找

* @author linbingwen

* @since 2016年10月20日

* @param clazz

* @param sql

* @return

* @throws Exception

*/

public <T> List<T> find(Class<T> clazz, String sql) throws Exception {

if (sql == null || sql.length() == 0) {

logger.warn("查询sql语句不能为空");

return new ArrayList<T>();

}

Connection connection = null;

PreparedStatement preState = null;

ResultSet rs = null;

try {

connection = getConnection();

Statement stmt = connection.createStatement();

rs = stmt.executeQuery(sql);

return (List<T>) handler(clazz, rs);

} catch (Exception e) {

logger.error("sql = {}执行出错,Exception = {}", sql, e.getLocalizedMessage());

throw e;

} finally {

release(connection,preState,rs);

}

}

/**

* 释放资源

*

* @author linbingwen

* @since 2016年8月31日

* @param conn

* @param st

* @param rs

*/

private void release(Connection conn, Statement st, ResultSet rs) {

if (rs != null) {

try {

rs.close();

} catch (Exception e) {

e.printStackTrace();

}

rs = null;

}

if (st != null) {

try {

st.close();

} catch (Exception e) {

e.printStackTrace();

}

st = null;

}

if (conn != null) {

try {

conn.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

/**

* 下划线字段转成陀峰字段

* @author linbingwen

* @since 2016年10月20日

* @param param

* @return

*/

private String underlineToCamel(String param) {

if (param == null || param.isEmpty()) {

return null;

}

int len = param.length();

StringBuilder sb = new StringBuilder(len);

for (int i = 0; i < len; i++) {

char c = param.charAt(i);

if (c == UNDERLINE) {

if (++i < len) {

sb.append(Character.toUpperCase(param.charAt(i)));

}

} else {

sb.append(c);

}

}

return sb.toString();

}

/**

* 组装list对象

* @author linbingwen

* @since 2016年10月20日

* @param clazz

* @param rs

* @return

* @throws Exception

*/

private Object handler(Class clazz, ResultSet rs) throws Exception {

List list = new ArrayList();

try {

while (rs.next()) {

Object bean = clazz.newInstance();

ResultSetMetaData meta = rs.getMetaData();

int count = meta.getColumnCount();

for (int i = 0; i < count; i++) {

String columnName = meta.getColumnName(i + 1);

String name = columnName;

if (columnName.contains(".")) {

String[] split = columnName.split("\\.");

if (split.length != 2) {

throw new Exception("输入的表名不正确!");

}

name =split[1];

}

Object value = rs.getObject(columnName);

name = underlineToCamel(name.toLowerCase());

try {

Field f = bean.getClass().getDeclaredField(name);

if (f != null) {

f.setAccessible(true);

f.set(bean, value);

}

} catch (NoSuchFieldException e) {

logger.error("表中字段:{}在类:{}没有对应的属性", name, clazz);

} catch (IllegalArgumentException e) {

logger.error("表中字段:{}在类:{}对应属性类型不一到", name, clazz);

}

}

list.add(bean);

}

} catch (Exception e) {

throw e;

}

logger.info("hiveHandler successed the total size is:{}",list.size());

return list;

}

@Override

public String toString() {

return "HiveJdbcClient driverName:" + driverName + " url:" + url + " user:" + user + " password:" + password;

}

public List<Table> export() throws ClassNotFoundException, SQLException {

String showtablesSQL = "show tables";

List<Table> tableList = new ArrayList<Table>();

List<String> tableNameList = new ArrayList<String>();

Connection conn = getConnection();

Statement stmt = null;

ResultSet tableRs = null; // 存库元数据

ResultSet colRs = null;//存储表元数据

try {

stmt = conn.createStatement();

stmt.executeQuery("use ods_uba");

//获取表名

tableRs = stmt.executeQuery(showtablesSQL);

while (tableRs.next()) {

String table = tableRs.getString(1);

tableNameList.add(table);

}

//获取表结构

com.lin.bdp.common.utils.Field field = null;

Table table = null;

for (int i = 0; i < tableNameList.size(); i++) {

String descTableSQL = "describe ";

List<com.lin.bdp.common.utils.Field> fieldList = new ArrayList<com.lin.bdp.common.utils.Field>();

descTableSQL = descTableSQL + tableNameList.get(i).trim();//拼接sql

colRs = stmt.executeQuery(descTableSQL);

while (colRs.next()) {

field = new com.lin.bdp.common.utils.Field();

field.setColumnName(colRs.getString(1));

field.setTypeName(colRs.getString(2));//测试大小

fieldList.add(field);

}

table = new Table();

table.setTableName(tableNameList.get(i).trim());

table.setField(fieldList);

System.out.println(table);

tableList.add(table);

}

} catch (SQLException ex) {

} finally {

if (colRs != null) {

try {

colRs.close();

} catch (SQLException ex) {

}

}

if (tableRs != null) {

try {

tableRs.close();

} catch (SQLException ex) {

}

}

if (conn != null) {

try {

conn.close();

} catch (SQLException ex) {

}

}

}

return tableList;

}

public static void main(String[] args) {

HiveJdbcClient hiveJdbcClient = HiveJdbcClient.getInstance();

try {

hiveJdbcClient.export();

} catch (Exception e) {

e.printStackTrace();

}

System.exit(0);

}

}

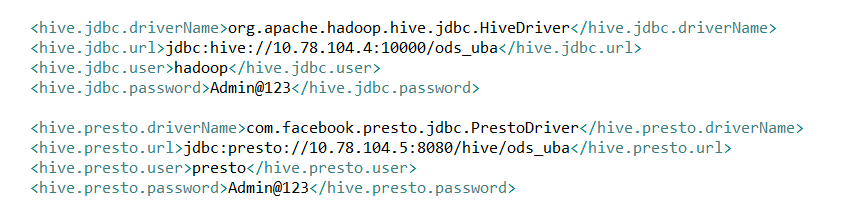

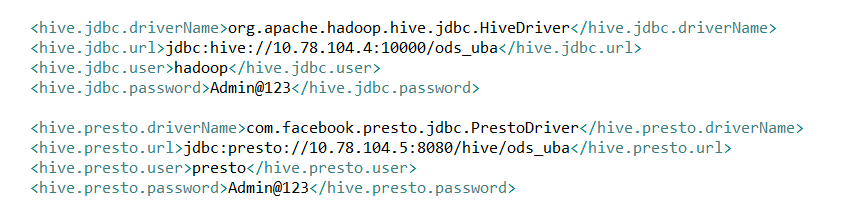

其中对应的配置文件:

对应的field.java

public class Field {

private String columnName;

private String typeName;

private int columnSize;

private int decimal_digits;

private int nullable;

public Field() {

}

public Field(String columnName, String typeName, int columnSize, int decimal_digits, int nullable) {

this.columnName = columnName;

this.typeName = typeName;

this.columnSize = columnSize;

this.decimal_digits = decimal_digits;

this.nullable = nullable;

}

public String getColumnName() {

return columnName;

}

public void setColumnName(String columnName) {

this.columnName = columnName;

}

public String getTypeName() {

return typeName;

}

public void setTypeName(String typeName) {

this.typeName = typeName;

}

public int getColumnSize() {

return columnSize;

}

public void setColumnSize(int columnSize) {

this.columnSize = columnSize;

}

public int getDecimal_digits() {

return decimal_digits;

}

public void setDecimal_digits(int decimal_digits) {

this.decimal_digits = decimal_digits;

}

public int getNullable() {

return nullable;

}

public void setNullable(int nullable) {

this.nullable = nullable;

}

@Override

public String toString() {

return "Field{" + "columnName=" + columnName + ", typeName=" + typeName + ", columnSize=" + columnSize + ", decimal_digits=" + decimal_digits + ", nullable=" + nullable + '}';

}

}

对应的table.java

package com.lin.bdp.common.utils;

import java.util.List;

public class Table {

private String tableName;

private List<Field> field;

public Table() {

}

public Table(String tableName, List<Field> field) {

this.tableName = tableName;

this.field = field;

}

public String getTableName() {

return tableName;

}

public void setTableName(String tableName) {

this.tableName = tableName;

}

public List<Field> getField() {

return field;

}

public void setField(List<Field> field) {

this.field = field;

}

@Override

public String toString() {

return "Table{" + "tableName=" + tableName + ", field=" + field + '}';

}

}

2、直接通过presto

在presto的安装目录下,etc/config.properties 包含 Presto Server 相关的配置,每一个 Presto Server 可以通时作为 coordinator 和 worker 使用。

http-server.http.port=8080这个参数为端口的配置

3、用到的相关jar包

<dependency>

<groupId>com.facebook.presto</groupId>

<artifactId>presto-jdbc</artifactId>

<version>0.75</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>0.7.1-cdh3u6</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop.hive</groupId>

<artifactId>hive-exec</artifactId>

<version>0.7.1-cdh3u6</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop.hive</groupId>

<artifactId>hive-metastore</artifactId>

<version>0.7.1-cdh3u6</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop.hive</groupId>

<artifactId>hive-service</artifactId>

<version>0.7.1-cdh3u6</version>

</dependency>

ive脚本的执行方式大致有三种:

当然也可以将这一行代码放在一个shell脚本中来执行:

如下是删除表里一天分区的记录(分区以opdt = 'yyyy-mm-dd')形式存在

#!/bin/sh

source ~/.bashrc

#清数据

doClean(){

partition=$(echo "$path/opdt=$statisDate")

echo "hdfs dfs -rm -r -f $partition"

hive -e "use ods_uba;ALTER TABLE $tableName DROP PARTITION (opdt='$statisDate');"

hdfs dfs -rm -r -f $partition

}

#统计日期默认取昨天

diffday=15

statisDate=`date +"%Y-%m-%d" -d "-"${diffday}"day"`

path=/hive/warehouse/ods_uba.db/$1

#首先判断是否有输入日期,以及输入日期是否合法,如果合法,取输入的日期。不合法报错,输入日期为空,取昨天

if [ $# -eq 0 ]; then

echo "您没有输入参数,请至少输入表名kafka_appchnl_source_log/kafka_web_source_log"

elif [ $# -eq 1 ]; then

echo "您输入的第一个参数为: $1 "

tableName=$1

if [[ "$tableName" == "kafka_appchnl_source_log" ]] || [[ "$tableName" == "kafka_web_source_log" ]]; then

echo "要清除的表名是:$tableName,路径:$path,清除分区的日期:$statisDate"

doClean $tableName $path $statisDate

else

echo "您输入的表名有错,请输入表名kafka_appchnl_source_log/kafka_web_source_log"

fi

elif [ $# -eq 2 ]; then

echo "您输入的第一个参数为: $1,第二个参数 :$2"

tableName=$1

statisDate=$2

if [[ "$tableName" == "kafka_appchnl_source_log" ]] || [[ "$tableName" == "kafka_web_source_log" ]]; then

echo "要清除的表名是:$tableName,路径:$path"

if [ ${#statisDate} -eq 10 ];then

echo "清除分区的日期:$statisDate"

doClean $tableName $path $statisDate

else

echo "您输入日期不合法,请输入yyyy-mm-dd类型参数"

fi

else

echo "您输入的表名有错,请输入表名kafka_appchnl_source_log/kafka_web_source_log"

fi

else

echo "您输入参数多于2个,请重新输入"

fi

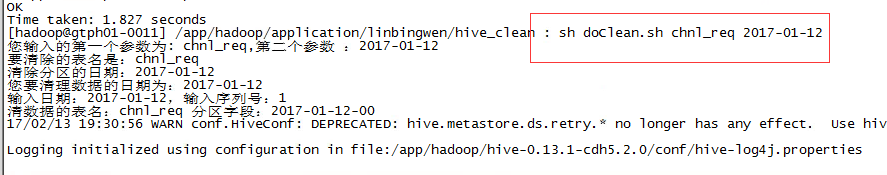

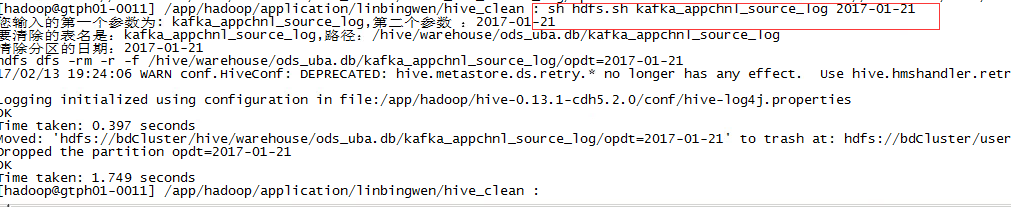

执行脚本:

新建一个clean.sql内容如下:

use ods_uba;

ALTER TABLE ${hiveconf:tableName} DROP PARTITION (partition_time='${hiveconf:partitionTime}');

执行如下语句就可以删除一个分区

hive -hiveconf tableName=lin -hiveconf partitionTime='2016-01-23' -f clean.sql

这里hiveconf可以用来传递参数

最后,也可以将shell脚本和sql脚本结合一起用。

新建一个如下doClean.sh

#!/bin/sh

. ~/.bashrc

#清数据,注意要传入一个yyyy-mm-dd类型参数

cleanData(){

echo "您要清理数据的日期为:$statisDate"

#生成每小时的查询条件

for i in `seq 24`

do

num=$(echo $i)

doClean $tableName $statisDate $num

done

}

#清数据

doClean(){

echo "输入日期:$statisDate,输入序列号:$num"

timeZone=$(printf "%02d\n" $(expr "$num" - "1"))

partitionTime=$(echo "$statisDate-$timeZone")

echo "清数据的表名:$tableName 分区字段:$partitionTime"

hive -hiveconf tableName=$tableName -hiveconf partitionTime=$partitionTime -f clean.sql

}

#统计日期默认取昨天

diffday=1

statisDate=`date +"%Y-%m-%d" -d "-"${diffday}"day"`

#首先判断是否有输入日期,以及输入日期是否合法,如果合法,取输入的日期。不合法报错,输入日期为空,取昨天

if [ $# -eq 0 ]; then

echo "您没有输入参数,请至少输入表名visit_log/evt_log/chnl_req"

elif [ $# -eq 1 ]; then

echo "您输入的第一个参数为: $1 "

tableName=$1

if [[ "$tableName" == "visit_log" ]] || [[ "$tableName" == "evt_log" ]] || [[ "$tableName" == "chnl_req" ]]; then

echo "要清除的表名是:$tableName,清除分区的日期:$statisDate"

cleanData $tableName $statisDate

else

echo "您输入的表名有错,请输入表名visit_log/evt_log/chnl_req"

fi

elif [ $# -eq 2 ]; then

echo "您输入的第一个参数为: $1,第二个参数 :$2"

tableName=$1

statisDate=$2

if [[ "$tableName" == "visit_log" ]] || [[ "$tableName" == "evt_log" ]] || [[ "$tableName" == "chnl_req" ]]; then

echo "要清除的表名是:$tableName"

if [ ${#statisDate} -eq 10 ];then

echo "清除分区的日期:$statisDate"

cleanData $tableName $statisDate

else

echo "您输入日期不合法,请输入yyyy-mm-dd类型参数"

fi

else

echo "您输入的表名有错,请输入表名visit_log/evt_log/chnl_req"

fi

else

echo "您输入参数多于2个,请重新输入"

fi

这里将一天分成了24个区,所以要删除一天的数据需要删除24个分区,分区以(yyyy-mm-dd-ss)

运行时使用:

一、Hive连接

1.1、通过shell

1、hive 命令行模式,直接输入#/hive/bin/hive的执行程序,或者输入#hive --service cli

2、 hive web界面的 (端口号9999) 启动方式

#hive --service hwi

用于通过浏览器来访问hive http://hadoop0:9999/hwi/ 3、 hive 远程服务 (端口号10000) 启动方式

#hive --service hiveserver

注意:hiveserver不能和hwi服务同时启动使用。

4、使用dbveare工具

需要将presto的jar添加进来并配置连接

1.2 通过java代码

使用java代码来连接hive时,驱动可以选择使用jdbc,也可以选择使用prestoHiveServer使用thrift服务来为客户端提供远程连接的访问端口,在JDBC连接Hive之前必须先启动HiveServer。

hive --service hiveserver

hiveserver默认端口是10000,可以使用hive --service hiveserver -p 10002,更改默认启动端口,此端口也是JDBC连接端口。

1、直接通过jdbc

package com.lin.bdp.common.utils;

import java.lang.reflect.Field;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.ResultSetMetaData;

import java.sql.SQLException;

import java.sql.Statement;

import java.util.ArrayList;

import java.util.List;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import com.lin.bdp.common.vo.Visitor;

/**

*

* 功能概要:hive客户端工具

*

* @author linbingwen

* @since 2016年10月20日

*/

public class HiveJdbcClient {

private static final Logger logger = LoggerFactory.getLogger(HiveJdbcClient.class);

public static final char UNDERLINE = '_';

private static String driverName;

private static String url;

private static String user;

private static String password;

private static class LazyHolder {

private static final HiveJdbcClient INSTANCE = new HiveJdbcClient();

}

public static final HiveJdbcClient getInstance() {

return LazyHolder.INSTANCE;

}

/**

* 初始化参数

* @author linbingwen

* @since 2016年10月20日

*/

private void init() {

driverName = ConfigLoader.getProperty("hive.jdbc.driverName");

url = ConfigLoader.getProperty("hive.jdbc.url");

user = ConfigLoader.getProperty("hive.jdbc.user");

password = ConfigLoader.getProperty("hive.jdbc.password");

}

private void initPresto() {

driverName ="com.facebook.presto.jdbc.PrestoDriver";

url = "jdbc:presto://10.78.104.5:8080/hive/ods_uba";

user = "presto";

password = "Admin@123";

}

private HiveJdbcClient() {

init();

}

/**

* 获取连接

* @author linbingwen

* @since 2016年10月20日

* @return

* @throws ClassNotFoundException

* @throws SQLException

*/

private Connection getConnection() throws ClassNotFoundException, SQLException {

Class.forName(driverName);

Connection conn = DriverManager.getConnection(url, user, password);

return conn;

}

/**

* 按条件查找

* @author linbingwen

* @since 2016年10月20日

* @param clazz

* @param sql

* @return

* @throws Exception

*/

public <T> List<T> find(Class<T> clazz, String sql) throws Exception {

if (sql == null || sql.length() == 0) {

logger.warn("查询sql语句不能为空");

return new ArrayList<T>();

}

Connection connection = null;

PreparedStatement preState = null;

ResultSet rs = null;

try {

connection = getConnection();

Statement stmt = connection.createStatement();

rs = stmt.executeQuery(sql);

return (List<T>) handler(clazz, rs);

} catch (Exception e) {

logger.error("sql = {}执行出错,Exception = {}", sql, e.getLocalizedMessage());

throw e;

} finally {

release(connection,preState,rs);

}

}

/**

* 释放资源

*

* @author linbingwen

* @since 2016年8月31日

* @param conn

* @param st

* @param rs

*/

private void release(Connection conn, Statement st, ResultSet rs) {

if (rs != null) {

try {

rs.close();

} catch (Exception e) {

e.printStackTrace();

}

rs = null;

}

if (st != null) {

try {

st.close();

} catch (Exception e) {

e.printStackTrace();

}

st = null;

}

if (conn != null) {

try {

conn.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

/**

* 下划线字段转成陀峰字段

* @author linbingwen

* @since 2016年10月20日

* @param param

* @return

*/

private String underlineToCamel(String param) {

if (param == null || param.isEmpty()) {

return null;

}

int len = param.length();

StringBuilder sb = new StringBuilder(len);

for (int i = 0; i < len; i++) {

char c = param.charAt(i);

if (c == UNDERLINE) {

if (++i < len) {

sb.append(Character.toUpperCase(param.charAt(i)));

}

} else {

sb.append(c);

}

}

return sb.toString();

}

/**

* 组装list对象

* @author linbingwen

* @since 2016年10月20日

* @param clazz

* @param rs

* @return

* @throws Exception

*/

private Object handler(Class clazz, ResultSet rs) throws Exception {

List list = new ArrayList();

try {

while (rs.next()) {

Object bean = clazz.newInstance();

ResultSetMetaData meta = rs.getMetaData();

int count = meta.getColumnCount();

for (int i = 0; i < count; i++) {

String columnName = meta.getColumnName(i + 1);

String name = columnName;

if (columnName.contains(".")) {

String[] split = columnName.split("\\.");

if (split.length != 2) {

throw new Exception("输入的表名不正确!");

}

name =split[1];

}

Object value = rs.getObject(columnName);

name = underlineToCamel(name.toLowerCase());

try {

Field f = bean.getClass().getDeclaredField(name);

if (f != null) {

f.setAccessible(true);

f.set(bean, value);

}

} catch (NoSuchFieldException e) {

logger.error("表中字段:{}在类:{}没有对应的属性", name, clazz);

} catch (IllegalArgumentException e) {

logger.error("表中字段:{}在类:{}对应属性类型不一到", name, clazz);

}

}

list.add(bean);

}

} catch (Exception e) {

throw e;

}

logger.info("hiveHandler successed the total size is:{}",list.size());

return list;

}

@Override

public String toString() {

return "HiveJdbcClient driverName:" + driverName + " url:" + url + " user:" + user + " password:" + password;

}

public List<Table> export() throws ClassNotFoundException, SQLException {

String showtablesSQL = "show tables";

List<Table> tableList = new ArrayList<Table>();

List<String> tableNameList = new ArrayList<String>();

Connection conn = getConnection();

Statement stmt = null;

ResultSet tableRs = null; // 存库元数据

ResultSet colRs = null;//存储表元数据

try {

stmt = conn.createStatement();

stmt.executeQuery("use ods_uba");

//获取表名

tableRs = stmt.executeQuery(showtablesSQL);

while (tableRs.next()) {

String table = tableRs.getString(1);

tableNameList.add(table);

}

//获取表结构

com.lin.bdp.common.utils.Field field = null;

Table table = null;

for (int i = 0; i < tableNameList.size(); i++) {

String descTableSQL = "describe ";

List<com.lin.bdp.common.utils.Field> fieldList = new ArrayList<com.lin.bdp.common.utils.Field>();

descTableSQL = descTableSQL + tableNameList.get(i).trim();//拼接sql

colRs = stmt.executeQuery(descTableSQL);

while (colRs.next()) {

field = new com.lin.bdp.common.utils.Field();

field.setColumnName(colRs.getString(1));

field.setTypeName(colRs.getString(2));//测试大小

fieldList.add(field);

}

table = new Table();

table.setTableName(tableNameList.get(i).trim());

table.setField(fieldList);

System.out.println(table);

tableList.add(table);

}

} catch (SQLException ex) {

} finally {

if (colRs != null) {

try {

colRs.close();

} catch (SQLException ex) {

}

}

if (tableRs != null) {

try {

tableRs.close();

} catch (SQLException ex) {

}

}

if (conn != null) {

try {

conn.close();

} catch (SQLException ex) {

}

}

}

return tableList;

}

public static void main(String[] args) {

HiveJdbcClient hiveJdbcClient = HiveJdbcClient.getInstance();

try {

hiveJdbcClient.export();

} catch (Exception e) {

e.printStackTrace();

}

System.exit(0);

}

}

其中对应的配置文件:

对应的field.java

public class Field {

private String columnName;

private String typeName;

private int columnSize;

private int decimal_digits;

private int nullable;

public Field() {

}

public Field(String columnName, String typeName, int columnSize, int decimal_digits, int nullable) {

this.columnName = columnName;

this.typeName = typeName;

this.columnSize = columnSize;

this.decimal_digits = decimal_digits;

this.nullable = nullable;

}

public String getColumnName() {

return columnName;

}

public void setColumnName(String columnName) {

this.columnName = columnName;

}

public String getTypeName() {

return typeName;

}

public void setTypeName(String typeName) {

this.typeName = typeName;

}

public int getColumnSize() {

return columnSize;

}

public void setColumnSize(int columnSize) {

this.columnSize = columnSize;

}

public int getDecimal_digits() {

return decimal_digits;

}

public void setDecimal_digits(int decimal_digits) {

this.decimal_digits = decimal_digits;

}

public int getNullable() {

return nullable;

}

public void setNullable(int nullable) {

this.nullable = nullable;

}

@Override

public String toString() {

return "Field{" + "columnName=" + columnName + ", typeName=" + typeName + ", columnSize=" + columnSize + ", decimal_digits=" + decimal_digits + ", nullable=" + nullable + '}';

}

}

对应的table.java

package com.lin.bdp.common.utils;

import java.util.List;

public class Table {

private String tableName;

private List<Field> field;

public Table() {

}

public Table(String tableName, List<Field> field) {

this.tableName = tableName;

this.field = field;

}

public String getTableName() {

return tableName;

}

public void setTableName(String tableName) {

this.tableName = tableName;

}

public List<Field> getField() {

return field;

}

public void setField(List<Field> field) {

this.field = field;

}

@Override

public String toString() {

return "Table{" + "tableName=" + tableName + ", field=" + field + '}';

}

}

2、直接通过presto

package com.lin.bdp.common.utils;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.ResultSet;

import java.sql.ResultSetMetaData;

import java.sql.SQLException;

import java.sql.Statement;

public class HivePrestoClient {

public static void main(String[] args) throws SQLException, ClassNotFoundException{

//jdbc:presto://cdh1:8080/hive/sales

Class.forName("com.facebook.presto.jdbc.PrestoDriver");

Connection connection = DriverManager.getConnection("jdbc:presto://10.78.104.5:8080/hive/ods_uba","presto","Admin@123");

// connection.setCatalog("hive");

Statement stmt = connection.createStatement();

ResultSet rs = stmt.executeQuery("select * from ods_uba.visitor_lin limit 10");

// ResultSet rs = stmt.executeQuery("select count(*) uuid,lst_visit_chnl lst_visit_chnl from ods_uba.visitor_lin group by lst_visit_chnl");

while (rs.next()) {

ResultSetMetaData meta = rs.getMetaData();

int count = meta.getColumnCount();

for (int i = 0; i < count; i++) {

String name = meta.getColumnName(i + 1);

Object value = rs.getObject(name);

System.out.println(name + ":" + value);

}

}

rs.close();

connection.close();

System.out.println("exit");

// System.exit(0);

}

}在presto的安装目录下,etc/config.properties 包含 Presto Server 相关的配置,每一个 Presto Server 可以通时作为 coordinator 和 worker 使用。

http-server.http.port=8080这个参数为端口的配置

3、用到的相关jar包

<dependency>

<groupId>com.facebook.presto</groupId>

<artifactId>presto-jdbc</artifactId>

<version>0.75</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>0.7.1-cdh3u6</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop.hive</groupId>

<artifactId>hive-exec</artifactId>

<version>0.7.1-cdh3u6</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop.hive</groupId>

<artifactId>hive-metastore</artifactId>

<version>0.7.1-cdh3u6</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop.hive</groupId>

<artifactId>hive-service</artifactId>

<version>0.7.1-cdh3u6</version>

</dependency>

二、Hive脚本执行

Hive也可以直接执行sql脚本,或者通过shell脚本来调用sql脚本ive脚本的执行方式大致有三种:

1. hive控制台执行

比较简单,不再多说;2. hive -e "SQL"执行

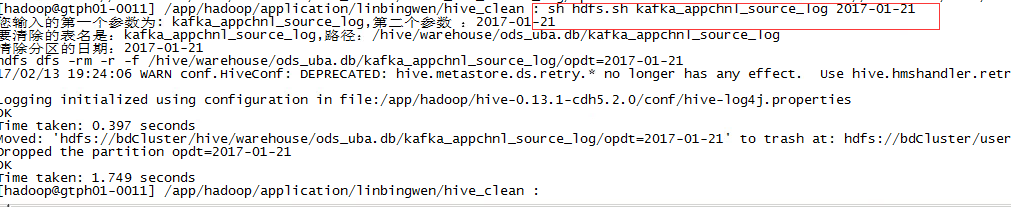

hive -e "use ods_uba;ALTER TABLE lin DROP PARTITION (opdt='2016-02-04');当然也可以将这一行代码放在一个shell脚本中来执行:

如下是删除表里一天分区的记录(分区以opdt = 'yyyy-mm-dd')形式存在

#!/bin/sh

source ~/.bashrc

#清数据

doClean(){

partition=$(echo "$path/opdt=$statisDate")

echo "hdfs dfs -rm -r -f $partition"

hive -e "use ods_uba;ALTER TABLE $tableName DROP PARTITION (opdt='$statisDate');"

hdfs dfs -rm -r -f $partition

}

#统计日期默认取昨天

diffday=15

statisDate=`date +"%Y-%m-%d" -d "-"${diffday}"day"`

path=/hive/warehouse/ods_uba.db/$1

#首先判断是否有输入日期,以及输入日期是否合法,如果合法,取输入的日期。不合法报错,输入日期为空,取昨天

if [ $# -eq 0 ]; then

echo "您没有输入参数,请至少输入表名kafka_appchnl_source_log/kafka_web_source_log"

elif [ $# -eq 1 ]; then

echo "您输入的第一个参数为: $1 "

tableName=$1

if [[ "$tableName" == "kafka_appchnl_source_log" ]] || [[ "$tableName" == "kafka_web_source_log" ]]; then

echo "要清除的表名是:$tableName,路径:$path,清除分区的日期:$statisDate"

doClean $tableName $path $statisDate

else

echo "您输入的表名有错,请输入表名kafka_appchnl_source_log/kafka_web_source_log"

fi

elif [ $# -eq 2 ]; then

echo "您输入的第一个参数为: $1,第二个参数 :$2"

tableName=$1

statisDate=$2

if [[ "$tableName" == "kafka_appchnl_source_log" ]] || [[ "$tableName" == "kafka_web_source_log" ]]; then

echo "要清除的表名是:$tableName,路径:$path"

if [ ${#statisDate} -eq 10 ];then

echo "清除分区的日期:$statisDate"

doClean $tableName $path $statisDate

else

echo "您输入日期不合法,请输入yyyy-mm-dd类型参数"

fi

else

echo "您输入的表名有错,请输入表名kafka_appchnl_source_log/kafka_web_source_log"

fi

else

echo "您输入参数多于2个,请重新输入"

fi

执行脚本:

3. hive -f SQL文件执行

如果sql都放在.sql文件中呢?那就用hive -f新建一个clean.sql内容如下:

use ods_uba;

ALTER TABLE ${hiveconf:tableName} DROP PARTITION (partition_time='${hiveconf:partitionTime}');

执行如下语句就可以删除一个分区

hive -hiveconf tableName=lin -hiveconf partitionTime='2016-01-23' -f clean.sql

这里hiveconf可以用来传递参数

最后,也可以将shell脚本和sql脚本结合一起用。

新建一个如下doClean.sh

#!/bin/sh

. ~/.bashrc

#清数据,注意要传入一个yyyy-mm-dd类型参数

cleanData(){

echo "您要清理数据的日期为:$statisDate"

#生成每小时的查询条件

for i in `seq 24`

do

num=$(echo $i)

doClean $tableName $statisDate $num

done

}

#清数据

doClean(){

echo "输入日期:$statisDate,输入序列号:$num"

timeZone=$(printf "%02d\n" $(expr "$num" - "1"))

partitionTime=$(echo "$statisDate-$timeZone")

echo "清数据的表名:$tableName 分区字段:$partitionTime"

hive -hiveconf tableName=$tableName -hiveconf partitionTime=$partitionTime -f clean.sql

}

#统计日期默认取昨天

diffday=1

statisDate=`date +"%Y-%m-%d" -d "-"${diffday}"day"`

#首先判断是否有输入日期,以及输入日期是否合法,如果合法,取输入的日期。不合法报错,输入日期为空,取昨天

if [ $# -eq 0 ]; then

echo "您没有输入参数,请至少输入表名visit_log/evt_log/chnl_req"

elif [ $# -eq 1 ]; then

echo "您输入的第一个参数为: $1 "

tableName=$1

if [[ "$tableName" == "visit_log" ]] || [[ "$tableName" == "evt_log" ]] || [[ "$tableName" == "chnl_req" ]]; then

echo "要清除的表名是:$tableName,清除分区的日期:$statisDate"

cleanData $tableName $statisDate

else

echo "您输入的表名有错,请输入表名visit_log/evt_log/chnl_req"

fi

elif [ $# -eq 2 ]; then

echo "您输入的第一个参数为: $1,第二个参数 :$2"

tableName=$1

statisDate=$2

if [[ "$tableName" == "visit_log" ]] || [[ "$tableName" == "evt_log" ]] || [[ "$tableName" == "chnl_req" ]]; then

echo "要清除的表名是:$tableName"

if [ ${#statisDate} -eq 10 ];then

echo "清除分区的日期:$statisDate"

cleanData $tableName $statisDate

else

echo "您输入日期不合法,请输入yyyy-mm-dd类型参数"

fi

else

echo "您输入的表名有错,请输入表名visit_log/evt_log/chnl_req"

fi

else

echo "您输入参数多于2个,请重新输入"

fi

这里将一天分成了24个区,所以要删除一天的数据需要删除24个分区,分区以(yyyy-mm-dd-ss)

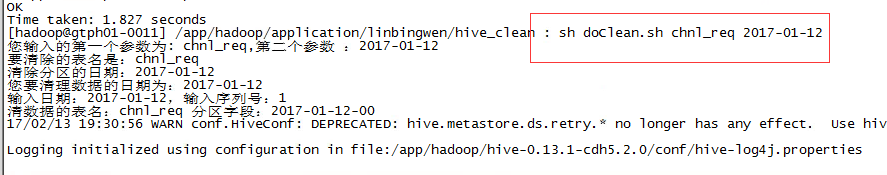

运行时使用:

相关文章推荐

- Maven java通过jdbc连接Hive 执行HQL语句

- hive编程-JDBC方式连接hive

- [置顶] Hive编程指南-表创建、内部表、外部表

- [置顶] Hive:用Java代码通过JDBC连接Hiveserver

- [置顶] Hive编程指南-数据类型、DDL、DML、常用字符串函数

- Hive编程入门总结-用Java代码通过JDBC连接Hiveserver

- [置顶] Hive编程指南-Spark操作Hive

- [置顶] 【R语言 连接数据库 】RMySQL数据库编程指南

- php连接hive执行sql查询

- 在另一个应用程序域中执行代码(C# 编程指南)

- .net连接数据库,执行sql脚本

- 22---数库编程14(jdbc连接oracle)

- Linux下通过EXPECT脚本自动执行ssh远程连接

- 通过JDBC驱动连接Hive操作实例

- 关于Hibernate获取JDBC连接 直接执行SQL

- Hive的JDBC连接方法

- Hive的JDBC连接方法分享与教程

- java 通过jdbc驱动连接hive操作

- hive 三种启动方式及用途,关注通过jdbc连接的启动

- Hive的JDBC连接