How to define custom networks and custom layers in Caffe (Python) (4)

2016-11-23 17:59


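I just noticed there are still two or three official demos left, so let's work through them to learn more about using Caffe from Python. After the previous parts, the rest is fairly straightforward.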

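This official example looks rather simple, but it already calls code the authors wrote themselves (a Python data layer and some helper tools) from Python.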

Go into the pycaffe directory and look at how the code is laid out by running `tree > tree.txt` in a terminal (install tree first if you don't have it):

```
.
├── caffenet.py
├── layers
│   ├── pascal_multilabel_datalayers.py
│   ├── pascal_multilabel_datalayers.pyc
│   ├── pascal_multilabel_with_datalayer
│   │   ├── solver.prototxt
│   │   ├── trainnet.prototxt
│   │   └── valnet.prototxt
│   └── pyloss.py
├── linreg.prototxt
├── multilabel.py
├── multilabel.py~
├── tools.py
├── tools.pyc
└── tree.txt

2 directories, 13 files
```

Now let's see how to run this demo. First, download the VOC2012 dataset (the script below expects it under data/VOC2012 in the Caffe root).
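
In this demo the `label` blob holds, for each image, a 20-entry 0/1 vector with one slot per PASCAL class (the net ends in a 20-output `score` layer followed by `SigmoidCrossEntropyLoss`). As a rough illustration of where such a vector can come from, here is a minimal sketch that builds one from a VOC-style XML annotation. The helper `annotation_to_multilabel` and the inline XML sample are hypothetical, and this is not necessarily how `pascal_multilabel_datalayers.py` does it.

```python
import xml.etree.ElementTree as ET

import numpy as np

# The 20 PASCAL VOC classes, in the same order as `classes` in multilabel.py.
CLASSES = ['aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus', 'car',
           'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse', 'motorbike',
           'person', 'pottedplant', 'sheep', 'sofa', 'train', 'tvmonitor']


def annotation_to_multilabel(xml_text):
    """Hypothetical helper: VOC-style annotation (XML string) -> 20-dim 0/1 vector.

    A class gets a 1 if at least one <object> with that <name> appears,
    no matter how many times it appears.
    """
    root = ET.fromstring(xml_text)
    label = np.zeros(len(CLASSES), dtype=np.float32)
    for obj in root.findall('object'):
        name = obj.find('name').text.strip().lower()
        label[CLASSES.index(name)] = 1.0
    return label


# Toy annotation (made up): one horse and two people.
sample = """<annotation>
  <filename>example.jpg</filename>
  <object><name>horse</name></object>
  <object><name>person</name></object>
  <object><name>person</name></object>
</annotation>"""

vec = annotation_to_multilabel(sample)
print(vec.nonzero()[0])  # [12 14] -> 'horse', 'person'
```

Note that the two people collapse into a single 1: a multi-label target only records presence, not counts.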

multilabel.py is my own script:

```python
import os
import os.path as osp
import sys
from copy import copy

import matplotlib.pyplot as plt
import numpy as np
import pylab

caffe_root = '/home/x/git/caffe/'  # adjust to your own Caffe root
sys.path.insert(0, caffe_root + 'python')

import caffe  # If you get "No module named _caffe", either you have not built pycaffe or you have the wrong path.
from caffe import layers as L, params as P  # Shortcuts to define the net prototxt.

sys.path.append("/home/x/git/caffe/examples/pycaffe/layers")  # the datalayers we will use are in this directory.
sys.path.append("/home/x/git/caffe/examples/pycaffe")  # the tools file is in this folder

import tools  # this contains some tools that we need

plt.rcParams['figure.figsize'] = (6, 6)

# set data root directory, e.g:
pascal_root = osp.join(caffe_root, 'data/VOC2012')

# these are the PASCAL classes, we'll need them later.
classes = np.asarray(['aeroplane', 'bicycle', 'bird', 'boat', 'bottle',
                      'bus', 'car', 'cat', 'chair', 'cow',
                      'diningtable', 'dog', 'horse', 'motorbike', 'person',
                      'pottedplant', 'sheep', 'sofa', 'train', 'tvmonitor'])

# initialize caffe for gpu mode (use caffe.set_mode_cpu() without a GPU)
caffe.set_mode_gpu()
caffe.set_device(0)

############################### Define network prototxts

# helper function for common structures: convolution followed by in-place ReLU
def conv_relu(bottom, ks, nout, stride=1, pad=0, group=1):
    conv = L.Convolution(bottom, kernel_size=ks, stride=stride,
                         num_output=nout, pad=pad, group=group)
    return conv, L.ReLU(conv, in_place=True)


# another helper function: fully connected layer followed by in-place ReLU
def fc_relu(bottom, nout):
    fc = L.InnerProduct(bottom, num_output=nout)
    return fc, L.ReLU(fc, in_place=True)


# yet another helper function: max pooling
def max_pool(bottom, ks, stride=1):
    return L.Pooling(bottom, pool=P.Pooling.MAX, kernel_size=ks, stride=stride)


# main netspec wrapper
def caffenet_multilabel(data_layer_params, datalayer):
    # setup the python data layer; its parameters are passed as a string
    n = caffe.NetSpec()
    n.data, n.label = L.Python(module='pascal_multilabel_datalayers', layer=datalayer,
                               ntop=2, param_str=str(data_layer_params))

    # the net itself (CaffeNet)
    n.conv1, n.relu1 = conv_relu(n.data, 11, 96, stride=4)
    n.pool1 = max_pool(n.relu1, 3, stride=2)
    n.norm1 = L.LRN(n.pool1, local_size=5, alpha=1e-4, beta=0.75)
    n.conv2, n.relu2 = conv_relu(n.norm1, 5, 256, pad=2, group=2)
    n.pool2 = max_pool(n.relu2, 3, stride=2)
    n.norm2 = L.LRN(n.pool2, local_size=5, alpha=1e-4, beta=0.75)
    n.conv3, n.relu3 = conv_relu(n.norm2, 3, 384, pad=1)
    n.conv4, n.relu4 = conv_relu(n.relu3, 3, 384, pad=1, group=2)
    n.conv5, n.relu5 = conv_relu(n.relu4, 3, 256, pad=1, group=2)
    n.pool5 = max_pool(n.relu5, 3, stride=2)
    n.fc6, n.relu6 = fc_relu(n.pool5, 4096)
    n.drop6 = L.Dropout(n.relu6, in_place=True)
    n.fc7, n.relu7 = fc_relu(n.drop6, 4096)
    n.drop7 = L.Dropout(n.relu7, in_place=True)
    n.score = L.InnerProduct(n.drop7, num_output=20)  # one score per PASCAL class
    n.loss = L.SigmoidCrossEntropyLoss(n.score, n.label)  # multi-label loss

    return str(n.to_proto())
```
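
The last layer, `SigmoidCrossEntropyLoss`, treats each of the 20 scores as an independent yes/no logit rather than picking one class with a softmax. A NumPy sketch of that loss follows, assuming the sum is averaged over the batch (the normalization may differ between Caffe versions); `sigmoid_cross_entropy` and `naive_sigmoid_cross_entropy` are hypothetical helpers, not pycaffe functions.

```python
import numpy as np


def sigmoid_cross_entropy(scores, labels):
    """Sigmoid cross-entropy for multi-label targets, averaged over the batch.

    scores: (N, C) raw logits, like the `score` blob (num_output=20).
    labels: (N, C) 0/1 targets, like the `label` blob.
    Uses the numerically stable form max(x, 0) - x*t + log(1 + exp(-|x|)),
    so large |x| never overflows exp(). Dividing by N is an assumption.
    """
    x = np.asarray(scores, dtype=np.float64)
    t = np.asarray(labels, dtype=np.float64)
    per_entry = np.maximum(x, 0) - x * t + np.log1p(np.exp(-np.abs(x)))
    return per_entry.sum() / x.shape[0]


def naive_sigmoid_cross_entropy(scores, labels):
    # Textbook form -[t*log(p) + (1-t)*log(1-p)]; only safe for moderate logits.
    p = 1.0 / (1.0 + np.exp(-scores))
    return -(labels * np.log(p) + (1 - labels) * np.log(1 - p)).sum() / scores.shape[0]


rng = np.random.default_rng(0)
scores = rng.normal(size=(4, 20))
labels = (rng.random((4, 20)) > 0.8).astype(np.float64)
print(sigmoid_cross_entropy(scores, labels))
print(naive_sigmoid_cross_entropy(scores, labels))  # same value up to rounding
```

The stable form is the same expression rearranged so that `exp` is only ever applied to a non-positive number; the naive version is kept only for comparison.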

workdir = '/home/x/git/caffe/examples/pycaffe/layers/pascal_multilabel_with_datalayer'

if not os.path.isdir(workdir):

os.makedirs(workdir)

solverprototxt = tools.CaffeSolver(trainnet_prototxt_path = osp.join(workdir, "trainnet.prototxt"), testnet_prototxt_path = osp.join(workdir, "valnet.prototxt"))

solverprototxt.sp['display'] = "1"

solverprototxt.sp['base_lr'] = "0.0001"

solverprototxt.write(osp.join(workdir, 'solver.prototxt'))

# write train net.

with open(osp.join(workdir, 'trainnet.prototxt'), 'w') as f:

# provide parameters to the data layer as a python dictionary. Easy as pie!

data_layer_params = dict(batch_size = 128, im_shape = [227, 227], split = 'train', pascal_root = pascal_root)

f.write(caffenet_multilabel(data_layer_params, 'PascalMultilabelDataLayerSync'))

# write validation net.

with open(osp.join(workdir, 'valnet.prototxt'), 'w') as f:

data_layer_params = dict(batch_size = 128, im_shape = [227, 227], split = 'val', pascal_root = pascal_root)

f.write(caffenet_multilabel(data_layer_params, 'PascalMultilabelDataLayerSync'))

solver = caffe.SGDSolver(osp.join(workdir, 'solver.prototxt'))

solver.net.copy_from(caffe_root + 'models/bvlc_reference_caffenet/bvlc_reference_caffenet.caffemodel')

solver.test_nets[0].share_with(solver.net)

solver.step(1)

transformer = tools.SimpleTransformer() # This is simply to add back the bias, re-shuffle the color channels to RGB, and so on...

image_index = 0 # First image in the batch.

plt.figure()

plt.imshow(transformer.deprocess(copy(solver.net.blobs['data'].data[image_index, ...])))

gtlist = solver.net.blobs['label'].data[image_index, ...].astype(np.int)

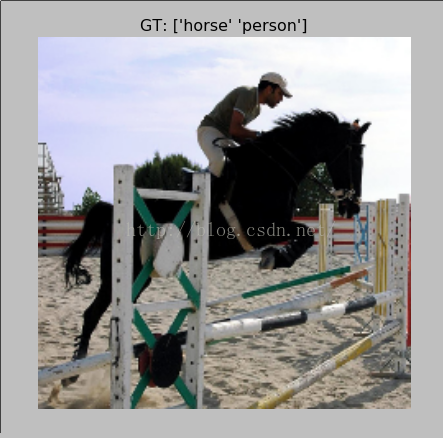

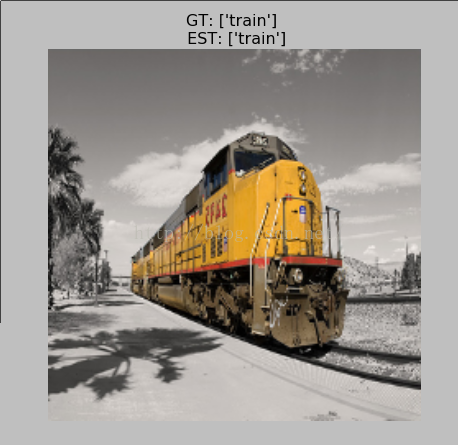

plt.title('GT: {}'.format(classes[np.where(gtlist)]))

plt.axis('off');

pylab.show()
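
`data_layer_params` reaches the Python data layer only as a string (`param_str=str(data_layer_params)`), so the layer has to parse it back into a dict. Here is a minimal sketch of that round trip, assuming the layer reads it as a Python literal; `parse_param_str` and `top_shapes` are hypothetical helpers, and `ast.literal_eval` is used as a safer stand-in for `eval`. The blob shapes returned by `top_shapes` (N x 3 x H x W for data, N x 20 for labels) are an assumption matching CaffeNet's 227x227 RGB input and the 20-way output.

```python
import ast


def parse_param_str(param_str, required=('batch_size', 'im_shape', 'split', 'pascal_root')):
    """Hypothetical helper: rebuild the dict that NetSpec serialized with str(dict).

    ast.literal_eval only accepts Python literals, so unlike eval() it
    cannot run arbitrary code that ends up in a prototxt.
    """
    params = ast.literal_eval(param_str)
    missing = [k for k in required if k not in params]
    if missing:
        raise ValueError('param_str is missing keys: {}'.format(missing))
    return params


def top_shapes(params, num_classes=20):
    # Assumed blob shapes: data is N x 3 x H x W, label is N x num_classes.
    h, w = params['im_shape']
    n = params['batch_size']
    return (n, 3, h, w), (n, num_classes)


data_layer_params = dict(batch_size=128, im_shape=[227, 227], split='train',
                         pascal_root='/home/x/git/caffe/data/VOC2012')
serialized = str(data_layer_params)  # what ends up as param_str in trainnet.prototxt
params = parse_param_str(serialized)
print(params == data_layer_params)  # True
print(top_shapes(params))           # ((128, 3, 227, 227), (128, 20))
```

Because only the string travels through the prototxt, anything put in `data_layer_params` must survive `str()` and be parseable back, which plain numbers, strings and lists do.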

```python
# train the net

def hamming_distance(gt, est):
    # fraction of the labels where the estimate agrees with the ground truth
    return sum([1 for (g, e) in zip(gt, est) if g == e]) / float(len(gt))


def check_accuracy(net, num_batches, batch_size=128):
    acc = 0.0
    for t in range(num_batches):
        net.forward()
        gts = net.blobs['label'].data
        ests = net.blobs['score'].data > 0
        for gt, est in zip(gts, ests):  # for each ground truth and estimated label vector
            acc += hamming_distance(gt, est)
    return acc / (num_batches * batch_size)


for itt in range(6):
    solver.step(100)
    print('itt:{:3d} accuracy:{:.4f}'.format((itt + 1) * 100, check_accuracy(solver.test_nets[0], 50)))


def check_baseline_accuracy(net, num_batches, batch_size=128):
    # accuracy of always predicting "no class present"
    acc = 0.0
    for t in range(num_batches):
        net.forward()
        gts = net.blobs['label'].data
        ests = np.zeros(gts.shape)  # same shape as the labels: batch_size x 20
        for gt, est in zip(gts, ests):  # for each ground truth and estimated label vector
            acc += hamming_distance(gt, est)
    return acc / (num_batches * batch_size)


# 5823 images in the VOC2012 val split; integer division gives whole batches
print('Baseline accuracy:{:.4f}'.format(check_baseline_accuracy(solver.test_nets[0], 5823 // 128)))
```
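
`check_accuracy` thresholds the raw scores at 0, which is the same as thresholding the sigmoid probability at 0.5, and then averages per-image label agreement. Because every image has the same 20 labels, that equals the mean agreement over all (image, class) entries, so it can be vectorized. A NumPy sketch on toy arrays (the helper names and numbers are made up for illustration):

```python
import numpy as np


def label_accuracy(gts, scores):
    """Fraction of (image, class) entries predicted correctly.

    gts:    (N, C) 0/1 ground truth, like net.blobs['label'].data
    scores: (N, C) raw logits, like net.blobs['score'].data
    score > 0 is equivalent to sigmoid(score) > 0.5.
    """
    ests = scores > 0
    return np.mean(ests == gts.astype(bool))


def baseline_accuracy(gts):
    # Predict "absent" for every class: accuracy is the fraction of zero labels.
    return np.mean(gts == 0)


gts = np.array([[1, 0, 0, 0],
                [0, 1, 0, 0]], dtype=np.float32)
scores = np.array([[2.0, -1.0, 0.5, -3.0],
                   [-0.2, 1.5, -2.0, -0.1]], dtype=np.float32)
print(label_accuracy(gts, scores))  # 0.875 (7 of 8 entries correct)
print(baseline_accuracy(gts))       # 0.75
```

The baseline is why the accuracy numbers need context: when only a few classes are present per image, predicting "nothing" already agrees with most entries.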

test_net = solver.test_nets[0]

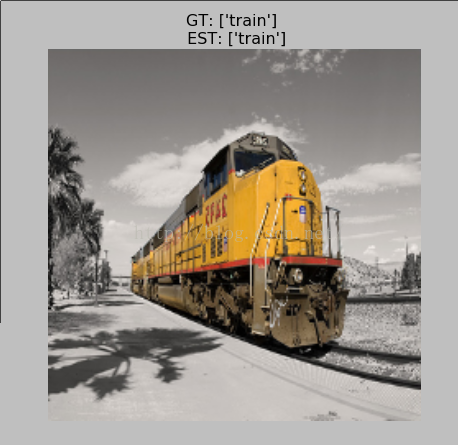

for image_index in range(5):

plt.figure()

plt.imshow(transformer.deprocess(copy(test_net.blobs['data'].data[image_index, ...])))

gtlist = test_net.blobs['label'].data[image_index, ...].astype(np.int)

estlist = test_net.blobs['score'].data[image_index, ...] > 0

plt.title('GT: {} \n EST: {}'.format(classes[np.where(gtlist)], classes[np.where(estlist)]))

plt.axis('off')

pylab.show()中间如果出现

File “examples/fcn/voc-fcn8s/solve.py”, line 17, in

solver = caffe.SGDSolver(‘examples/fcn/voc-fcn8s/solver.prototxt’)

SystemError: NULL result without error in PyObject_Call错误原因在于创建train.protxt中的python层时,找不到layers module, 也就是没有找到layers.py那个文件.

我的append路径没有有添加进去,后来直接给绝对路径就没错了。
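
Caffe loads the `module` of a Python layer with an ordinary Python import, so that module must be findable on `sys.path` before `caffe.SGDSolver(...)` parses the prototxt. A relative entry in `sys.path` is resolved against the current working directory, which is one common way an append silently fails when the script is started from another directory. Below is a minimal Python 3 sketch that adds an absolute directory and checks the module is importable up front; `add_layer_dir` and the throwaway `demo_datalayer` module are hypothetical.

```python
import importlib
import importlib.util
import os
import sys
import tempfile


def add_layer_dir(layer_dir, module_name):
    """Hypothetical helper: put an absolute dir on sys.path and verify a module is importable."""
    layer_dir = os.path.abspath(os.path.expanduser(layer_dir))
    if not os.path.isdir(layer_dir):
        raise OSError('layer directory does not exist: ' + layer_dir)
    if layer_dir not in sys.path:
        sys.path.insert(0, layer_dir)
    importlib.invalidate_caches()  # make sure newly created files are seen
    if importlib.util.find_spec(module_name) is None:
        raise ImportError('{} not found in {}'.format(module_name, layer_dir))
    return layer_dir


# Demo with a throwaway module standing in for the real data layer file.
tmp = tempfile.mkdtemp()
with open(os.path.join(tmp, 'demo_datalayer.py'), 'w') as f:
    f.write('NAME = "demo"\n')

path = add_layer_dir(tmp, 'demo_datalayer')
mod = importlib.import_module('demo_datalayer')
print(mod.NAME)  # demo
```

In multilabel.py the equivalent call would be something like `add_layer_dir('/home/x/git/caffe/examples/pycaffe/layers', 'pascal_multilabel_datalayers')`, placed before `caffe.SGDSolver`, so a bad path fails with a readable ImportError instead of the opaque SystemError above.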
