Building a Highly Available CDN Node Cluster with Nginx + Varnish

2016-11-21 14:58


Reposted from: https://my.oschina.net/imot/blog/289208

1. Environment

Linux server A (CentOS release 5.8 Final): real IP 192.168.4.97, virtual IP 192.168.4.96

Linux server B (CentOS release 5.8 Final): real IP 192.168.4.99, virtual IP 192.168.4.98

Domain setup (DNS round-robin resolving to the virtual IPs):

server.osapub.com 192.168.4.96

server.osapub.com 192.168.4.98
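Since the setup depends on the domain resolving to both virtual IPs, it may help to confirm that before going further. The following is a minimal sketch, not part of the original article: `missing_vips` is a hypothetical helper that compares a list of expected addresses against a list of resolved ones and prints whatever is absent.

```shell
#!/bin/bash
# Hypothetical helper (not from the original article): print the expected
# virtual IPs that do not appear in a list of resolved addresses.
# Usage: missing_vips "<expected IPs>" "<resolved IPs>"
missing_vips() {
    local expected="$1" resolved="$2" ip missing=""
    for ip in $expected; do
        # $resolved is left unquoted on purpose so each address lands on its own line;
        # grep -qxF does a quiet, whole-line, fixed-string match.
        if ! printf '%s\n' $resolved | grep -qxF "$ip"; then
            missing="$missing $ip"
        fi
    done
    # Drop the leading space; empty output means every VIP was resolved.
    echo "${missing# }"
}

# Example call against live DNS (assumes dig is installed):
#   missing_vips "192.168.4.96 192.168.4.98" "$(dig +short server.osapub.com)"
```

An empty result means both records are being returned; anything printed is a virtual IP that the resolver did not hand out.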

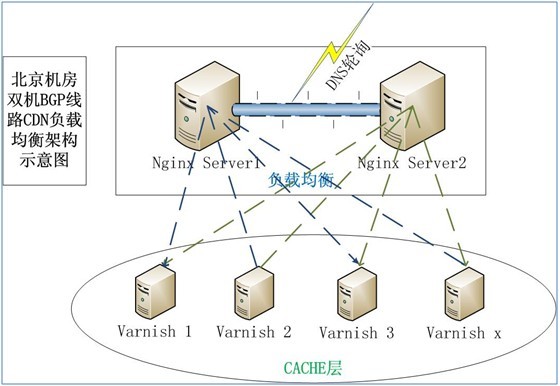

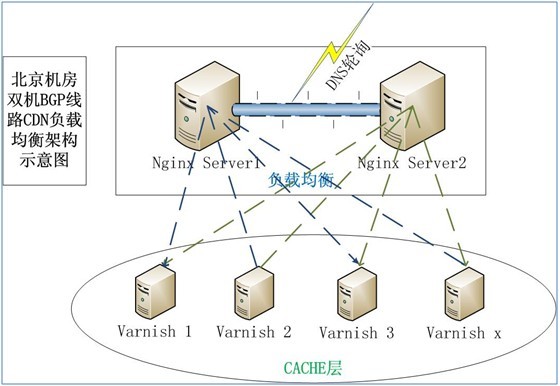

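2. Simple architecture diagram

Description: the two front-end Nginx servers are reached through DNS round-robin. Virtual IP failover combined with an HA monitoring script keeps the two front-end Nginx servers highly available, and Nginx's reverse proxy feature provides a highly available cluster for the back-end Varnish instances.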



3. Software environment setup

3.1 Compile and install Nginx

#!/bin/bash

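#### Nginx environment install script. Differences between environments may cause the script to fail; if your environment differs, run the commands manually one at a time.

# Create the working directories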

mkdir -p /dist/{dist,src}

cd /dist/dist

# Download the source packages

wget http://bbs.osapub.com/down/google-perftools-1.8.3.tar.gz &> /dev/null

wget http://bbs.osapub.com/down/libunwind-0.99.tar.gz &> /dev/null

wget http://bbs.osapub.com/down/pcre-8.01.tar.gz &> /dev/null

wget http://bbs.osapub.com/down/nginx-1.0.5.tar.gz &> /dev/null

#------------------------------------------------------------------------

# Use Google's open-source TCMalloc library to improve performance (libunwind is built first)

cd /dist/src

tar zxf ../dist/libunwind-0.99.tar.gz

cd libunwind-0.99/

## Note: do not add any other CFLAGS optimization flags here

CFLAGS=-fPIC ./configure

make clean

make CFLAGS=-fPIC

make CFLAGS=-fPIC install

if [ "$?" == "0" ]; then

echo "libunwind-0.99 installed successfully." >> ./install_log.txt

else

echo "libunwind-0.99 installation failed." >> ./install_log.txt

exit 1

fi

##----------------------------------------------------------

## Use Google's open-source TCMalloc library to improve performance under high concurrency (used here by Nginx)

cd /dist/src

tar zxf ../dist/google-perftools-1.8.3.tar.gz

cd google-perftools-1.8.3/

CHOST="x86_64-pc-linux-gnu" CFLAGS="-march=nocona -O2 -pipe" CXXFLAGS="-march=nocona -O2 -pipe" ./configure

make clean

make && make install

if [ "$?" == "0" ]; then

echo "google-perftools-1.8.3 installed successfully." >> ./install_log.txt

else

echo "google-perftools-1.8.3 installation failed." >> ./install_log.txt

exit 1

fi

echo "/usr/local/lib" > /etc/ld.so.conf.d/usr_local_lib.conf

/sbin/ldconfig

################ Install Nginx ##########################

# Install the pcre library required by Nginx

cd /dist/src

tar zxvf ../dist/pcre-8.01.tar.gz

cd pcre-8.01/

CHOST="x86_64-pc-linux-gnu" CFLAGS="-march=nocona -O2 -pipe" CXXFLAGS="-march=nocona -O2 -pipe" ./configure

make && make install

if [ "$?" == "0" ]; then

echo "pcre-8.01 installed successfully." >> ./install_log.txt

else

echo "pcre-8.01 installation failed." >> ./install_log.txt

exit 1

fi

cd ../

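## Install Nginx

## For better performance we can use Google's tcmalloc, which was installed above,

## so we compile Nginx with --with-google_perftools_module

## and set this environment variable before starting nginx: export LD_PRELOAD=/usr/local/lib/libtcmalloc.so

## Adding -O2 also helps performance a little

##

## By default Nginx is compiled in debug mode (the -g flag), set at the bottom of auto/cc/gcc; remove that -g flag,

## i.e. change CFLAGS="$CFLAGS -g" to CFLAGS="$CFLAGS", or simply delete the line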

cd /dist/src

rm -rf nginx-1.0.5

tar zxf ../dist/nginx-1.0.5.tar.gz

cd nginx-1.0.5/

sed -i 's#CFLAGS="$CFLAGS -g"#CFLAGS="$CFLAGS "#' auto/cc/gcc

make clean

CHOST="x86_64-pc-linux-gnu" CFLAGS="-march=nocona -O2 -pipe" CXXFLAGS="-march=nocona -O2 -pipe" ./configure --user=www --group=www --prefix=/usr/local/nginx --with-http_stub_status_module --with-google_perftools_module

make && make install

if [ "$?" == "0" ]; then

echo "nginx-1.0.5 installed successfully." >> ./install_log.txt

else

echo "nginx-1.0.5 installation failed." >> ./install_log.txt

exit 1

fi

cd ../

# Create the Nginx log directory

mkdir -p /data/logs

chmod +w /data/logs

chown -R www:www /data/logs

cd /usr/local/nginx/

mv conf conf_bak

ln -s /data/conf/nginx/ conf

echo 'export LD_PRELOAD=/usr/local/lib/libtcmalloc.so' > /root/nginx_start

echo 'ulimit -SHn 51200' >> /root/nginx_start

echo '/usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/nginx.conf' >> /root/nginx_start

echo '/usr/local/nginx/sbin/nginx -t' > /root/nginx_reload

echo 'kill -HUP `cat /usr/local/nginx/logs/nginx.pid`' >> /root/nginx_reload

chmod 700 /root/nginx_*

3.2 Compile and install Varnish

#!/bin/bash

# Enter the working directory

cd /dist/dist

# Download the source package

wget http://bbs.osapub.com/down/varnish-3.0.0.tar.gz &> /dev/null

cd /dist/src

rm -fr varnish-3.0.0

export PKG_CONFIG_PATH=/usr/local/lib/pkgconfig

tar zxvf ../dist/varnish-3.0.0.tar.gz

cd varnish-3.0.0

# The configure options can be adjusted to your own needs

./configure --prefix=/usr/local/varnish --enable-debugging-symbols --enable-developer-warnings --enable-dependency-tracking

make && make install

if [ "$?" == "0" ]; then

echo "varnish-3.0.0 installed successfully." >> ./install_log.txt

else

echo "varnish-3.0.0 installation failed." >> ./install_log.txt

exit 1

fi

# Create the start and restart scripts

cat > /root/varnish_restart.sh <<EOF

#!/bin/bash

pkill varnish

pkill varnish

ulimit -SHn 51200

/usr/local/varnish/sbin/varnishd -n /data/varnish -f /usr/local/varnish/vcl.conf -a 0.0.0.0:81 -s malloc,12G -g www -u www -w 4000,12000,10 -T 127.0.0.1:3500 -p http_max_hdr=256 -p http_req_hdr_len=8192

EOF

cat > /root/varnish_start.sh <<EOF

#!/bin/bash

ulimit -SHn 51200

/usr/local/varnish/sbin/varnishd -n /data/varnish -f /usr/local/varnish/vcl.conf -a 0.0.0.0:81 -s malloc,12G -g www -u www -w 4000,12000,10 -T 127.0.0.1:3500 -p http_max_hdr=256 -p http_req_hdr_len=8192

EOF

chmod 755 /root/varnish*
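Both install scripts repeat the same success/failure check after every build step, and each one writes install_log.txt into whatever source directory is current at that moment. A possible way to factor that out is sketched below. `run_step` and the `INSTALL_LOG` variable are hypothetical names introduced for illustration, and the default log path is an assumption rather than something the original scripts use.

```shell
#!/bin/bash
# Hypothetical helper (not from the original scripts): run one build step and
# append the outcome to a single log file instead of one log per source directory.
# INSTALL_LOG is an assumed location; override it to suit your layout.
INSTALL_LOG="${INSTALL_LOG:-/tmp/install_log.txt}"

run_step() {
    local name="$1"
    shift
    # "$@" is the command to run, e.g. make install
    if "$@"; then
        echo "$name: installed successfully." >> "$INSTALL_LOG"
    else
        echo "$name: installation failed." >> "$INSTALL_LOG"
        return 1
    fi
}

# Example inside an install script:
#   run_step "pcre-8.01" make install || exit 1
```

Keeping the log in one fixed place makes it easier to see, after a long unattended run, which component stopped the build.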

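3. Varnish configuration: notes on some parameters

Backend servers

Varnish has the concept of a backend (or origin) server. A backend server is the server that Varnish accelerates; it is usually the one that provides the content.

The first thing to do is tell Varnish where to find the content it should accelerate.

vcl_recv

vcl_recv is called at the start of a request, once the complete request has been received and parsed. It decides whether to serve the request, how to serve it, and, where applicable, which backend to use.

In vcl_recv you can also modify the request. Typically you alter cookies or add and remove request headers.

Note that in vcl_recv only the request object, req, is available.

vcl_fetch

vcl_fetch is called after a document has been successfully retrieved from the backend. Typical tasks are adjusting response headers, triggering ESI processing, and trying another backend server if the request failed.

In vcl_fetch the request object, req, is still available. There is also a backend response object, beresp, which contains the HTTP headers from the backend.

Varnish 3.x configuration reference: http://anykoro.sinaapp.com/?p=261

Edit /usr/local/varnish/vcl.conf; create the file if it does not exist. Note: the configuration is identical on server A and server B.

The full configuration is as follows:

########### Backend server ########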

backend server_osapub_com{

.host = "192.168.4.97";

.port = "82";

}

acl purge {

"localhost";

"127.0.0.1";

}

#############################################

sub vcl_recv {

#################BAN##########################begin

if (req.request == "BAN") {

# Same ACL check as above:

if (!client.ip ~ purge) {

error 405 "Not allowed.";

}

ban("req.http.host == " + req.http.host +

"&& req.url == " + req.url);

error 200 "Ban added";

}

#################BAN##########################end

############### Domain configuration ##################################

if(req.http.host ~ "^server.osapub.com" ||req.http.host ~ ".osapub.com" ) {

# Select which backend server group to use

set req.backend = server_osapub_com;

}

##################################################

if (req.restarts == 0) {

if (req.http.x-forwarded-for) {

set req.http.X-Forwarded-For = req.http.X-Forwarded-For + ", " + client.ip;

}

else {

set req.http.X-Forwarded-For = client.ip;

}

}

if (req.http.Accept-Encoding) {

if (req.url ~ "\.(jpg|jpeg|png|gif|gz|tgz|bz2|tbz|mp3|ogg|swf)$") {

remove req.http.Accept-Encoding;

} elsif (req.http.Accept-Encoding ~ "gzip") {

set req.http.Accept-Encoding = "gzip";}

elsif (req.http.Accept-Encoding ~ "deflate") {

set req.http.Accept-Encoding = "deflate";}

else {

remove req.http.Accept-Encoding;

}

}

if (req.http.Cache-Control ~ "no-cache") {

return (pass);

}

if (req.request != "GET" &&

req.request != "HEAD" &&

req.request != "PUT" &&

req.request != "POST" &&

req.request != "TRACE" &&

req.request != "OPTIONS" &&

req.request != "DELETE") {

return (pipe);

}

if (req.request != "GET" && req.request != "HEAD") {

return(pass);

}

# Strip cookies from static files first, so the cookie check below does not force them to pass

if (req.url ~ "\.(css|jpg|jpeg|png|gif|gz|tgz|bz2|tbz|mp3|ogg|swf)$") {

unset req.http.Cookie;

}

if (req.http.Authorization || req.http.Cookie || req.http.Authenticate) {

return (pass);

}

if (req.request == "GET" && req.url ~ "(?i)\.php($|\?)") {

return (pass);

}

return (lookup);

}

sub vcl_fetch {

set beresp.grace = 5m;

if (beresp.status == 404 || beresp.status == 503 || beresp.status == 500 || beresp.status == 502) {

set beresp.http.X-Cacheable = "NO: beresp.status";

set beresp.http.X-Cacheable-status = beresp.status;

return (hit_for_pass);

}

# Decide which file types are not cached

if (req.url ~ "\.(php|shtml|asp|aspx|jsp|js|ashx)$") {

return (hit_for_pass);

}

if (req.url ~ "\.(jpg|jpeg|png|gif|gz|tgz|bz2|tbz|mp3|ogg|swf)$") {

unset beresp.http.set-cookie;

}

if (beresp.ttl <= 0s) {

set beresp.http.X-Cacheable = "NO: !beresp.cacheable";

return (hit_for_pass);

}

else {

unset beresp.http.expires;

}

return (deliver);

}

sub vcl_deliver {

if (resp.http.magicmarker) {

unset resp.http.magicmarker;

set resp.http.age = "0";

}

# add cache hit data

if (obj.hits > 0) {

set resp.http.X-Cache = "HIT";

set resp.http.X-Cache-Hits = obj.hits;

}

else {

set resp.http.X-Cache = "MISS";

}

# Hide some sensitive HTTP headers, set by Varnish or the backend, from the response sent to the client

remove resp.http.X-Varnish;

remove resp.http.Via;

remove resp.http.Age;

remove resp.http.X-Powered-By;

remove resp.http.X-Drupal-Cache;

return (deliver);

}

sub vcl_error {

if (obj.status == 503 && req.restarts < 5) {

set obj.http.X-Restarts = req.restarts;

return (restart);

}

}

sub vcl_hit {

if (req.http.Cache-Control ~ "no-cache") {

if (! (req.http.Via || req.http.User-Agent ~ "bot|MSIE")) {

set obj.ttl = 0s;

return (restart);

}

}

return(deliver);

}

After finishing the configuration, run /root/varnish_start.sh. If Varnish starts successfully, the configuration is valid.
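Starting the daemon is one way to validate the VCL. Another option is to compile it first with `varnishd -C -f <file>`, which translates the VCL to C and is expected to exit with a non-zero status on a syntax error. The wrapper below is a minimal sketch under that assumption; `check_vcl` is a hypothetical name, and the binary path is passed in as an argument so that nothing is hard-coded.

```shell
#!/bin/bash
# Hypothetical wrapper (not from the original article): compile a VCL file
# without starting the cache, and report the outcome.
# Usage: check_vcl <path to varnishd> <path to VCL file>
check_vcl() {
    local varnishd="$1" vcl="$2"
    # -C compiles the VCL given with -f to C and exits; the generated C is discarded here.
    if "$varnishd" -C -f "$vcl" > /dev/null 2>&1; then
        echo "VCL OK: $vcl"
    else
        echo "VCL FAILED: $vcl"
        return 1
    fi
}

# Example with the paths used in this article:
#   check_vcl /usr/local/varnish/sbin/varnishd /usr/local/varnish/vcl.conf && /root/varnish_start.sh
```

Checking before a restart avoids killing a running Varnish only to find that the new configuration does not load.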

4. Configure Nginx

Edit /usr/local/nginx/conf/nginx.conf. For Nginx configuration basics, refer to the documentation available online.

Nginx configuration for server A (192.168.4.97):

user www www;

worker_processes 16;

error_log /logs/nginx/nginx_error.log crit;

#Specifies the value for maximum file descriptors that can be opened by this process.

worker_rlimit_nofile 65500;

events

{

use epoll;

worker_connections 65500;

}

http

{

include mime.types;

default_type application/octet-stream;

server_names_hash_bucket_size 128;

client_header_buffer_size 64k;

large_client_header_buffers 4 64k;

client_max_body_size 10m;

server_tokens off;

expires 1h;

sendfile on;

tcp_nopush on;

keepalive_timeout 60;

tcp_nodelay on;

fastcgi_connect_timeout 200;

fastcgi_send_timeout 300;

fastcgi_read_timeout 600;

fastcgi_buffer_size 128k;

fastcgi_buffers 4 64k;

fastcgi_busy_buffers_size 128k;

fastcgi_temp_file_write_size 128k;

fastcgi_temp_path /dev/shm;

gzip on;

gzip_min_length 2048;

gzip_buffers 4 16k;

gzip_http_version 1.1;

gzip_types text/plain text/css application/xml application/x-javascript ;

log_format access '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" $http_x_forwarded_for';

################# include ###################

upstream varnish_server {

server 127.0.0.1:81 weight=2 max_fails=3 fail_timeout=30s;

server 192.168.4.99:81 weight=2 max_fails=3 fail_timeout=30s;

ip_hash;

}

server

{

listen 80;

server_name 192.168.4.96 192.168.4.98;

index index.html index.htm index.php index.aspx;

location /

{

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_connect_timeout 200;

proxy_send_timeout 300;

proxy_read_timeout 500;

proxy_buffer_size 256k;

proxy_buffers 4 128k;

proxy_busy_buffers_size 256k;

proxy_temp_file_write_size 256k;

proxy_temp_path /dev/shm;

proxy_pass http://varnish_server;

expires off;

access_log /logs/nginx/192.168.4.96.log access;

}

}

server

{

listen 82;

server_name 192.168.4.97;

index index.html index.htm index.php;

root /data/web/awstats/www;

location ~ .*\.php$

{

include fcgi.conf;

fastcgi_pass 127.0.0.1:10080;

fastcgi_index index.php;

expires off;

}

access_log /logs/nginx/awstats.osapub.com.log access;

}

}

Nginx configuration for server B (192.168.4.99):

user www www;

worker_processes 16;

error_log /logs/nginx/nginx_error.log crit;

#Specifies the value for maximum file descriptors that can be opened by this process.

worker_rlimit_nofile 65500;

events

{

use epoll;

worker_connections 65500;

}

http

{

include mime.types;

default_type application/octet-stream;

server_names_hash_bucket_size 128;

client_header_buffer_size 64k;

large_client_header_buffers 4 64k;

client_max_body_size 10m;

server_tokens off;

expires 1h;

sendfile on;

tcp_nopush on;

keepalive_timeout 60;

tcp_nodelay on;

fastcgi_connect_timeout 200;

fastcgi_send_timeout 300;

fastcgi_read_timeout 600;

fastcgi_buffer_size 128k;

fastcgi_buffers 4 64k;

fastcgi_busy_buffers_size 128k;

fastcgi_temp_file_write_size 128k;

fastcgi_temp_path /dev/shm;

gzip on;

gzip_min_length 2048;

gzip_buffers 4 16k;

gzip_http_version 1.1;

gzip_types text/plain text/css application/xml application/x-javascript ;

log_format access '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" $http_x_forwarded_for';

################# include ###################

upstream varnish_server {

server 127.0.0.1:81 weight=2 max_fails=3 fail_timeout=30s;

server 192.168.4.97:81 weight=2 max_fails=3 fail_timeout=30s;

ip_hash;

}

server

{

listen 80;

server_name 192.168.4.96 192.168.4.98;

index index.html index.htm index.php index.aspx;

location /

{

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_connect_timeout 200;

proxy_send_timeout 300;

proxy_read_timeout 500;

proxy_buffer_size 256k;

proxy_buffers 4 128k;

proxy_busy_buffers_size 256k;

proxy_temp_file_write_size 256k;

proxy_temp_path /dev/shm;

proxy_pass http://varnish_server;

expires off;

access_log /logs/nginx/192.168.4.98.log access;

}

}

server

{

listen 82;

server_name 192.168.4.99;

index index.html index.htm index.php;

root /data/web/awstats/www;

location ~ .*\.php$

{

include fcgi.conf;

fastcgi_pass 127.0.0.1:10080;

fastcgi_index index.php;

expires off;

}

access_log /logs/nginx/awstats.osapub.com.log access;

}

}

Start Nginx:

/root/nginx_start

After a normal start, Nginx listens on ports 80 and 82.
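To confirm the listening ports from the shell, the output of `netstat -lnt` can be filtered for the expected port numbers. The sketch below is illustrative only: `missing_ports` is a hypothetical helper that reads netstat-style output on standard input and prints the ports that have no LISTEN socket.

```shell
#!/bin/bash
# Hypothetical helper (not from the original article): read "netstat -lnt"-style
# output on stdin and print the expected ports that are not in LISTEN state.
# Usage: netstat -lnt | missing_ports 80 82
missing_ports() {
    local listing port missing=""
    listing="$(cat)"
    for port in "$@"; do
        # ":<port>" followed by whitespace is the end of the local-address column,
        # so port 80 does not match 8080 or an address such as 192.168.4.80:22.
        if ! printf '%s\n' "$listing" | grep -Eq ":${port}[[:space:]].*LISTEN"; then
            missing="$missing $port"
        fi
    done
    # Drop the leading space; empty output means every port is listening.
    echo "${missing# }"
}
```

Running `netstat -lnt | missing_ports 80 81 82` on either server would also cover the Varnish listener on port 81.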

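5. Run the Nginx HA scripts

Scripts for server A: http://bbs.osapub.com/down/server_a_ha.tar.gz

Extracting the archive yields three scripts:

nginx_watchdog.sh

nginxha.sh

nginx_ha1.sh

Scripts for server B: http://bbs.osapub.com/down/server_b_ha.tar.gz

Extracting the archive yields three scripts:

nginx_watchdog.sh

nginxha.sh

nginx_ha2.sh

Note: place the scripts in the /data/sh/ha directory; otherwise you need to change the program paths inside nginxha.sh.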
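The downloadable scripts are not reproduced in this article, so their exact logic is unknown here. Purely to illustrate the idea of combining a health check with virtual IP failover, the sketch below shows one possible decision function for a single watchdog pass. The function name, the action names, and the policy itself are assumptions and should not be read as the behavior of nginxha.sh.

```shell
#!/bin/bash
# Hypothetical decision logic for one watchdog pass. This is NOT the content of
# the downloadable HA scripts; it only illustrates the failover idea.
# Arguments: local_ok and peer_ok are "1" (healthy) or "0" (unhealthy).
ha_action() {
    local local_ok="$1" peer_ok="$2"
    if [ "$local_ok" != "1" ]; then
        # Local Nginx is down: fix that before touching any virtual IP.
        echo "restart-local-nginx"
    elif [ "$peer_ok" != "1" ]; then
        # Peer is down: serve its virtual IP from this machine.
        echo "take-over-peer-vip"
    else
        # Both healthy: make sure this machine is not holding the peer's virtual IP.
        echo "release-peer-vip"
    fi
}

# On server A, "take-over-peer-vip" could map to something like the following
# (the /24 netmask and the eth0 interface name are assumptions):
#   ip addr add 192.168.4.98/24 dev eth0
```

A real watchdog would also need some protection against both nodes claiming the same address at once, which this sketch does not attempt.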

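6. Testing

1. Before testing, turn off Nginx load balancing: temporarily comment out the relevant line with a leading # so that only the local machine handles requests and the test result is unambiguous.

2. Edit the hosts file on your own machine

Append 192.168.4.99 server.osapub.com to the end of the file, save it, and then visit the site.

3. If everything works, the site loads normally.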
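The VCL above adds an X-Cache header in vcl_deliver, so the result can also be checked from the command line by looking at the response headers. The following is a minimal sketch; `cache_status` is a hypothetical helper that reads response headers on standard input and prints the X-Cache value.

```shell
#!/bin/bash
# Hypothetical helper (not from the original article): read HTTP response
# headers on stdin and print the value of the X-Cache header set in vcl_deliver.
cache_status() {
    # HTTP header lines end in CR LF, so strip the CR first.
    # Header names are case-insensitive, hence tolower(); X-Cache-Hits does not match.
    tr -d '\r' | awk -F': *' '
        tolower($1) == "x-cache" { print $2; found = 1 }
        END { if (!found) print "UNKNOWN" }'
}

# Example, sending the Host header directly instead of editing the hosts file:
#   curl -sI -H 'Host: server.osapub.com' http://192.168.4.99/ | cache_status
```

With this configuration, the first request for a cacheable static file would be expected to report MISS and a repeat request HIT, while PHP pages and requests carrying cookies are passed to the backend and are never reported as HIT.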

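At this point the whole architecture is basically up and running. Tune the configuration files to your actual needs; the HA scripts can be tuned further as well. For alerting, refer to the community script that installs mutt-based alerts automatically.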
