Hive Remote Mode Installation (1.00)

2016-09-11 15:57


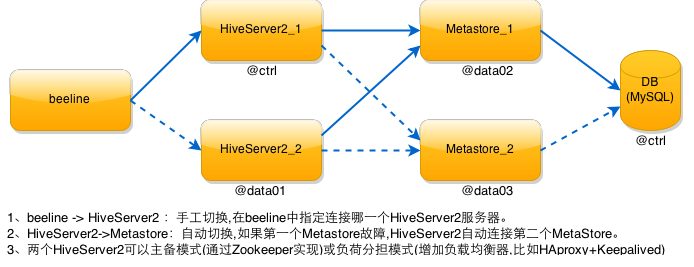

HiveServer2 and the metastore are deployed separately. Metadata is stored in MySQL, and MySQL is deployed separately from the metastore.

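mysql: deployed on the ctrl node

hiveserver2: deployed on the ctrl and data01 nodes

metastore: deployed on the data02 and data03 nodes

beeline: deployed on any other machine

Deployment diagram:

First, follow the document "Hive Single-Node Installation and Usage (1.00)" to install Hive on a single node. Then copy the whole directory to the other nodes and configure each node as described below.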


hiveserver2 does not connect to the MySQL database, so it needs no MySQL connection settings.


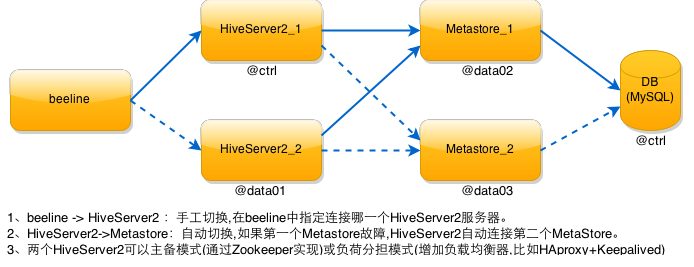


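Installation and configuration

hiveserver2 node configuration

Hive 1.0 no longer ships hiveserver; hiveserver2 replaces it.

hiveserver2 no longer needs the hive.metastore.local setting. If hive.metastore.uris is empty, the metastore is local; otherwise it is remote. Setting hive.metastore.uris is enough: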

```xml
<property>
  <name>hive.metastore.uris</name>
  <value>thrift://data02:9083,thrift://data03:9083</value>
  <description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>
</property>
```
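Before starting hiveserver2 it can help to confirm that every metastore listed in hive.metastore.uris is reachable. The following is a minimal sketch, not part of the original procedure: the function names `split_uris` and `check_metastores` are hypothetical, and it assumes bash (for `/dev/tcp`) and the coreutils `timeout` command are available.

```shell
#!/usr/bin/env bash
# Sketch: split a hive.metastore.uris value into "host port" pairs and
# probe each endpoint. Function names are illustrative, not Hive tooling.

# Print one "host port" line per thrift:// URI in a comma-separated list.
split_uris() {
  local uri hostport
  local IFS=','
  for uri in $1; do
    hostport="${uri#thrift://}"          # drop the scheme
    printf '%s %s\n' "${hostport%%:*}" "${hostport##*:}"
  done
}

# Try to open a TCP connection to each metastore (3 second limit each).
check_metastores() {
  local host port
  while read -r host port; do
    if timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" </dev/null 2>/dev/null; then
      echo "${host}:${port} reachable"
    else
      echo "${host}:${port} NOT reachable"
    fi
  done < <(split_uris "$1")
}

# Example:
#   check_metastores 'thrift://data02:9083,thrift://data03:9083'
```

A successful TCP connection only shows that something is listening on the port; it does not prove the metastore is healthy.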

metastore node configuration

The metastore nodes need the MySQL connection settings:

```xml
<property>
  <name>javax.jdo.option.ConnectionURL</name>
  <value>jdbc:mysql://ctrl:3306/hive?createDatabaseIfNotExist=true</value>
  <description>JDBC connect string for a JDBC metastore</description>
</property>
<property>
  <name>javax.jdo.option.ConnectionDriverName</name>
  <value>com.mysql.jdbc.Driver</value>
  <description>Driver class name for a JDBC metastore</description>
</property>
<property>
  <name>javax.jdo.option.ConnectionUserName</name>
  <value>hive</value>
  <description>username to use against metastore database</description>
</property>
<property>
  <name>javax.jdo.option.ConnectionPassword</name>
  <value>hive</value>
  <description>password to use against metastore database</description>
</property>
```
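These settings assume that a MySQL account named hive with password hive already exists on ctrl and may connect from the metastore nodes. If it does not, something like the sketch below could create it. The function name `metastore_grant_sql` is hypothetical, and the `'%'` host pattern (connect from any host) is an assumption that should be tightened in practice. `CREATE USER` fails if the account already exists.

```shell
#!/usr/bin/env bash
# Sketch: print SQL that creates the metastore account used in the JDBC
# settings. Assumption: the account may connect from any host ('%').
metastore_grant_sql() {
  local db="$1" user="$2" password="$3"
  cat <<EOF
CREATE USER '${user}'@'%' IDENTIFIED BY '${password}';
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'%';
EOF
}

# Example (run on ctrl as a MySQL administrator):
#   metastore_grant_sql hive hive hive | mysql -u root -p
```

The database itself does not need to be created by hand, because the connection URL carries createDatabaseIfNotExist=true.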

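Other settings:

hive.metastore.warehouse.dir: the directory in HDFS where Hive stores its data

hive.exec.scratchdir: Hive's scratch directory in HDFS

hive.exec.local.scratchdir: Hive's local scratch directory, /tmp/hive

hive.downloaded.resources.dir: the local temporary directory for resources Hive downloads, /tmp/hive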

```xml
<property>
  <name>hive.metastore.warehouse.dir</name>
  <value>/user/hive/warehouse</value>
  <description>location of default database for the warehouse</description>
</property>
<property>
  <name>hive.exec.scratchdir</name>
  <value>/tmp/hive</value>
  <description>HDFS root scratch dir for Hive jobs which gets created with write all (733) permission. For each connecting user, an HDFS scratch dir: ${hive.exec.scratchdir}/&lt;username&gt; is created, with ${hive.scratch.dir.permission}.</description>
</property>
<property>
  <name>hive.exec.local.scratchdir</name>
  <value>/tmp/hive</value>
  <description>Local scratch space for Hive jobs</description>
</property>
<property>
  <name>hive.downloaded.resources.dir</name>
  <value>/tmp/hive</value>
  <description>Temporary local directory for added resources in the remote file system.</description>
</property>
```

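beeline client installation

beeline is bundled with the Hive package and needs no separate installation. Download the Hive package, then set the Hadoop home directory: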

```shell
cd /opt
wget http://apache.fayea.com/hive/stable/apache-hive-1.0.0-bin.tar.gz
tar -zxvf apache-hive-1.0.0-bin.tar.gz
mv apache-hive-1.0.0-bin hive
cd /opt/hive/conf
mv hive-env.sh.template hive-env.sh
```

Add the following to hive-env.sh:

```shell
export HADOOP_HOME=/opt/hadoop/client/hadoop-2.4.1
export HIVE_HOME=/opt/hive
export HIVE_CONF_DIR=/opt/hadoop/client/hive/conf
```

Startup

Starting hiveserver2

```
[hadoop@ctrl bin]$ hive --service hiveserver2 &
[hadoop@data01 bin]$ hive --service hiveserver2 &
```

Or:

```
[hadoop@ctrl bin]$ hiveserver2 &
[hadoop@data01 bin]$ hiveserver2 &
```

Starting the metastore

```
[hadoop@data02 bin]$ hive --service metastore &
[hadoop@data03 bin]$ hive --service metastore &
```
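A service started with a bare `&` may be stopped when the terminal that launched it closes, and its output goes to that terminal. One common alternative is to start it under `nohup` and redirect the output to a file. The sketch below illustrates this; the function name `start_hive_service`, the `DRY_RUN` switch, and the output file naming are all assumptions made for the example, not part of Hive.

```shell
#!/usr/bin/env bash
# Sketch: start a Hive service in the background so it survives logout.
# Usage: start_hive_service <service> [output_dir]
# With DRY_RUN=1 the command is printed instead of executed.
start_hive_service() {
  local service="$1" log_dir="${2:-/tmp}"
  local out="${log_dir}/hive-${service}.out"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "nohup hive --service ${service} > ${out} 2>&1 &"
    return 0
  fi
  # stdout and stderr both go to the output file
  nohup hive --service "${service}" > "${out}" 2>&1 &
  echo "started ${service}, pid $!, output in ${out}"
}

# Example, on data02 and data03:
#   start_hive_service metastore /opt/hive/log
# Example, on ctrl and data01:
#   start_hive_service hiveserver2 /opt/hive/log
```

The output directory must exist before the service is started.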

Connecting with beeline

```
[root@cheyo conf]# beeline --color=true --fastConnect=true -u jdbc:hive2://192.168.99.107:10000
[root@cheyo conf]# ../bin/beeline --color=true --fastConnect=true
Beeline version 1.0.0 by Apache Hive
beeline> !connect jdbc:hive2://ctrl:10000
scan complete in 17ms
Connecting to jdbc:hive2://ctrl:10000
Enter username for jdbc:hive2://ctrl:10000:hadoop
Enter password for jdbc:hive2://ctrl:10000:
Connected to: Apache Hive (version 1.0.0)
Driver: Hive JDBC (version 1.0.0)
Transaction isolation: TRANSACTION_REPEATABLE_READ
0: jdbc:hive2://ctrl:10000> show tables;
+-----------+--+
| tab_name  |
+-----------+--+
| person    |
| t_hive    |
+-----------+--+
2 rows selected (0.404 seconds)
0: jdbc:hive2://ctrl:10000> select * from person;
+--------------+-------------+--+
| person.name  | person.age  |
+--------------+-------------+--+
| cheyo        | 25          |
| yahoo        | 30          |
| people       | 27          |
+--------------+-------------+--+
3 rows selected (0.413 seconds)
0: jdbc:hive2://ctrl:10000>
```
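The session above is interactive. For scripts, beeline can also run a single statement and exit, using its `-u` (URL), `-n` (username), and `-e` (query) options. The sketch below wraps this; the helper names `hs2_url` and `run_query` are hypothetical, and the default database name `default` in the URL is an assumption.

```shell
#!/usr/bin/env bash
# Sketch: run one HiveQL statement through beeline without a prompt.

# Build a HiveServer2 JDBC URL; port defaults to 10000 as used above.
hs2_url() {
  local host="$1" port="${2:-10000}" db="${3:-default}"
  printf 'jdbc:hive2://%s:%s/%s\n' "$host" "$port" "$db"
}

# Usage: run_query <host> <username> <sql>
run_query() {
  local host="$1" user="$2" sql="$3"
  beeline -u "$(hs2_url "$host")" -n "$user" -e "$sql"
}

# Example:
#   run_query ctrl hadoop 'show tables;'
```

The username passed with `-n` plays the same role as the one typed at the interactive prompt.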

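Starting hwi (web interface)

Make sure the file hive-hwi-1.0.0.war exists in the /opt/hive/lib/ directory. hwi only needs to be started on one of the HiveServer2 nodes.

Start the hwi service:

```
[hadoop@ctrl bin]$ hive --service hwi &
```

Access the web interface:

http://ip:9999/hwi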

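Notes

The username entered when connecting with beeline is used for permission checks. Entering the wrong username can cause HiveQL commands to fail with a permission error.

Logs

By default Hive writes its log to /tmp/{username}/hive.log. This can be changed in the configuration files. For example, to change the log path to /opt/hive/log/: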

```shell
cd /opt/hive
mkdir -p /opt/hive/log
cd /opt/hive/conf
mv hive-log4j.properties.template hive-log4j.properties
vi hive-log4j.properties
# change the following line to: hive.log.dir=/opt/hive/log
mv hive-exec-log4j.properties.template hive-exec-log4j.properties
vi hive-exec-log4j.properties
# change the following line to: hive.log.dir=/opt/hive/log
```
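When the same change has to be made on several nodes, editing each file in vi gets tedious. The edit can be scripted with sed instead. This is a sketch under assumptions: the function name `set_hive_log_dir` is hypothetical, the properties file is assumed to already contain a line beginning with `hive.log.dir=`, and the target directory must not contain the characters `|` or `&`.

```shell
#!/usr/bin/env bash
# Sketch: rewrite the hive.log.dir line of a log4j properties file in place.
# Usage: set_hive_log_dir <properties_file> <log_dir>
# A backup of the original file is kept as <properties_file>.bak.
set_hive_log_dir() {
  local file="$1" dir="$2"
  sed -i.bak "s|^hive\.log\.dir=.*|hive.log.dir=${dir}|" "$file"
}

# Example:
#   set_hive_log_dir /opt/hive/conf/hive-log4j.properties /opt/hive/log
#   set_hive_log_dir /opt/hive/conf/hive-exec-log4j.properties /opt/hive/log
```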
