Notes on the CMT Tracking Algorithm Code

2016-05-19 18:09


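The code lives mainly in the CMT class, which implements two functions, initialize and processFrame, and maps onto the paper very closely. I have annotated what each member does next to it.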

Code download link: click to open the link.


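Summary: this is a tracking algorithm based on keypoint matching. Its novelty is that the target position is found by letting keypoints vote for it, using each keypoint's position relative to the object center. Its advantage is that, for an object that does not deform, however the object moves or rotates, the distance from each of its keypoints to the center stays fixed up to the current scale factor.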

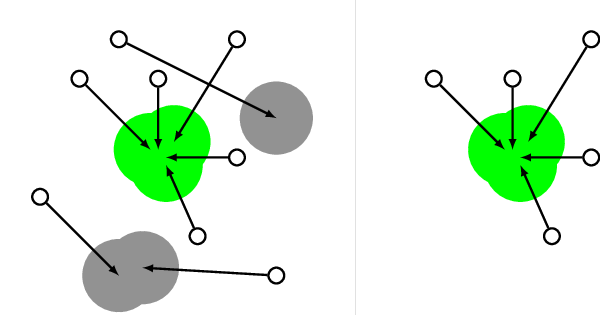

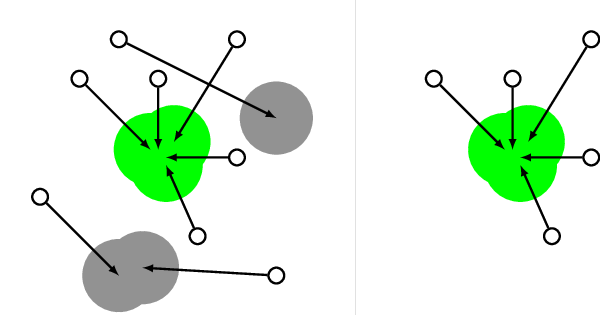

The author puts it this way in the paper: "The main idea behind CMT is to break down the object of interest into tiny parts, known as keypoints. In each frame, we try to again find the keypoints that were already there in the initial selection of the object of interest. We do this by employing two different kind of methods. First, we track keypoints from the previous frame to the current frame by estimating what is known as its optic flow. Second, we match keypoints globally by comparing their descriptors. As both of these methods are error-prone, we employ a novel way of looking for consensus within the found keypoints by letting each keypoint vote for the object center, as shown in the following image:"

The object center is then determined by clustering these votes.


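```cpp
// Excerpt from CMT.h. Fusion, Matcher, Tracker and Consensus are CMT's own classes;
// the OpenCV types (Mat, Rect, Point2f, Ptr, FeatureDetector, ...) and std::string/vector
// are assumed to be brought into scope by using-declarations in the project headers.
namespace cmt
{

class CMT
{
public:
    CMT() : str_detector("FAST"), str_descriptor("BRISK") {} // default detector and descriptor

    void initialize(const Mat im_gray, const Rect rect); // rect is the initial target bounding box
    void processFrame(const Mat im_gray);

    Fusion fusion;       // point fusion: merges tracked and matched points into one set, without duplicates
    Matcher matcher;     // descriptor matching via DescriptorMatcher::knnMatch
    Tracker tracker;     // optical-flow tracker: follows the previous frame's keypoints into the current frame
    Consensus consensus; // estimates scale and rotation angle, and lets keypoints vote for the object center

    string str_detector;
    string str_descriptor;

    vector<Point2f> points_active; // public for visualization purposes
    RotatedRect bb_rot;

private:
    Ptr<FeatureDetector> detector;
    Ptr<DescriptorExtractor> descriptor;

    Size2f size_initial;

    vector<int> classes_active; // class of each active point: index of a foreground keypoint (inside the initial box)

    float theta;

    Mat im_prev;
};

} /* namespace cmt */
```

The most important part, the tracking pipeline: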

The initialization code, with my annotations:

```cpp
void CMT::initialize(const Mat im_gray, const Rect rect)
{
    //Remember initial size
    size_initial = rect.size();

    //Remember initial image
    im_prev = im_gray;

    //Compute center of rect
    Point2f center = Point2f(rect.x + rect.width/2.0, rect.y + rect.height/2.0);

    //Initialize rotated bounding box
    bb_rot = RotatedRect(center, size_initial, 0.0);

    //Initialize detector and descriptor.
    //FeatureDetector::create is the OpenCV 2.4 factory; valid names include "FAST", "ORB", "SIFT",
    //"STAR", "MSER", "GFTT", "HARRIS", "Dense", "SimpleBlob"
    detector = FeatureDetector::create(str_detector);         //FAST by default
    descriptor = DescriptorExtractor::create(str_descriptor); //BRISK by default

    //Get initial keypoints in the whole image
    vector<KeyPoint> keypoints;
    detector->detect(im_gray, keypoints);

    //Divide keypoints into foreground (strictly inside the target bounding box) and background
    vector<KeyPoint> keypoints_fg;
    vector<KeyPoint> keypoints_bg;
    for (size_t i = 0; i < keypoints.size(); i++)
    {
        const Point2f& pt = keypoints[i].pt;
        if (pt.x > rect.x && pt.y > rect.y && pt.x < rect.br().x && pt.y < rect.br().y)
            keypoints_fg.push_back(keypoints[i]);
        else
            keypoints_bg.push_back(keypoints[i]);
    }

    //Compute foreground/background descriptors
    Mat descs_fg;
    Mat descs_bg;
    descriptor->compute(im_gray, keypoints_fg, descs_fg);
    descriptor->compute(im_gray, keypoints_bg, descs_bg);

    //Only now is the right time to convert keypoints to points and to create the foreground
    //classes, as compute() might remove some keypoints (classes must stay in sync with points)
    vector<Point2f> points_fg;
    vector<Point2f> points_bg;
    vector<int> classes_fg;
    for (size_t i = 0; i < keypoints_fg.size(); i++)
    {
        points_fg.push_back(keypoints_fg[i].pt);
        classes_fg.push_back(static_cast<int>(i)); //class = index of the foreground keypoint
    }
    for (size_t i = 0; i < keypoints_bg.size(); i++)
    {
        points_bg.push_back(keypoints_bg[i].pt);
    }

    //Create normalized points: offset of each foreground point from the center
    vector<Point2f> points_normalized;
    for (size_t i = 0; i < points_fg.size(); i++)
    {
        points_normalized.push_back(points_fg[i] - center);
    }

    //Initialize matcher
    matcher.initialize(points_normalized, descs_fg, classes_fg, descs_bg, center);

    //Initialize consensus: precompute pairwise distances and angles between the normalized points
    consensus.initialize(points_normalized);

    //Create initial set of active keypoints (assigned once, not inside a loop)
    points_active = points_fg;
    classes_active = classes_fg;
}
```

processFrame: the code is short and sweet, and easy to follow:

```cpp
void CMT::processFrame(const Mat im_gray)
{
    //Track keypoints from the previous frame with optical flow
    vector<Point2f> points_tracked;
    vector<unsigned char> status;
    tracker.track(im_prev, im_gray, points_active, points_tracked, status);

    //Keep only the classes of successfully tracked points
    vector<int> classes_tracked;
    for (size_t i = 0; i < classes_active.size(); i++)
    {
        if (status[i])
        {
            classes_tracked.push_back(classes_active[i]);
        }
    }

    //Detect keypoints, compute descriptors
    vector<KeyPoint> keypoints;
    detector->detect(im_gray, keypoints);
    Mat descriptors;
    descriptor->compute(im_gray, keypoints, descriptors);

    //Match keypoints globally
    vector<Point2f> points_matched_global;
    vector<int> classes_matched_global;
    matcher.matchGlobal(keypoints, descriptors, points_matched_global, classes_matched_global);

    //Fuse tracked and globally matched points (preferFirst: the tracked point wins for a duplicate class)
    vector<Point2f> points_fused;
    vector<int> classes_fused;
    fusion.preferFirst(points_tracked, classes_tracked, points_matched_global, classes_matched_global,
                       points_fused, classes_fused);

    //Estimate scale and rotation from the fused points
    float scale;
    float rotation;
    consensus.estimateScaleRotation(points_fused, classes_fused, scale, rotation);

    //Find inliers and the center of their votes
    Point2f center;
    vector<Point2f> points_inlier;
    vector<int> classes_inlier;
    consensus.findConsensus(points_fused, classes_fused, scale, rotation,
                            center, points_inlier, classes_inlier);

    //Match keypoints locally
    vector<Point2f> points_matched_local;
    vector<int> classes_matched_local;
    matcher.matchLocal(keypoints, descriptors, center, scale, rotation, points_matched_local, classes_matched_local);

    //Clear active points
    points_active.clear();
    classes_active.clear();

    //Fuse locally matched points and inliers; the result is the active set for the next frame
    fusion.preferFirst(points_matched_local, classes_matched_local, points_inlier, classes_inlier,
                       points_active, classes_active);

    //rotation is in radians; RotatedRect expects degrees
    bb_rot = RotatedRect(center, size_initial * scale, rotation/CV_PI * 180);

    //Remember current image (Mat assignment is shallow: it shares the caller's pixel buffer)
    im_prev = im_gray;
}
```

The algorithm diagrams from my previous CMT note, reposted near the top of this post, make this flow clearer.

