Using the HBase Java API

2016-04-13 21:53


Maven pom.xml configuration

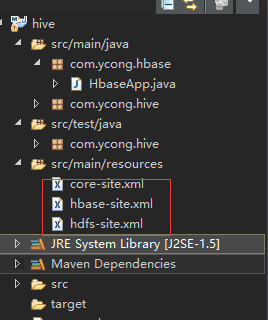

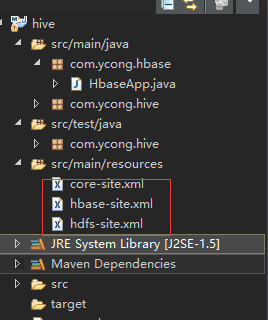


```xml
<properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <hive.version>0.13.1</hive.version>
    <hbase.version>0.98.6-hadoop2</hbase.version>
</properties>

<dependencies>
    <!-- HBase Client -->
    <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-server</artifactId>
        <version>${hbase.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-client</artifactId>
        <version>${hbase.version}</version>
    </dependency>
</dependencies>
```

Put the Hadoop and HBase configuration files on the classpath
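`HBaseConfiguration.create()` loads `hbase-default.xml` and `hbase-site.xml` as classpath resources, so if `hbase-site.xml` is not visible the client falls back to the built-in defaults (for example, a ZooKeeper quorum of `localhost`). In a Maven project, files placed under `src/main/resources` end up on the classpath. The class below is a hypothetical helper, not part of the original post, that uses only the JDK to check whether the usual files can be found; it assumes the thread context class loader is the same loader your HBase client resolves resources through.

```java
import java.net.URL;

/**
 * Hypothetical helper: reports whether the usual Hadoop/HBase client
 * configuration files are visible on the classpath.
 */
public class ClasspathConfigCheck {

    // Conventional file names; core-site.xml and hdfs-site.xml come from Hadoop, hbase-site.xml from HBase
    public static final String[] CONFIG_FILES = {"core-site.xml", "hdfs-site.xml", "hbase-site.xml"};

    /** Returns the URL of the named classpath resource, or null if it cannot be found. */
    public static URL locate(String resourceName) {
        // Assumption: the context class loader is the one the HBase client uses
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        if (loader == null) {
            loader = ClasspathConfigCheck.class.getClassLoader();
        }
        return loader.getResource(resourceName);
    }

    public static void main(String[] args) {
        for (String name : CONFIG_FILES) {
            URL url = locate(name);
            System.out.println(name + " -> " + (url == null ? "NOT FOUND on classpath" : url));
        }
    }
}
```

Running it from the same classpath as `HbaseApp` (for example, as a second main class in the same project) shows at a glance which file is missing.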

Java API operations
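HBase keeps rows sorted by row key as raw bytes compared lexicographically, and a `Scan`'s start row is inclusive while its stop row is exclusive. For the scan method below (start row `10001`, stop row `10005`), that means row `10005` is not returned, a key such as `100010` would be, and `1001` would not, even though it is numerically smaller. The JDK-only sketch below imitates this with a `TreeMap<String, String>` standing in for the table; it assumes ASCII row keys, for which `String` ordering matches HBase's byte ordering. The class name and sample keys are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableMap;
import java.util.TreeMap;

/** Hypothetical simulation of which row keys a start/stop-row Scan returns. */
public class ScanRangeDemo {

    /** Returns the keys in [startRow, stopRow): start inclusive, stop exclusive. */
    public static List<String> scan(NavigableMap<String, String> table, String startRow, String stopRow) {
        return new ArrayList<>(table.subMap(startRow, true, stopRow, false).keySet());
    }

    public static void main(String[] args) {
        // The TreeMap keeps keys in lexicographic order, like HBase row keys
        NavigableMap<String, String> table = new TreeMap<>();
        for (String key : new String[] {"10001", "100010", "10002", "10004", "10005", "1001", "9"}) {
            table.put(key, "row " + key);
        }
        System.out.println(scan(table, "10001", "10005")); // prints [10001, 100010, 10002, 10004]
    }
}
```

If numeric ranges matter, a common approach is to zero-pad row keys to a fixed width so that lexicographic and numeric order agree.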

```java
package com.ycong.hbase;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.util.Bytes;
import org.apache.hadoop.io.IOUtils;

public class HbaseApp {

    // Open a table handle; HBaseConfiguration.create() reads hbase-site.xml from the classpath
    public static HTable getHTableByName(String tableName) throws IOException {
        Configuration conf = HBaseConfiguration.create();
        return new HTable(conf, tableName);
    }

    // Print every cell as: rowkey<TAB>family:qualifier->value timestamp
    private static void printCells(Result result) {
        for (Cell cell : result.rawCells()) {
            System.out.print(Bytes.toString(CellUtil.cloneRow(cell)) + "\t");
            System.out.println(Bytes.toString(CellUtil.cloneFamily(cell))
                    + ":" + Bytes.toString(CellUtil.cloneQualifier(cell))
                    + "->" + Bytes.toString(CellUtil.cloneValue(cell))
                    + " " + cell.getTimestamp());
        }
    }

    // Read info:name and info:age of a single row
    public static void getData(String rowkey) throws IOException {
        HTable table = getHTableByName("student");
        try {
            Get get = new Get(Bytes.toBytes(rowkey));
            get.addColumn(Bytes.toBytes("info"), Bytes.toBytes("name"));
            get.addColumn(Bytes.toBytes("info"), Bytes.toBytes("age"));
            printCells(table.get(get));
        } finally {
            table.close();
        }
    }

    // Write info:age and info:city for a single row (values are stored as string bytes)
    public static void putData(String rowkey) throws IOException {
        HTable table = getHTableByName("student");
        try {
            Put put = new Put(Bytes.toBytes(rowkey));
            put.add(Bytes.toBytes("info"), Bytes.toBytes("age"), Bytes.toBytes("10"));
            put.add(Bytes.toBytes("info"), Bytes.toBytes("city"), Bytes.toBytes("shanghai"));
            table.put(put);
        } finally {
            table.close();
        }
    }

    // Delete the whole "info" column family of row 10020
    public static void deleteData() {
        HTable table = null;
        try {
            table = getHTableByName("student");
            Delete delete = new Delete(Bytes.toBytes("10020"));
            // To delete a single column instead:
            // delete.deleteColumn(Bytes.toBytes("info"), Bytes.toBytes("age"));
            delete.deleteFamily(Bytes.toBytes("info"));
            table.delete(delete);
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            IOUtils.closeStream(table);
        }
    }

    // Scan rows from 10001 (inclusive) up to 10005 (exclusive)
    public static void scanData() {
        HTable table = null;
        ResultScanner resultScanner = null;
        try {
            table = getHTableByName("student");
            Scan scan = new Scan();
            scan.setStartRow(Bytes.toBytes("10001"));
            scan.setStopRow(Bytes.toBytes("10005"));
            resultScanner = table.getScanner(scan);
            for (Result result : resultScanner) {
                printCells(result);
                System.out.println("===========================================");
            }
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            // Release the scanner before closing the table
            if (resultScanner != null) {
                resultScanner.close();
            }
            IOUtils.closeStream(table);
        }
    }

    public static void main(String[] args) throws IOException {
        scanData();
    }
}
```

Integrating HBase with MapReduce (Part 1: CLASSPATH and ImportTsv)

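```shell
export HBASE_HOME=/opt/app/hbase-0.98.6-hadoop2
export HADOOP_HOME=/opt/app/hadoop-2.5.0
# Put the HBase jars that MapReduce jobs need on Hadoop's classpath
export HADOOP_CLASSPATH=`${HBASE_HOME}/bin/hbase mapredcp`

# Without a program name, this prints the list of available programs (importtsv, completebulkload, ...)
$HADOOP_HOME/bin/yarn jar $HBASE_HOME/lib/hbase-server-0.98.6-hadoop2.jar

# Import tab-separated data from HDFS into the student table
$HADOOP_HOME/bin/yarn jar \
$HBASE_HOME/lib/hbase-server-0.98.6-hadoop2.jar importtsv \
-Dimporttsv.columns=HBASE_ROW_KEY,\
info:name,info:age,info:sex \
student \
hdfs://bigdata.eclipse.com:8020/user/ycong/hbase/importtsv
```

Integrating HBase with MapReduce (Part 2: Bulk Load Data)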

Convert the input files to HFile format:

```shell
$HADOOP_HOME/bin/yarn jar \
$HBASE_HOME/lib/hbase-server-0.98.6-hadoop2.jar importtsv \
-Dimporttsv.bulk.output=hdfs://bigdata.eclipse.com:8020/user/ycong/hbase/hfileOutput \
-Dimporttsv.columns=HBASE_ROW_KEY,\
info:name,info:age,info:sex \
student \
hdfs://bigdata.eclipse.com:8020/user/ycong/hbase/importtsv
```

Load the HFiles into the HBase table:

```shell
$HADOOP_HOME/bin/yarn jar $HBASE_HOME/lib/hbase-server-0.98.6-hadoop2.jar \
completebulkload \
hdfs://bigdata.eclipse.com:8020/user/ycong/hbase/hfileOutput \
student
```
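Both `importtsv` runs read text files from `/user/ycong/hbase/importtsv`, one row per line, with fields split on a tab by default (configurable with `-Dimporttsv.separator`) and mapped in the order given by `-Dimporttsv.columns`, so here the row key comes first, then `info:name`, `info:age`, `info:sex`. The program below is a hypothetical helper, not from the original post, that writes such a file locally; the class name, sample records and the output name `student.tsv` are made up for illustration.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper that writes ImportTsv input: rowkey, info:name, info:age, info:sex. */
public class TsvSampleWriter {

    /** Joins fields with tabs; rejects fields that would break the line/column layout. */
    public static String toTsvLine(String... fields) {
        for (String field : fields) {
            if (field.indexOf('\t') >= 0 || field.indexOf('\n') >= 0) {
                throw new IllegalArgumentException("field contains a tab or newline: " + field);
            }
        }
        return String.join("\t", fields);
    }

    public static void main(String[] args) throws IOException {
        List<String> lines = new ArrayList<>();
        // Hypothetical sample records in HBASE_ROW_KEY,info:name,info:age,info:sex order
        lines.add(toTsvLine("10001", "zhangsan", "20", "male"));
        lines.add(toTsvLine("10002", "lisi", "22", "female"));
        Path out = Paths.get(args.length > 0 ? args[0] : "student.tsv");
        Files.write(out, lines, StandardCharsets.UTF_8);
        System.out.println("Wrote " + lines.size() + " lines to " + out.toAbsolutePath());
    }
}
```

Assuming the default filesystem is the HDFS instance above, the file could then be uploaded with `$HADOOP_HOME/bin/hdfs dfs -put student.tsv /user/ycong/hbase/importtsv/` before running either import.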
