Spark java.lang.UnsatisfiedLinkError: org.apache.hadoop.util.NativeCrc32

2015-09-30 09:49

806 查看

环境: Spark1.3-Hadoop2.6-bin 、Hadoop-2.5

在运行Spark程序写出文件(savaAsTextFile)的时候,我遇到了这个错误:

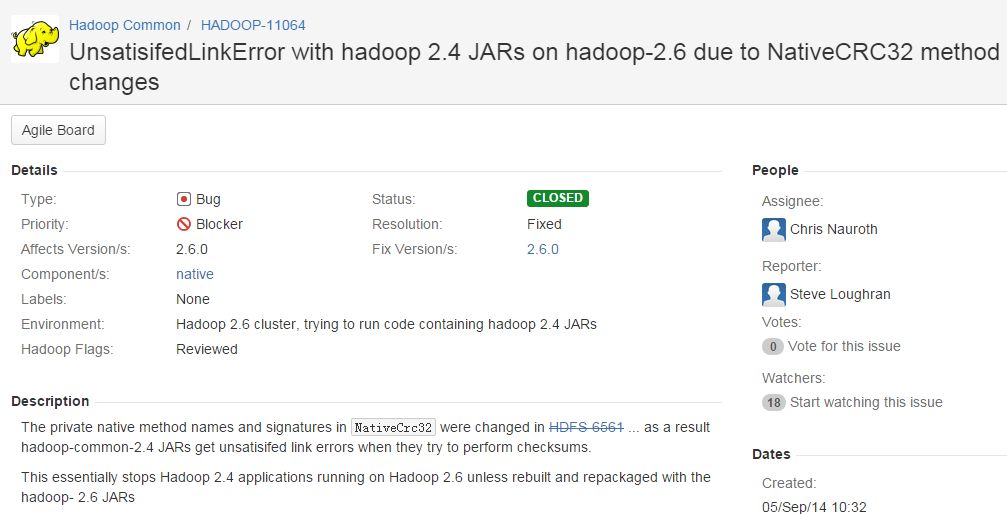

查到的还是什么window远程访问Hadoop的错误,最后查阅官方文档HADOOP-11064

看描述可以清楚这是Spark版本与Hadoop版本不适配导致的错误,遇到这种错误的一般是从Spark官网下载预编译好的二进制bin文件。

因此解决办法有两种:

1. 重新下载并配置Spark预编译好的对应的Hadoop版本

2. 从官网上下载Spark源码按照预装好的Hadoop版本进行编译(毕竟Spark的配置比Hadoop轻松不少)。

在运行Spark程序写出文件(savaAsTextFile)的时候,我遇到了这个错误:

java.lang.UnsatisfiedLinkError: org.apache.hadoop.util.NativeCrc32.nativeVerifyChunkedSums(IILjava/nio/ByteBuffer;ILjava/nio/ByteBuffer;IILjava/lang/String;J)V at org.apache.hadoop.util.NativeCrc32.nativeVerifyChunkedSums(Native Method) at org.apache.hadoop.util.NativeCrc32.verifyChunkedSums(NativeCrc32.java:57) at org.apache.hadoop.util.DataChecksum.verifyChunkedSums(DataChecksum.java:291) at org.apache.hadoop.hdfs.BlockReaderLocal.doByteBufferRead(BlockReaderLocal.java:338) at org.apache.hadoop.hdfs.BlockReaderLocal.fillSlowReadBuffer(BlockReaderLocal.java:388) at org.apache.hadoop.hdfs.BlockReaderLocal.read(BlockReaderLocal.java:408) at org.apache.hadoop.hdfs.DFSInputStream$ByteArrayStrategy.doRead(DFSInputStream.java:642) at org.apache.hadoop.hdfs.DFSInputStream.readBuffer(DFSInputStream.java:698) at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:752) at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:793) at java.io.DataInputStream.read(DataInputStream.java:149) at org.apache.hadoop.io.IOUtils.readFully(IOUtils.java:192) at org.apache.hadoop.hbase.util.FSUtils.getVersion(FSUtils.java:495) at org.apache.hadoop.hbase.util.FSUtils.checkVersion(FSUtils.java:582) at org.apache.hadoop.hbase.master.MasterFileSystem.checkRootDir(MasterFileSystem.java:460) at org.apache.hadoop.hbase.master.MasterFileSystem.createInitialFileSystemLayout(MasterFileSystem.java:151) at org.apache.hadoop.hbase.master.MasterFileSystem.<init>(MasterFileSystem.java:128) at org.apache.hadoop.hbase.master.HMaster.finishInitialization(HMaster.java:790) at org.apache.hadoop.hbase.master.HMaster.run(HMaster.java:603) at java.lang.Thread.run(Thread.java:744)

查到的还是什么window远程访问Hadoop的错误,最后查阅官方文档HADOOP-11064

看描述可以清楚这是Spark版本与Hadoop版本不适配导致的错误,遇到这种错误的一般是从Spark官网下载预编译好的二进制bin文件。

因此解决办法有两种:

1. 重新下载并配置Spark预编译好的对应的Hadoop版本

2. 从官网上下载Spark源码按照预装好的Hadoop版本进行编译(毕竟Spark的配置比Hadoop轻松不少)。

相关文章推荐

- Spark RDD API详解(一) Map和Reduce

- 使用spark和spark mllib进行股票预测

- Spark随谈——开发指南(译)

- Spark,一种快速数据分析替代方案

- eclipse 开发 spark Streaming wordCount

- Spark初探

- Spark Streaming初探

- 搭建hadoop/spark集群环境

- 整合Kafka到Spark Streaming——代码示例和挑战

- Spark 性能相关参数配置详解-任务调度篇

- 基于spark1.3.1的spark-sql实战-01

- 基于spark1.3.1的spark-sql实战-02

- 在 Databricks 可获得 Spark 1.5 预览版

- spark standalone模式 zeppelin安装

- Apache Spark 1.5.0正式发布

- Tachyon 0.7.1伪分布式集群安装与测试

- spark取得lzo压缩文件报错 java.lang.ClassNotFoundException

- tachyon与hdfs,以及spark整合

- 使用openfire,spark,fastpath webchat搭建在线咨询服务详细图文解说

- Spark源码分析(1) 从WordCount示例看Spark延迟计算原理