Design Patterns in C++ (1): The Factory Pattern

2015-08-26 11:55


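Today I came across Caffe's layer registration code and liked the design pattern behind it. So I will first summarize the factory design patterns, and then analyze them using Caffe's actual code.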

Category: Design Patterns, 2011-08-05 23:00

Tags: design patterns, C++, class, UML, product, programming

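Design patterns give software developers an effective way to reuse the design experience of experts. They build on the key features of object-oriented languages: encapsulation, inheritance, and polymorphism. Truly grasping the essence of design patterns can take a long time and a lot of hands-on experience. I have recently been reading books on design patterns, and for each pattern I wrote a small C++ example to deepen my understanding. My main references are "大话设计模式" (Big Talk Design Patterns) and "Design Patterns: Elements of Reusable Object-Oriented Software". This article covers the implementation of the factory patterns.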

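The factory patterns are creational patterns and fall roughly into three kinds: Simple Factory, Factory Method, and Abstract Factory. They sound alike, since they are all factories, so let's go through them one at a time.

We start with Simple Factory. Its defining trait is that the factory class itself decides which product to create. As a result, adding a new product means modifying the factory class.

That is a bit abstract, so an example helps. Suppose a maker of processor cores has only one factory, and that factory can build two models of core. A customer must tell the factory explicitly which model it wants. Here is one possible implementation.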

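```cpp
#include <cstddef>   // NULL
#include <iostream>
using namespace std;

enum CTYPE {COREA, COREB};

class SingleCore
{
public:
    virtual ~SingleCore() {}   // virtual destructor so products can be deleted through a base pointer
    virtual void Show() = 0;
};

// Single core A
class SingleCoreA: public SingleCore
{
public:
    void Show() { cout<<"SingleCore A"<<endl; }
};

// Single core B
class SingleCoreB: public SingleCore
{
public:
    void Show() { cout<<"SingleCore B"<<endl; }
};

// The only factory: it can build both core models and decides internally which one to make
class Factory
{
public:
    SingleCore* CreateSingleCore(enum CTYPE ctype)
    {
        if(ctype == COREA)            // the factory decides internally
            return new SingleCoreA(); // build core A
        else if(ctype == COREB)
            return new SingleCoreB(); // build core B
        else
            return NULL;
    }
};
```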
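For comparison, here is a minimal sketch of the same simple factory written in C++14 style. It assumes `std::unique_ptr` and `std::make_unique` are available. Three details are my own illustrative choices and are not part of the original example: the `CoreType` enum class, the `Name()` helper, and making `CreateSingleCore` static. The branching inside the factory is unchanged, so adding a core type still means editing the `switch`.

```cpp
#include <iostream>
#include <memory>
#include <string>

enum class CoreType { A, B };  // hypothetical scoped replacement for CTYPE

// Abstract product; Name() (hypothetical) lets callers inspect the result.
class SingleCore {
public:
    virtual ~SingleCore() = default;  // safe deletion through a base pointer
    virtual std::string Name() const = 0;
    void Show() const { std::cout << Name() << std::endl; }
};

class SingleCoreA : public SingleCore {
public:
    std::string Name() const override { return "SingleCore A"; }
};

class SingleCoreB : public SingleCore {
public:
    std::string Name() const override { return "SingleCore B"; }
};

// Still one factory that branches on the type tag; only ownership changed.
class Factory {
public:
    static std::unique_ptr<SingleCore> CreateSingleCore(CoreType type) {
        switch (type) {
            case CoreType::A: return std::make_unique<SingleCoreA>();
            case CoreType::B: return std::make_unique<SingleCoreB>();
        }
        return nullptr;  // only reached for out-of-range enum values
    }
};
```

A client would write `auto core = Factory::CreateSingleCore(CoreType::A); core->Show();` and never has to call `delete`.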

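As mentioned earlier, the main drawback of this design is that adding a new core type requires modifying the factory class. This violates the Open-Closed Principle: software entities (classes, modules, functions) should be open for extension but closed for modification.

This is where the Factory Method pattern comes in. Factory Method defines an interface for creating an object but lets subclasses decide which class to instantiate. In other words, it defers instantiation to subclasses.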

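That sounds abstract, so let's return to the same example. The core maker has earned a good deal of money and decides to open a second factory that builds only model B single cores. The original factory now builds only model A single cores.

The customer's job is now simply to pick the right factory: for a model A core, go to factory A; otherwise, go to factory B. There is no longer any need to tell a factory which model to build. Here is one implementation.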

```cpp
#include <iostream>
using namespace std;

class SingleCore
{
public:
    virtual ~SingleCore() {}   // virtual destructor for deletion through a base pointer
    virtual void Show() = 0;
};

// Single core A
class SingleCoreA: public SingleCore
{
public:
    void Show() { cout<<"SingleCore A"<<endl; }
};

// Single core B
class SingleCoreB: public SingleCore
{
public:
    void Show() { cout<<"SingleCore B"<<endl; }
};

class Factory
{
public:
    virtual ~Factory() {}
    virtual SingleCore* CreateSingleCore() = 0;
};

// Factory that builds core A
class FactoryA: public Factory
{
public:
    // Covariant return type: SingleCoreA* legally overrides SingleCore*
    SingleCoreA* CreateSingleCore() { return new SingleCoreA; }
};

// Factory that builds core B
class FactoryB: public Factory
{
public:
    SingleCoreB* CreateSingleCore() { return new SingleCoreB; }
};
```

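Factory Method has its own drawback: every new product requires a new factory. If the company grows quickly and launches many new cores, it must open a matching number of new factories. In C++ terms, that means defining one factory class after another. Factory Method therefore needs more class definitions than Simple Factory.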
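One common way to soften this drawback in C++ is to let a class template generate the concrete factories. This technique is not used in the original article. The sketch assumes C++14, and `ConcreteFactory` and `Name()` are hypothetical names. Each product still needs its own class; only the hand-written factory classes go away.

```cpp
#include <memory>
#include <string>

class SingleCore {
public:
    virtual ~SingleCore() = default;
    virtual std::string Name() const = 0;  // hypothetical helper for inspection
};

class SingleCoreA : public SingleCore {
public:
    std::string Name() const override { return "SingleCore A"; }
};

class SingleCoreB : public SingleCore {
public:
    std::string Name() const override { return "SingleCore B"; }
};

// Abstract creator, as in the Factory Method example.
class Factory {
public:
    virtual ~Factory() = default;
    virtual std::unique_ptr<SingleCore> CreateSingleCore() const = 0;
};

// One template replaces FactoryA, FactoryB, ...: the compiler instantiates
// a concrete factory for each product type that is actually used.
template <typename Product>
class ConcreteFactory : public Factory {
public:
    std::unique_ptr<SingleCore> CreateSingleCore() const override {
        return std::make_unique<Product>();
    }
};

using FactoryA = ConcreteFactory<SingleCoreA>;
using FactoryB = ConcreteFactory<SingleCoreB>;
```

Client code still works only through `Factory&`. A new core model now needs just its product class plus a one-line alias such as `using FactoryC = ConcreteFactory<SingleCoreC>;`.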

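Given Simple Factory and Factory Method, why do we also need Abstract Factory, and what does it do? Let's stay with the same example. The company's technology keeps improving, and it can now build multi-core processors as well as single-core ones. Both Simple Factory and Factory Method, as shown above, create only one kind of product (single cores), so neither covers this case.

Enter the Abstract Factory pattern. It provides an interface for creating families of related or dependent objects without specifying their concrete classes. Concretely, the company still runs two factories. One builds the model A single-core and multi-core processors; the other builds the model B single-core and multi-core processors. Here is the implementation.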

```cpp
#include <iostream>
using namespace std;

// Single core
class SingleCore
{
public:
    virtual ~SingleCore() {}   // virtual destructor for deletion through a base pointer
    virtual void Show() = 0;
};

class SingleCoreA: public SingleCore
{
public:
    void Show() { cout<<"Single Core A"<<endl; }
};

class SingleCoreB :public SingleCore
{
public:
    void Show() { cout<<"Single Core B"<<endl; }
};

// Multi core
class MultiCore
{
public:
    virtual ~MultiCore() {}
    virtual void Show() = 0;
};

class MultiCoreA : public MultiCore
{
public:
    void Show() { cout<<"Multi Core A"<<endl; }
};

class MultiCoreB : public MultiCore
{
public:
    void Show() { cout<<"Multi Core B"<<endl; }
};

// Factory
class CoreFactory
{
public:
    virtual ~CoreFactory() {}
    virtual SingleCore* CreateSingleCore() = 0;
    virtual MultiCore* CreateMultiCore() = 0;
};

// Factory A: builds only model A processors
class FactoryA :public CoreFactory
{
public:
    SingleCore* CreateSingleCore() { return new SingleCoreA(); }
    MultiCore* CreateMultiCore() { return new MultiCoreA(); }
};

// Factory B: builds only model B processors
class FactoryB : public CoreFactory
{
public:
    SingleCore* CreateSingleCore() { return new SingleCoreB(); }
    MultiCore* CreateMultiCore() { return new MultiCoreB(); }
};
```
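To show what the abstract factory gives the client, here is a hedged sketch of client code. It assumes C++14 and uses `std::unique_ptr` ownership instead of raw pointers. `DescribeProductLine` and `Name()` are hypothetical helpers. Because the client only ever sees a `CoreFactory`, the single-core and multi-core parts it receives always come from the same model family.

```cpp
#include <memory>
#include <string>

class SingleCore {
public:
    virtual ~SingleCore() = default;
    virtual std::string Name() const = 0;
};

class MultiCore {
public:
    virtual ~MultiCore() = default;
    virtual std::string Name() const = 0;
};

class SingleCoreA : public SingleCore { public: std::string Name() const override { return "Single Core A"; } };
class SingleCoreB : public SingleCore { public: std::string Name() const override { return "Single Core B"; } };
class MultiCoreA : public MultiCore { public: std::string Name() const override { return "Multi Core A"; } };
class MultiCoreB : public MultiCore { public: std::string Name() const override { return "Multi Core B"; } };

// One factory interface per product family.
class CoreFactory {
public:
    virtual ~CoreFactory() = default;
    virtual std::unique_ptr<SingleCore> CreateSingleCore() const = 0;
    virtual std::unique_ptr<MultiCore> CreateMultiCore() const = 0;
};

class FactoryA : public CoreFactory {
public:
    std::unique_ptr<SingleCore> CreateSingleCore() const override { return std::make_unique<SingleCoreA>(); }
    std::unique_ptr<MultiCore> CreateMultiCore() const override { return std::make_unique<MultiCoreA>(); }
};

class FactoryB : public CoreFactory {
public:
    std::unique_ptr<SingleCore> CreateSingleCore() const override { return std::make_unique<SingleCoreB>(); }
    std::unique_ptr<MultiCore> CreateMultiCore() const override { return std::make_unique<MultiCoreB>(); }
};

// Client code depends only on CoreFactory, so it cannot mix model A and model B parts.
std::string DescribeProductLine(const CoreFactory& factory) {
    std::unique_ptr<SingleCore> single = factory.CreateSingleCore();
    std::unique_ptr<MultiCore> multi = factory.CreateMultiCore();
    return single->Name() + " + " + multi->Name();
}
```

Switching the whole product line from model A to model B is a one-line change at the call site, for example `DescribeProductLine(FactoryB())`. The flip side is that adding a new kind of product means adding a method to `CoreFactory` and to every concrete factory.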

That completes the factory patterns. To reinforce them, here are UML diagrams of all three, drawn with Rational Rose 2003.

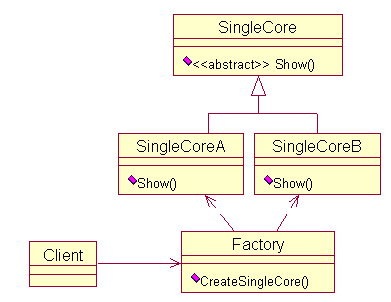

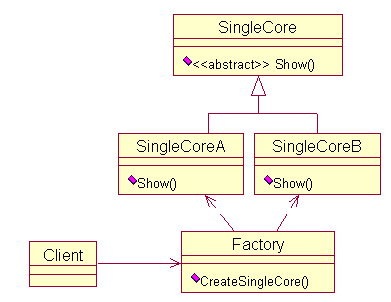

简单工厂模式的UML图:

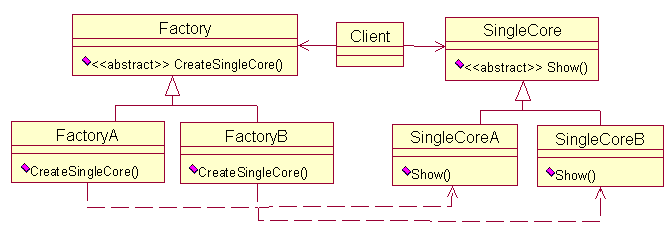

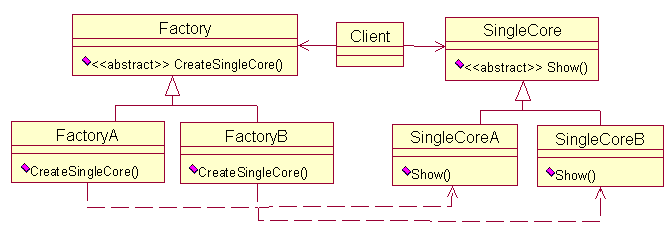

工厂方法的UML图:

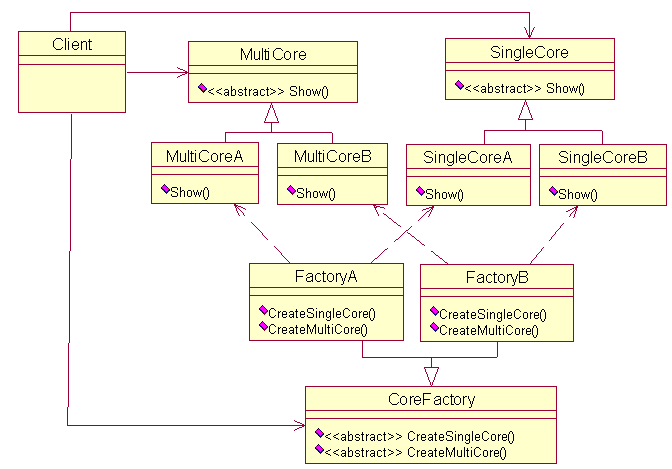

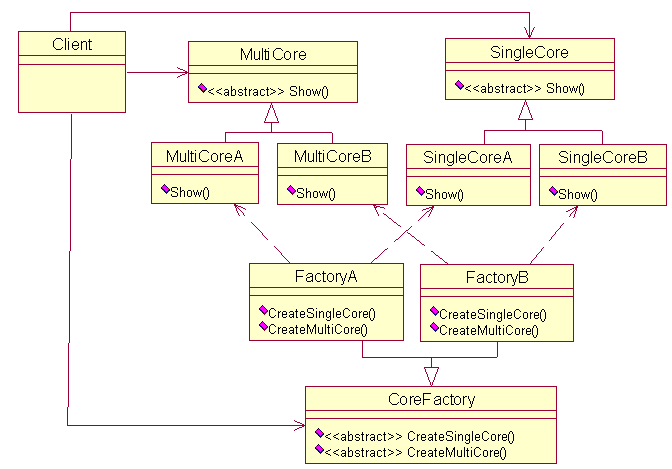

抽象工厂模式的UML图:

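2. Analyzing Caffe's Code

Caffe uses the simple factory approach: a single factory, LayerRegistry, creates every layer from its type string. Unlike the if/else chain in the Simple Factory example above, however, it looks the type up in a map of registered creator functions. A new layer type can therefore be added without modifying the factory class.

Below is the header file for Caffe's layer registration.

The header defines two ways to register a layer class:

- REGISTER_LAYER_CLASS, for layers created directly by their constructor.
- REGISTER_LAYER_CREATOR, for layers built by a separate creator function.

Both are function-like macros. They are used in much the same way as the macro that registers the entry functions in caffe.cpp.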


```cpp
/**
 * @brief A layer factory that allows one to register layers.
 * During runtime, registered layers could be called by passing a LayerParameter
 * protobuffer to the CreateLayer function:
 *
 *     LayerRegistry<Dtype>::CreateLayer(param);
 *
 * There are two ways to register a layer. Assuming that we have a layer like:
 *
 *   template <typename Dtype>
 *   class MyAwesomeLayer : public Layer<Dtype> {
 *     // your implementations
 *   };
 *
 * and its type is its C++ class name, but without the "Layer" at the end
 * ("MyAwesomeLayer" -> "MyAwesome").
 *
 * If the layer is going to be created simply by its constructor, in your c++
 * file, add the following line:
 *
 *    REGISTER_LAYER_CLASS(MyAwesome);
 *
 * Or, if the layer is going to be created by another creator function, in the
 * format of:
 *
 *    template <typename Dtype>
 *    shared_ptr<Layer<Dtype> > GetMyAwesomeLayer(const LayerParameter& param) {
 *      // your implementation
 *    }
 *
 * (for example, when your layer has multiple backends, see GetConvolutionLayer
 * for a use case), then you can register the creator function instead, like
 *
 * REGISTER_LAYER_CREATOR(MyAwesome, GetMyAwesomeLayer)
 *
 * Note that each layer type should only be registered once.
 */

#ifndef CAFFE_LAYER_FACTORY_H_
#define CAFFE_LAYER_FACTORY_H_

#include <map>
#include <string>

#include "caffe/common.hpp"
#include "caffe/proto/caffe.pb.h"

namespace caffe {

template <typename Dtype>
class Layer;

template <typename Dtype>
class LayerRegistry {
 public:
  typedef shared_ptr<Layer<Dtype> > (*Creator)(const LayerParameter&);
  typedef std::map<string, Creator> CreatorRegistry;

  static CreatorRegistry& Registry() {
    static CreatorRegistry* g_registry_ = new CreatorRegistry();
    return *g_registry_;
  }

  // Adds a creator.
  static void AddCreator(const string& type, Creator creator) {
    CreatorRegistry& registry = Registry();
    CHECK_EQ(registry.count(type), 0)
        << "Layer type " << type << " already registered.";
    registry[type] = creator;
  }

  // Get a layer using a LayerParameter.
  static shared_ptr<Layer<Dtype> > CreateLayer(const LayerParameter& param) {
    LOG(INFO) << "Creating layer " << param.name();
    const string& type = param.type();
    CreatorRegistry& registry = Registry();
    CHECK_EQ(registry.count(type), 1) << "Unknown layer type: " << type
        << " (known types: " << LayerTypeList() << ")";
    return registry[type](param);
  }

 private:
  // Layer registry should never be instantiated - everything is done with its
  // static variables.
  LayerRegistry() {}

  static string LayerTypeList() {
    CreatorRegistry& registry = Registry();
    string layer_types;
    for (typename CreatorRegistry::iterator iter = registry.begin();
         iter != registry.end(); ++iter) {
      if (iter != registry.begin()) {
        layer_types += ", ";
      }
      layer_types += iter->first;
    }
    return layer_types;
  }
};

template <typename Dtype>
class LayerRegisterer {
 public:
  LayerRegisterer(const string& type,
                  shared_ptr<Layer<Dtype> > (*creator)(const LayerParameter&)) {
    // LOG(INFO) << "Registering layer type: " << type;
    LayerRegistry<Dtype>::AddCreator(type, creator);
  }
};

#define REGISTER_LAYER_CREATOR(type, creator)                                  \
  static LayerRegisterer<float> g_creator_f_##type(#type, creator<float>);     \
  static LayerRegisterer<double> g_creator_d_##type(#type, creator<double>)

#define REGISTER_LAYER_CLASS(type)                                             \
  template <typename Dtype>                                                    \
  shared_ptr<Layer<Dtype> > Creator_##type##Layer(const LayerParameter& param) \
  {                                                                            \
    return shared_ptr<Layer<Dtype> >(new type##Layer<Dtype>(param));           \
  }                                                                            \
  REGISTER_LAYER_CREATOR(type, Creator_##type##Layer)

}  // namespace caffe

#endif  // CAFFE_LAYER_FACTORY_H_
```

Implementation file (layer_factory.cpp):

```cpp
// Make sure we include Python.h before any system header
// to avoid _POSIX_C_SOURCE redefinition
#ifdef WITH_PYTHON_LAYER
#include <boost/python.hpp>
#endif
#include <string>

#include "caffe/layer.hpp"
#include "caffe/layer_factory.hpp"
#include "caffe/proto/caffe.pb.h"
#include "caffe/vision_layers.hpp"

#ifdef WITH_PYTHON_LAYER
#include "caffe/python_layer.hpp"
#endif

namespace caffe {

// Get convolution layer according to engine.
template <typename Dtype>
shared_ptr<Layer<Dtype> > GetConvolutionLayer(
    const LayerParameter& param) {
  ConvolutionParameter_Engine engine = param.convolution_param().engine();
  if (engine == ConvolutionParameter_Engine_DEFAULT) {
    engine = ConvolutionParameter_Engine_CAFFE;
#ifdef USE_CUDNN
    engine = ConvolutionParameter_Engine_CUDNN;
#endif
  }
  if (engine == ConvolutionParameter_Engine_CAFFE) {
    return shared_ptr<Layer<Dtype> >(new ConvolutionLayer<Dtype>(param));
#ifdef USE_CUDNN
  } else if (engine == ConvolutionParameter_Engine_CUDNN) {
    return shared_ptr<Layer<Dtype> >(new CuDNNConvolutionLayer<Dtype>(param));
#endif
  } else {
    LOG(FATAL) << "Layer " << param.name() << " has unknown engine.";
  }
}

REGISTER_LAYER_CREATOR(Convolution, GetConvolutionLayer);

// Get pooling layer according to engine.
template <typename Dtype>
shared_ptr<Layer<Dtype> > GetPoolingLayer(const LayerParameter& param) {
  PoolingParameter_Engine engine = param.pooling_param().engine();
  if (engine == PoolingParameter_Engine_DEFAULT) {
    engine = PoolingParameter_Engine_CAFFE;
#ifdef USE_CUDNN
    engine = PoolingParameter_Engine_CUDNN;
#endif
  }
  if (engine == PoolingParameter_Engine_CAFFE) {
    return shared_ptr<Layer<Dtype> >(new PoolingLayer<Dtype>(param));
#ifdef USE_CUDNN
  } else if (engine == PoolingParameter_Engine_CUDNN) {
    PoolingParameter p_param = param.pooling_param();
    if (p_param.pad() || p_param.pad_h() || p_param.pad_w() ||
        param.top_size() > 1) {
      LOG(INFO) << "CUDNN does not support padding or multiple tops. "
                << "Using Caffe's own pooling layer.";
      return shared_ptr<Layer<Dtype> >(new PoolingLayer<Dtype>(param));
    }
    return shared_ptr<Layer<Dtype> >(new CuDNNPoolingLayer<Dtype>(param));
#endif
  } else {
    LOG(FATAL) << "Layer " << param.name() << " has unknown engine.";
  }
}

REGISTER_LAYER_CREATOR(Pooling, GetPoolingLayer);

// Get relu layer according to engine.
template <typename Dtype>
shared_ptr<Layer<Dtype> > GetReLULayer(const LayerParameter& param) {
  ReLUParameter_Engine engine = param.relu_param().engine();
  if (engine == ReLUParameter_Engine_DEFAULT) {
    engine = ReLUParameter_Engine_CAFFE;
#ifdef USE_CUDNN
    engine = ReLUParameter_Engine_CUDNN;
#endif
  }
  if (engine == ReLUParameter_Engine_CAFFE) {
    return shared_ptr<Layer<Dtype> >(new ReLULayer<Dtype>(param));
#ifdef USE_CUDNN
  } else if (engine == ReLUParameter_Engine_CUDNN) {
    return shared_ptr<Layer<Dtype> >(new CuDNNReLULayer<Dtype>(param));
#endif
  } else {
    LOG(FATAL) << "Layer " << param.name() << " has unknown engine.";
  }
}

REGISTER_LAYER_CREATOR(ReLU, GetReLULayer);

// Get sigmoid layer according to engine.
template <typename Dtype>
shared_ptr<Layer<Dtype> > GetSigmoidLayer(const LayerParameter& param) {
  SigmoidParameter_Engine engine = param.sigmoid_param().engine();
  if (engine == SigmoidParameter_Engine_DEFAULT) {
    engine = SigmoidParameter_Engine_CAFFE;
#ifdef USE_CUDNN
    engine = SigmoidParameter_Engine_CUDNN;
#endif
  }
  if (engine == SigmoidParameter_Engine_CAFFE) {
    return shared_ptr<Layer<Dtype> >(new SigmoidLayer<Dtype>(param));
#ifdef USE_CUDNN
  } else if (engine == SigmoidParameter_Engine_CUDNN) {
    return shared_ptr<Layer<Dtype> >(new CuDNNSigmoidLayer<Dtype>(param));
#endif
  } else {
    LOG(FATAL) << "Layer " << param.name() << " has unknown engine.";
  }
}

REGISTER_LAYER_CREATOR(Sigmoid, GetSigmoidLayer);

// Get softmax layer according to engine.
template <typename Dtype>
shared_ptr<Layer<Dtype> > GetSoftmaxLayer(const LayerParameter& param) {
  SoftmaxParameter_Engine engine = param.softmax_param().engine();
  if (engine == SoftmaxParameter_Engine_DEFAULT) {
    engine = SoftmaxParameter_Engine_CAFFE;
#ifdef USE_CUDNN
    engine = SoftmaxParameter_Engine_CUDNN;
#endif
  }
  if (engine == SoftmaxParameter_Engine_CAFFE) {
    return shared_ptr<Layer<Dtype> >(new SoftmaxLayer<Dtype>(param));
#ifdef USE_CUDNN
  } else if (engine == SoftmaxParameter_Engine_CUDNN) {
    return shared_ptr<Layer<Dtype> >(new CuDNNSoftmaxLayer<Dtype>(param));
#endif
  } else {
    LOG(FATAL) << "Layer " << param.name() << " has unknown engine.";
  }
}

REGISTER_LAYER_CREATOR(Softmax, GetSoftmaxLayer);

// Get tanh layer according to engine.
template <typename Dtype>
shared_ptr<Layer<Dtype> > GetTanHLayer(const LayerParameter& param) {
  TanHParameter_Engine engine = param.tanh_param().engine();
  if (engine == TanHParameter_Engine_DEFAULT) {
    engine = TanHParameter_Engine_CAFFE;
#ifdef USE_CUDNN
    engine = TanHParameter_Engine_CUDNN;
#endif
  }
  if (engine == TanHParameter_Engine_CAFFE) {
    return shared_ptr<Layer<Dtype> >(new TanHLayer<Dtype>(param));
#ifdef USE_CUDNN
  } else if (engine == TanHParameter_Engine_CUDNN) {
    return shared_ptr<Layer<Dtype> >(new CuDNNTanHLayer<Dtype>(param));
#endif
  } else {
    LOG(FATAL) << "Layer " << param.name() << " has unknown engine.";
  }
}

REGISTER_LAYER_CREATOR(TanH, GetTanHLayer);

#ifdef WITH_PYTHON_LAYER
template <typename Dtype>
shared_ptr<Layer<Dtype> > GetPythonLayer(const LayerParameter& param) {
  Py_Initialize();
  try {
    bp::object module = bp::import(param.python_param().module().c_str());
    bp::object layer = module.attr(param.python_param().layer().c_str())(param);
    return bp::extract<shared_ptr<PythonLayer<Dtype> > >(layer)();
  } catch (bp::error_already_set) {
    PyErr_Print();
    throw;
  }
}

REGISTER_LAYER_CREATOR(Python, GetPythonLayer);
#endif

// Layers that use their constructor as their default creator should be
// registered in their corresponding cpp files. Do not register them here.
}  // namespace caffe
```
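To make the mechanism easier to see, here is a stripped-down, standalone sketch of the same registry idea with no Caffe dependencies. It is a simplification, not Caffe's code, and it assumes C++11 or later. The following names are hypothetical stand-ins:

- `Registry` and `Registerer` stand in for `LayerRegistry` and `LayerRegisterer`.
- `REGISTER_CREATOR` and `REGISTER_CLASS` stand in for the two registration macros.
- `Param` stands in for `LayerParameter`, and the `engine` string is hypothetical.

The `Dtype` template parameter is dropped, and exceptions replace `CHECK_EQ`. Both registration styles are shown.

```cpp
#include <map>
#include <memory>
#include <stdexcept>
#include <string>

// Hypothetical stand-in for caffe::LayerParameter.
struct Param {
    std::string type;    // registry key, like LayerParameter::type()
    std::string engine;  // hypothetical backend selector
};

// Hypothetical stand-in for caffe::Layer<Dtype> (Dtype dropped).
class Layer {
public:
    virtual ~Layer() = default;
    virtual std::string Name() const = 0;
};

class Registry {
public:
    using Creator = std::unique_ptr<Layer> (*)(const Param&);
    using CreatorMap = std::map<std::string, Creator>;

    // Construct-on-first-use: the map exists before any static registerer,
    // in any translation unit, touches it.
    static CreatorMap& Map() {
        static CreatorMap registry;
        return registry;
    }

    // Throwing during static initialization terminates the program,
    // much like a failed CHECK_EQ would.
    static void AddCreator(const std::string& type, Creator creator) {
        if (!Map().emplace(type, creator).second)
            throw std::logic_error("Layer type " + type + " already registered.");
    }

    // No if/else over layer types: the map lookup replaces the branching.
    static std::unique_ptr<Layer> CreateLayer(const Param& param) {
        auto it = Map().find(param.type);
        if (it == Map().end())
            throw std::invalid_argument("Unknown layer type: " + param.type);
        return it->second(param);
    }
};

// Constructing a static Registerer performs the registration before main().
struct Registerer {
    Registerer(const std::string& type, Registry::Creator creator) {
        Registry::AddCreator(type, creator);
    }
};

#define REGISTER_CREATOR(type, creator) \
    static Registerer g_creator_##type(#type, creator)

#define REGISTER_CLASS(type)                                           \
    std::unique_ptr<Layer> Creator_##type##Layer(const Param& param) { \
        return std::unique_ptr<Layer>(new type##Layer(param));          \
    }                                                                   \
    REGISTER_CREATOR(type, Creator_##type##Layer)

// Way 1: a layer created directly by its constructor.
class ReLULayer : public Layer {
public:
    explicit ReLULayer(const Param&) {}
    std::string Name() const override { return "ReLU"; }
};
REGISTER_CLASS(ReLU);

// Way 2: a creator function that picks a backend, in the spirit of GetConvolutionLayer.
class ConvLayer : public Layer {
public:
    std::string Name() const override { return "Conv (default)"; }
};
class FastConvLayer : public Layer {
public:
    std::string Name() const override { return "Conv (fast)"; }
};

std::unique_ptr<Layer> GetConvLayer(const Param& param) {
    if (param.engine == "fast")
        return std::unique_ptr<Layer>(new FastConvLayer());
    return std::unique_ptr<Layer>(new ConvLayer());
}
REGISTER_CREATOR(Conv, GetConvLayer);
```

Compare this with the simple factory at the start of this article. `CreateLayer` has no branch per layer type; the only remaining branching (choosing a backend) lives inside individual creator functions such as `GetConvLayer`.

One known pitfall of self-registration through static objects is linking from a static library. If the registering object files come from a static library, the linker may discard them because nothing references them directly. The registrations then silently disappear unless the library is linked as a whole, for example with `--whole-archive` on GNU ld.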
