Importing MySQL Tables and Data into Hive with Sqoop

2015-04-10 17:52


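1. Install MySQL

List any previously installed MySQL packages:

rpm -qa | grep mysql

Force-remove the conflicting package, ignoring dependencies:

rpm -e mysql-libs-5.1.66-2.el6_3.i686 --nodeps

Install the server and client packages:

rpm -ivh MySQL-server-5.1.73-1.glibc23.i386.rpm

rpm -ivh MySQL-client-5.1.73-1.glibc23.i386.rpm

Run the setup script to secure the MySQL installation:

/usr/bin/mysql_secure_installation

Then, in the mysql client, grant remote access:

GRANT ALL PRIVILEGES ON hive.* TO 'root'@'%' IDENTIFIED BY '123' WITH GRANT OPTION;

FLUSH PRIVILEGES;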

2. Install Hive and add it to the environment variables.

3. Install Sqoop. (Note: Sqoop and Hive are installed on the same server.)

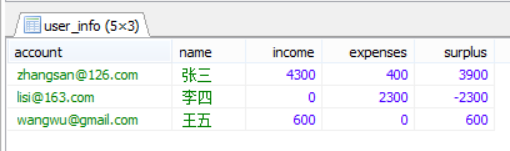

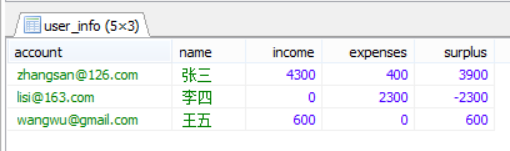

4. MySQL contains two tables, user_info and trade_detail (the CREATE TABLE statements are at the end).

5. Create the two tables in Hive:

create table trade_detail (id bigint, account string, income double, expenses double, time string) row format delimited fields terminated by '\t';

create table user_info (id bigint, account string, name string, age int) row format delimited fields terminated by '\t';

6. Import the data from MySQL directly into Hive:

sqoop import --connect jdbc:mysql://192.168.1.10:3306/itcast --username root --password 123 --table trade_detail --hive-import --hive-overwrite --hive-table trade_detail --fields-terminated-by '\t'

sqoop import --connect jdbc:mysql://192.168.1.10:3306/itcast --username root --password 123 --table user_info --hive-import --hive-overwrite --hive-table user_info --fields-terminated-by '\t'
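The two import commands differ only in the table name, so they could be driven by a loop. The following is a minimal sketch, not part of the original post: the function name import_to_hive, the DRY_RUN switch, and the password file path are illustrative assumptions. It also swaps --password 123 for Sqoop's --password-file option so that the password does not appear on the command line. By default it only prints the commands.

```shell
#!/bin/sh
# Sketch: import both tables with one loop instead of two hand-written commands.
# Assumptions (not from the original post): DRY_RUN, PASSWORD_FILE and
# import_to_hive are names invented for this example.

JDBC_URL="jdbc:mysql://192.168.1.10:3306/itcast"
PASSWORD_FILE="/user/root/mysql.pwd"   # hypothetical path to a file holding the password
DRY_RUN="${DRY_RUN:-1}"                # 1 = only print the command, anything else = run it

import_to_hive() {
  table="$1"
  # Rebuild the positional parameters as the full sqoop command line.
  set -- sqoop import \
    --connect "$JDBC_URL" \
    --username root \
    --password-file "$PASSWORD_FILE" \
    --table "$table" \
    --hive-import --hive-overwrite \
    --hive-table "$table" \
    --fields-terminated-by '\t'
  if [ "$DRY_RUN" = "1" ]; then
    # Printed without shell quoting; meant for reading, not for copy and paste.
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

for t in trade_detail user_info; do
  import_to_hive "$t"
done
```

Running the script with DRY_RUN=0 in the environment would execute the two imports instead of printing them.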

7. Create a result table that stores the output of a query (total income, expenses, and surplus per account):

create table result row format delimited fields terminated by '\t' as
select t2.account, t2.name, t1.income, t1.expenses, t1.surplus
from user_info t2
join (select account, sum(income) as income, sum(expenses) as expenses, sum(income-expenses) as surplus
      from trade_detail group by account) t1
on (t1.account = t2.account);
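As a sanity check, the totals that the result table should contain can be recomputed from the ten sample rows in the appendix. The sketch below is an illustration rather than part of the original workflow: it hard-codes the account, income, and expenses columns of trade_detail and sums them per account with awk. The function names trade_rows and expected_totals are made up for this example.

```shell
#!/bin/sh
# Sketch: recompute per-account income, expenses and surplus from the sample data.

# account, income, expenses for the ten rows inserted into trade_detail.
trade_rows() {
  cat <<'EOF'
zhangsan@126.com 2000 0
lisi@163.com 0 300
wangwu@gmail.com 600 0
zhangsan@126.com 0 200
lisi@163.com 0 1700
zhangsan@126.com 300 0
zhangsan@126.com 1500 0
zhangsan@126.com 0 200
zhangsan@126.com 500 0
lisi@163.com 0 300
EOF
}

# Same aggregation as the inner query: group by account, sum each column.
expected_totals() {
  trade_rows | awk '
    { income[$1] += $2; expenses[$1] += $3 }
    END {
      for (a in income)
        printf "%s\t%d\t%d\t%d\n", a, income[a], expenses[a], income[a] - expenses[a]
    }' | sort
}

expected_totals
```

For the sample data this gives 0, 2300, -2300 for lisi@163.com; 600, 0, 600 for wangwu@gmail.com; and 4300, 400, 3900 for zhangsan@126.com. The Hive table additionally carries the name column from user_info, and because the join is an inner join, zhongliu@126.com (which has no trades) does not appear in result.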

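Create a table named user. (On Hive 1.2 and later, user is a reserved keyword, so the name may need to be quoted with backticks.)

create table user (id int, name string) row format delimited fields terminated by '\t';

Load data from the local file system into Hive:

load data local inpath '/root/user.txt' into table user;

Create an external table:

create external table stubak (id int, name string) row format delimited fields terminated by '\t' location '/stubak';

Create a partitioned table

Difference between an ordinary table and a partitioned table: use a partitioned table when large amounts of data keep being added.

create table book (id bigint, name string) partitioned by (pubdate string) row format delimited fields terminated by '\t';

Load data into a partition:

load data local inpath './book.txt' overwrite into table book partition (pubdate='2010-08-22');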
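Each partition of a Hive table is stored as its own subdirectory, named column=value, under the table's directory, which is what makes partitioning a good fit for data that arrives in batches. The sketch below only builds strings and does not call Hive. WAREHOUSE assumes the default hive.metastore.warehouse.dir of /user/hive/warehouse and the default database; the helper names, the second date, and the per-date file names are hypothetical.

```shell
#!/bin/sh
# Sketch: show where each partition of the book table would live and the
# HiveQL statement that would load it.

WAREHOUSE="/user/hive/warehouse"   # assumed default warehouse location

# HDFS directory that holds one partition of the book table.
partition_dir() {
  printf '%s/book/pubdate=%s\n' "$WAREHOUSE" "$1"
}

# HiveQL statement that loads a local file into one partition.
load_stmt() {
  printf "load data local inpath '%s' overwrite into table book partition (pubdate='%s');\n" "$1" "$2"
}

for d in 2010-08-22 2010-08-23; do
  partition_dir "$d"
  load_stmt "./book_$d.txt" "$d"
done
```

A query that filters on the partition column, such as select * from book where pubdate='2010-08-22', then only needs to read that one directory.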

------------------------------- Appendix: MySQL table creation script -------------------------------

-- Dumping structure for table mydb.trade_detail
CREATE TABLE IF NOT EXISTS `trade_detail` (
  `id` int(11) NOT NULL,
  `account` varchar(100) DEFAULT NULL,
  `income` double DEFAULT NULL,
  `expenses` double DEFAULT NULL,
  `time` timestamp NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-- Dumping data for table mydb.trade_detail: ~10 rows (approximately)
/*!40000 ALTER TABLE `trade_detail` DISABLE KEYS */;
INSERT INTO `trade_detail` (`id`, `account`, `income`, `expenses`, `time`) VALUES
(1, 'zhangsan@126.com', 2000, 0, '2015-04-10 00:00:00'),
(2, 'lisi@163.com', 0, 300, '2015-04-10 15:06:13'),
(3, 'wangwu@gmail.com', 600, 0, '2015-04-10 15:06:41'),
(4, 'zhangsan@126.com', 0, 200, '2015-04-10 15:07:04'),
(5, 'lisi@163.com', 0, 1700, '2015-04-10 15:08:32'),
(6, 'zhangsan@126.com', 300, 0, '2015-04-10 15:08:29'),
(7, 'zhangsan@126.com', 1500, 0, '2015-04-10 15:08:27'),
(8, 'zhangsan@126.com', 0, 200, '2015-04-10 15:08:21'),
(9, 'zhangsan@126.com', 500, 0, '2015-04-10 15:08:18'),
(10, 'lisi@163.com', 0, 300, '2015-04-10 15:08:17');
/*!40000 ALTER TABLE `trade_detail` ENABLE KEYS */;

-- Dumping structure for table mydb.user_info
CREATE TABLE IF NOT EXISTS `user_info` (
  `id` int(11) NOT NULL,
  `account` varchar(100) DEFAULT NULL,
  `name` varchar(50) DEFAULT NULL,
  `age` int(11) DEFAULT NULL,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-- Dumping data for table mydb.user_info: ~4 rows (approximately)
/*!40000 ALTER TABLE `user_info` DISABLE KEYS */;
INSERT INTO `user_info` (`id`, `account`, `name`, `age`) VALUES
(1, 'zhangsan@126.com', '张三', 33),
(2, 'lisi@163.com', '李四', 45),
(3, 'wangwu@gmail.com', '王五', 32),
(4, 'zhongliu@126.com', '赵柳', 26);

Use Sqoop to export data from HDFS into a MySQL database:

./sqoop-export --connect "jdbc:mysql://192.168.7.7:3306/test_ysf?useUnicode=true&characterEncoding=utf-8" --username root --table dg_dim_auction_d --password password --export-dir /ysf/input/dg_dim_auction_d --fields-terminated-by ','

Use Sqoop to import data from a MySQL database into HDFS:

./sqoop import --connect jdbc:mysql://192.168.7.7:3306/asto_ec_web --username root --password password --table shop_tb --target-dir /ysf/input/shop_tb --fields-terminated-by ','

$SQOOP_HOME/bin/sqoop import \
  --connect "jdbc:mysql://192.168.7.7:3306/retail_loan?useUnicode=true&characterEncoding=utf-8&zeroDateTimeBehavior=convertToNull&transformedBitIsBoolean=true" \
  --username root --password password \
  --query 'SELECT store_id,order_id,order_date,cigar_name,wholesale_price,purchase_amount,order_amount,money_amount,producer_name FROM datag_yancao_historyOrderDetailData where $CONDITIONS' \
  -m 1 \
  --target-dir /ycd/input/offline/$year_month/$day/tobacco_order_details \
  --fields-terminated-by '\t' >> $PROJECT_LOG_HOME/offline/$date_cur/sqoop_ycd.log
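Sqoop requires the literal token $CONDITIONS in the WHERE clause of a --query import and substitutes its own condition for it at run time; -m 1 runs the import with a single map task. The quoting matters because the shell must not expand the token first. The sketch below illustrates this with plain shell variables and a shortened column list. The values given to year_month and day are assumed, since the original script defines them elsewhere.

```shell
#!/bin/sh
# Sketch: how quoting decides whether Sqoop ever sees $CONDITIONS.

# Assumed values for illustration; the original script sets these elsewhere.
year_month="201504"
day="10"

# Stands in for the usual case where no such shell variable is set.
CONDITIONS=""

# Single quotes: the shell passes $CONDITIONS through untouched.
query_single='SELECT store_id, order_id FROM datag_yancao_historyOrderDetailData WHERE $CONDITIONS'

# Double quotes: the dollar sign has to be escaped to get the same string.
query_double="SELECT store_id, order_id FROM datag_yancao_historyOrderDetailData WHERE \$CONDITIONS"

# Double quotes without the escape: the shell expands the variable to an
# empty string and Sqoop never receives the placeholder.
query_broken="SELECT store_id, order_id FROM datag_yancao_historyOrderDetailData WHERE $CONDITIONS"

# The path variables, by contrast, are meant to be expanded by the shell.
target_dir="/ycd/input/offline/$year_month/$day/tobacco_order_details"

printf '%s\n' "$query_single" "$query_double" "$query_broken" "$target_dir"
```

The first two printed lines are identical and end in WHERE $CONDITIONS; the third ends right after WHERE. When more than one mapper is requested for a free-form query, Sqoop also needs a --split-by column.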

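Without the parameters highlighted in red in the original post (presumably useUnicode=true&characterEncoding=utf-8 in the JDBC URL), the data may come out garbled.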
